How data modeling has evolved for modern data teams

Previously, we looked at the best practices for modeling Snowplow data in dbt. In this post, we’ll look more broadly at behavioral data modeling, and what opportunities lay ahead for organizations looking to drive value with behavioral data.

In the early days of digital analytics, you didn’t model your data. You didn’t have to. Tools like Google Analytics and later, Adobe Analytics were really the only solutions for analytics available. Both of these products ‘packaged up’ the data journey, from collection, to transformation and reporting, all into a single solution.

At the time when these products burst onto the scene, the computation required to transform data was expensive. This meant that GA and Adobe Analytics had to make hard decisions about how data models should be structured in an efficient and cost effective manner. The traditional web data model was born.

The traditional data model

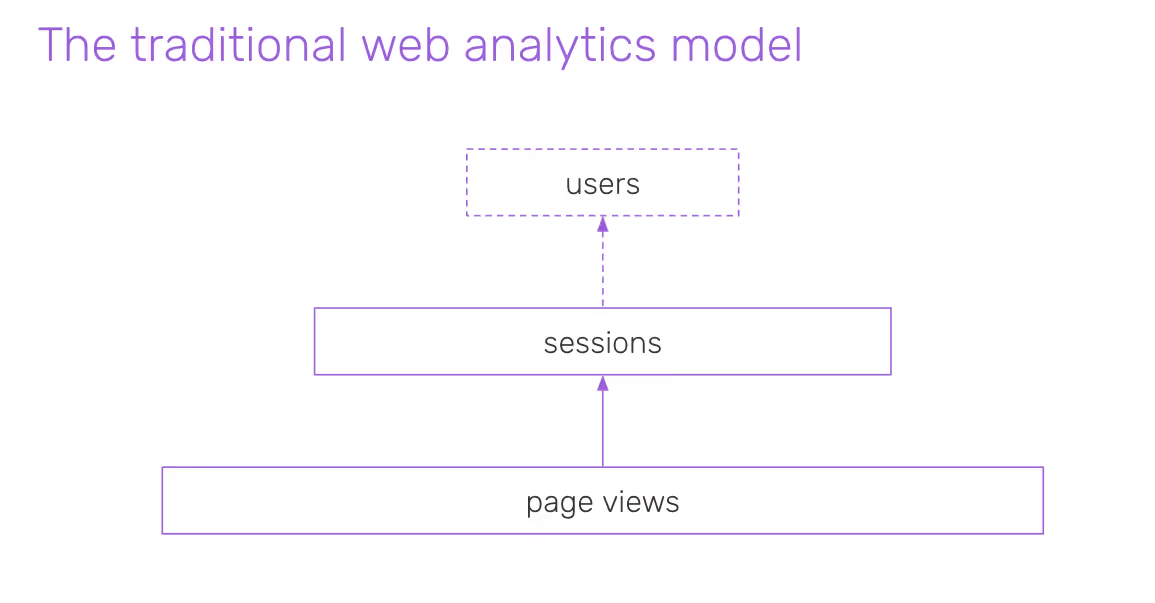

As we touched on in chapter 2, the traditional web data model was essentially built up around the idea of a ‘session’.

Sessions were a way to summarize a series of interactions, aggregating certain web metrics over time to paint a picture of what’s happening. Definitions of sessions varied, but by and large the industry standardized on the idea of a period of activity where no two actions were separated by more than 30 minutes.

This is the most common way of modeling behavioral data on web, but this approach comes with certain limitations.

First, there’s a higher level of abstraction often missing from this view – the user level. This gives us useful information about users that businesses find especially useful. Different teams can use this information to answer specific questions, for example:

- Product teams: “am I getting better at retaining newer cohorts of users?

- Marketing teams: are we acquiring high value users?

- Customer teams: how effective are we at growing the value of our customers over time?

Then there are lower, deeper levels of aggregation missing, below the session level. The most common of these are funnels. While most web analytics tools enabled users to create fixed “funnels” and analyze how users progress through them, they didn’t provide any way for analysts to engage with shorter or longer periods than a session in their journey.

Or to take a more real example of the limitations of sessionization, let’s consider a non-traditional business model, say, a streaming service like Netflix. Within a platform like Netflix, the concept of a session doesn’t make much sense, nor would it help a data team better understand more granular behavior, such as how a certain content series was consumed by audiences over several weeks, or months.

- How do consumption patterns vary between different series? (Are some more bingable than others?)

- How do consumption patterns very between different user types?

- To what extent is more bingable content better at driving users to becoming a subscriber? (Or staying subscribed?)

These are kinds of questions that dive deeper into user behavior, which break the paradigms of traditional data models.

Digital analytics typically catered to standardized, transactional behaviors, such as retail or ecommerce purchases. But even in the retail industry, companies have evolved from these early ways of working with data. For example, many people use delivery services from grocery stores like Target, which follows a fairly typical item select – basket – order business model. But what if you want to dive deeper into how different customers were buying their items?

What if you wanted to know how long the user journey takes from filling a basket to making an order? For a family purchasing their weekly groceries, this might play out over several days.

Modeling data to answer a broader set of questions

As businesses evolve, the questions they want to ask about their users become more nuanced. We can see that the traditional model, built around sessions, isn’t suited to many businesses today. Particularly since many modern websites are more like ‘web products’ than the standard web structures we saw in the early 2000s.

This evolution in data modeling was first recognized by Gary Angel, CTO of consulting firm Semphonic (later acquired by Ernst and Young), and a pioneer of advanced analytics. Angel predicted as early as 2008 that warehousing web data and stitching it with behavioral data from multiple sources would unlock a whole new level of opportunity in analytics.

“I think that probably the biggest direction in analytics is going to be increasing interest in data warehousing analytics information and joining it to customer information, really opening up that information from an IT perspective or platforms that are not just reporting platforms, give people a wide variety of analytic, modeling and statistical tools. The sophisticated organisations that we are working with that have sort of gone through the process of mastering web analytics, have stuck one or two years in getting a basic infrastructure in place, getting good at web analytics reporting. Where they are going now is warehousing that data, joining it to their customer information, opening it up to a whole new set of tools, a whole new set of analysts, a whole new set of reports.” – Interview with Gary Angel in 2008

Gary Angel was exactly right. When Amazon launched its cloud data warehouse, Redshift in 2012, it became possible (and cost effective, even at small scale) for companies to process data with solutions like Apache Hadoop, Hive and Spark, without relying on restrictive tools like Google Analytics. Angel foresaw that organizations would use these tools for advanced analytics to build a picture of their user journey, aggregating data in a way that made sense to them.

Once it was possible for companies to break out of the packaged analytics paradigm, it became important to make the leap for two key reasons:

- Organizations didn’t fit the traditional mould (i.e. standard, ecommerce-shaped customer journeys), such as subscription businesses like Gousto and two-sided marketplaces like Unsplash;

- Organizations wanted to go deeper with their data, ask more dynamic questions and evolve their analytics over time.

As businesses evolve, so must their analytics

Today, many companies see their analytics as a way to differentiate. They are exploring ways to do more advanced things with their data. Even industries that have been well-served by tools like Google Analytics are building their own data infrastructure or looking to the modern data stack to capture and model behavioral data. For example:

- Media organizations are looking beyond standard sessionization to measure engagement with their content, with new ways to calculate time on page or to attribute the success of their ad campaigns;

- Retailers are experimenting with personalization and using behavioral data to measure buying propensity, so they can trigger offers and promotions at optimal times.

These are just two examples, but the move to advanced analytics has become more mainstream in almost every sector. Companies now demand more flexibility over how they capture and model behavioral data, and want the ability to go beyond basic reporting to use cases that deliver real business value.

Analysts, for example, used to be limited to building business reports. The process of implementing a new reporting system, from tracking, processing and visualizing the data might take anywhere from nine to 18 months to complete. For this reason, organizations would often be ‘stuck’ with their report, unable to adapt to changes in the business or product.

But in today’s competitive landscape, analytics has to keep up with the pace of the business. Product development, to take one example, is only possible when supported by robust analytics that can evolve as product features evolve, as the customer journey changes, or as the business grows. As many startups know, this is not a one-and-done process, but a cycle of continuous improvement.

To achieve this, data teams are turning increasingly to a more flexible toolkit to help them to capture and model their data. Breaking out of the limitations of Google and Adobe Analytics, they’re turning instead to a combination of solutions to meet the needs of their data journey – choosing from a marketplace of tools to build a ‘modern data stack’.

Where Snowplow and dbt come in

Within this ecosystem, Snowplow and dbt stand out as highly flexible, open-source solutions that can equip data teams to ask more questions of their behavioral data.

With Snowplow, you have a robust set of tooling for capturing behavioral data, with total control and assurance that your data will land in your data warehouse in a clean, well-structured format. With dbt, Analytics Engineers can transform their data seamlessly, build a seamless workflow for data modeling, and evolve their models over time to meet the needs of their business.

Crucially, both Snowplow and dbt are built from the ground up to support organizations evolve their behavioral data as they become more data sophisticated and start using the data in new ways, and as their business and products and services change. Traditional packaged analytics tools assumed that implementation was a one-off process and that there was no need to evolve the data beyond that. But modern organizations are constantly evolving the way they use their data.

Snowplow enables them to do this with, for example, the ability to version definitions of events and entities, and roll out new versions in a controlled, stepwise fashion. Cloud data warehouses offer the compute power required to recompute metrics on top of the entire data set (if those definitions change). Better yet, dbt offers structured workflows to enable organizations to evolve their data models incrementally as their behavioral data changes (the Snowplow input) and their use cases multiply and evolve (the output).

In unison, Snowplow and dbt enable the data team to achieve radically higher levels of productivity, supporting organizations moving much much faster with their data than was possible with the last generation of tooling.

Where productivity is high, and data flows freely through the business, it’s faster and easier for the organization to answer key questions. It’s these data-nimble companies that are able to iterate quickly on their choices, making smart choices in product, marketing and other areas on the fly that make all the difference for their users.