Forwarding Events to Downstream Destinations

Deliver Real-Time Data to Downstream Platforms

Trigger downstream actions instantly with enriched, low-latency behavioral data—delivered in real time from your Snowplow pipeline to any system.

Low-Latency Delivery

Stream events in real time to internal systems or external platforms, empowering teams to take action as customer behaviors unfold.

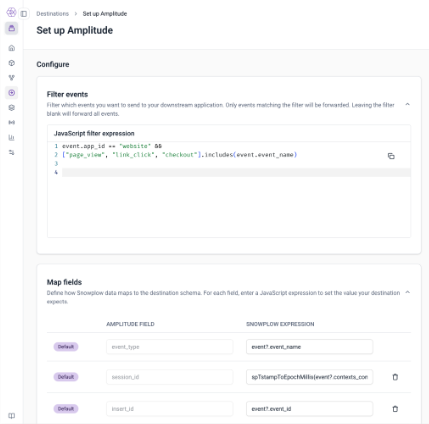

Self-Service UI

Create and manage data forwarding workflows directly in your Snowplow Console. Define which events to transform and send, all in a few clicks.

End-to-End Data Integrity

Forward only validated, enriched events, never raw or partial data, preserving data quality and trust across the real-time workflows in your technology stack.

Power Real-Time Operations

Event Forwarding allows teams to act on behavioral data the moment it’s generated, whether for real-time analytics, marketing campaign triggers, or internal stream processing. Events are enriched upstream and delivered to SaaS applications and stream destinations via pre-built API integrations.

Power user journeys and marketing personalization in real time

Detect risky behaviors or fraudulent activity to trigger alerts

Enable event-driven microservices with low latency

Seamless Integration with Your Stack

Integrate Snowplow’s governed behavioral data with the tools and systems your teams rely on, whether through out-of-the-box integrations with Braze, Amplitude, and Mixpanel or through custom connections via HTTP API destinations. These integrations are ready to use with minimal setup, bringing immediate value to business teams.

Stream to native destinations such as Braze and Amplitude in real time

Deliver enriched, ready-to-use JSON events for downstream processing

Reduce engineering effort to build and maintain custom integrations

Flexible Transformations in Console

Give teams the flexibility to shape and filter data using custom JavaScript expressions directly within the Console. Define precise logic for which events to forward and how to map fields to destination schemas, and apply real-time transformations without additional tooling.

Simplified setup within the Snowplow Console UI

Full transparency and control over schema and payload

Test transformations to ensure downstream compatibility
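To make the filter-and-map idea concrete, here is a minimal sketch in plain JavaScript. It is an illustration under stated assumptions, not the Console's actual expression syntax: the function names (`shouldForward`, `toDestinationPayload`, `forward`) and the destination payload shape are hypothetical, and the sample event uses a handful of field names from Snowplow's enriched event format with invented values.

```javascript
// Hypothetical enriched event. Values are invented for illustration.
const sampleEvent = {
  event_id: "c6ef3124-b53a-4b13-a233-0088f79dcbcb",
  event_name: "add_to_cart",
  app_id: "web-shop",
  user_id: "user-123",
  collector_tstamp: "2024-01-01T12:00:00.000Z",
};

// Filter logic: forward only add_to_cart events that carry a user ID.
function shouldForward(event) {
  return event.event_name === "add_to_cart" && Boolean(event.user_id);
}

// Mapping logic: reshape the event into an assumed destination schema.
// The keys on the left are hypothetical, not a real destination's API.
function toDestinationPayload(event) {
  return {
    external_id: event.user_id,
    name: event.event_name,
    time: event.collector_tstamp,
    properties: {
      app_id: event.app_id,
      source_event_id: event.event_id,
    },
  };
}

// Combine both steps: return the mapped payload, or null to drop the event.
function forward(event) {
  return shouldForward(event) ? toDestinationPayload(event) : null;
}

console.log(JSON.stringify(forward(sampleEvent), null, 2));
```

Keeping the filter and the mapping as separate functions mirrors the two decisions described above (which events to forward, and how to map their fields), and lets each be tested against sample events on its own.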