The Power of Behavioral Data for Real-Time Personalization

Organizations are starting to realize the value of real-time data to power data products with increasing levels of personalization and tailored experiences.

The combination of real-time data and AI enables powerful traditional applications, including:

- User Segmentation: Classifying a customer based on various features and assigning them to a cohort

- Anomaly Detection: Identifying anomalies or outliers in real-time, enabling rapid detection of fraud, system failures, or security breaches

- Targeted Advertising: Combining a user's current real-time context and historical data to determine the best ads to serve or suppress

These could certainly unlock significant business value in the right context, but some applications are now only truly possible in the new Generative AI paradigm, such as:

- Dynamic Content Generation: Create personalized content like product descriptions, marketing emails, article headlines and placement, or reports on the fly based on a user's real-time data and preferences

- Conversational AI: Generative language models can engage in free-form dialogues, understand context, and generate relevant responses in real time. This enables more natural conversational experiences compared to discriminative models

- Adaptive User Interfaces: Generative models could dynamically adapt the user experience of apps/websites based on how the user interacts and navigates in real-time, personalizing layouts, workflows and micro-copy

Wherever a business is on their journey of adopting these capabilities, they must be aware that the bar is slowly but surely being raised. There is an inexorable AI arms race of "nice to haves" becoming table stakes.

Whereas cohort-level personalization used to be acceptable, now users are coming to expect their unique preferences to be accounted for in their experience. In the context of highly competitive or even commoditized products or services, effective use of the technology could therefore be the deciding factor between winning or losing a customer to another business.

The emerging Generative AI paradigm will only serve to accelerate this race and widen the gap between said winners and losers.

To personalize at this level, it is necessary to build sophisticated customer profiles, incorporating various sources of customer data - the most significant of which and arguably the most underserved is behavioral data, both structured and unstructured. The question then becomes how to dynamically incorporate behaviors into these customer profiles in order to maximize opportunities to delight customers at every touchpoint.

However, this is a challenging engineering feat to achieve and must also be executed within the bounds of data privacy requirements. Snowplow’s customer data infrastructure (CDI) is intentionally designed to support customers in meeting this challenge, regardless of their approach to leveraging AI within their data products.

Architectures to Support Real-Time Personalization

We can think about the architectures to support real-time personalization as spanning three distinct eras:

- Initial Separation Era: There was a clear delineation and separation of concerns between data platforms (for analytics) and stream processors (for low-latency pipelines) as two distinct and separate architectures

- Lambda Era: A single overarching architecture that consisted of a real-time component for operational use cases and an analytical component for slower data warehousing workloads - however, this still required maintaining multiple versions of the same data across those components, which would achieve "eventual consistency" by rationalizing the versions later

- Unified Era: Blurring of lines between analytics and streaming workloads - data platforms have capabilities allowing unified logs and true real-time analytical systems (Databricks' Delta Live Tables and Snowflake Dynamic Tables)

As it stands, we're straddling the Lambda and Unified Architecture Eras - many organizations that formally would have defaulted to the former now have options. The choice is an important one and would take significant effort to unwind - as such, it's important to consider the benefits of each approach:

Centralizing onto a Data Platform:

- Allows a simplified infrastructure as opposed to two independent, parallel systems - this theoretically halves the operational overhead and reduces the points of failure to be addressed

- No segregation of batch/analytical and streaming datasets that would otherwise need to be integrated together requiring further data engineering effort

- Governance and security only need to be applied to a single pipeline rather than being replicated and then maintained to ensure consistency between two separate data flows

- A layer of abstraction is placed on top of complex streaming infrastructure resulting in a much friendlier learning curve for FTEs given that these platforms leverage SQL. This minimizes the need for data engineering expertise and means that analytics experts are better equipped to self-serve

- Overall, this approach entails less complexity and effort to build and maintain relative to traditional streaming systems, which in turn facilitates easier iteration, mitigation against unforeseen impacts of changes, and compressed time to value

Utilizing a traditional streaming solution offers benefits, which are often the converse of the above:

- Dedicated expertise optimizing for high-volume, low-latency streaming, potentially offering advanced, fine-tuned capabilities tailored for mission-critical real-time personalization use cases

- Purpose-built architectures designed from the ground up to handle unique challenges of real-time data like high throughput, fault tolerance, and strict latency requirements for better performance and reliability

- Mature ecosystem with wide range of specialized third-party tools, integrations and larger developer community providing diverse solutions and support for complex streaming needs

- Conversely to the above - flexibility to independently scale and optimize separate batch and streaming workloads based on unique requirements

Dispelling a Common Myth

A common misconception about real-time personalization and machine learning models is that new information should constantly feed into and retrain the algorithm on a continuous basis - i.e., the model is always up to date with all new data that the business has captured.

In reality, this isn't practical. Firstly, it would likely be prohibitively expensive - this would require a considerable amount of compute, even with models that can iteratively incorporate new data rather than taking a "drop and recompute" approach of retraining on the dataset as a whole on every iteration.

If the value generated by this approach was proportional to this cost, then it would be viable - but a training dataset by definition should be very large. Continuous retraining would entail adding relatively marginal amounts of data to the initial training dataset, making negligible difference to the decisions or "rule set" that it generates.

To illustrate this with an example - take the use case of a real-time personalization engine. As a starting point, you may look to train a recommender on months of content selection behaviors. Incrementally retraining the model on additional minutes, hours, or even days worth of data would be very unlikely to influence the resultant decisions the model would make.

It's important therefore to establish that the real-time element of a behavioral data pipeline usually acts as a "triggering mechanism" as opposed to a live feed into a machine learning data product.

The event would usually be sent to an API, which in turn queries a precalculated rule set. That rule set is then retrained periodically - the exact frequency of retraining should take into account the trade-off discussed above.

It could in fact be optimized over time - i.e., are the results from retraining on a daily basis worth the cost in terms of favorable outcomes (e.g., conversions from a recommender) relative to retraining weekly?

There will be an inflection point of diminishing returns at which a higher frequency yields a negligible uplift in model performance.

Snowplow's Role in These Topologies

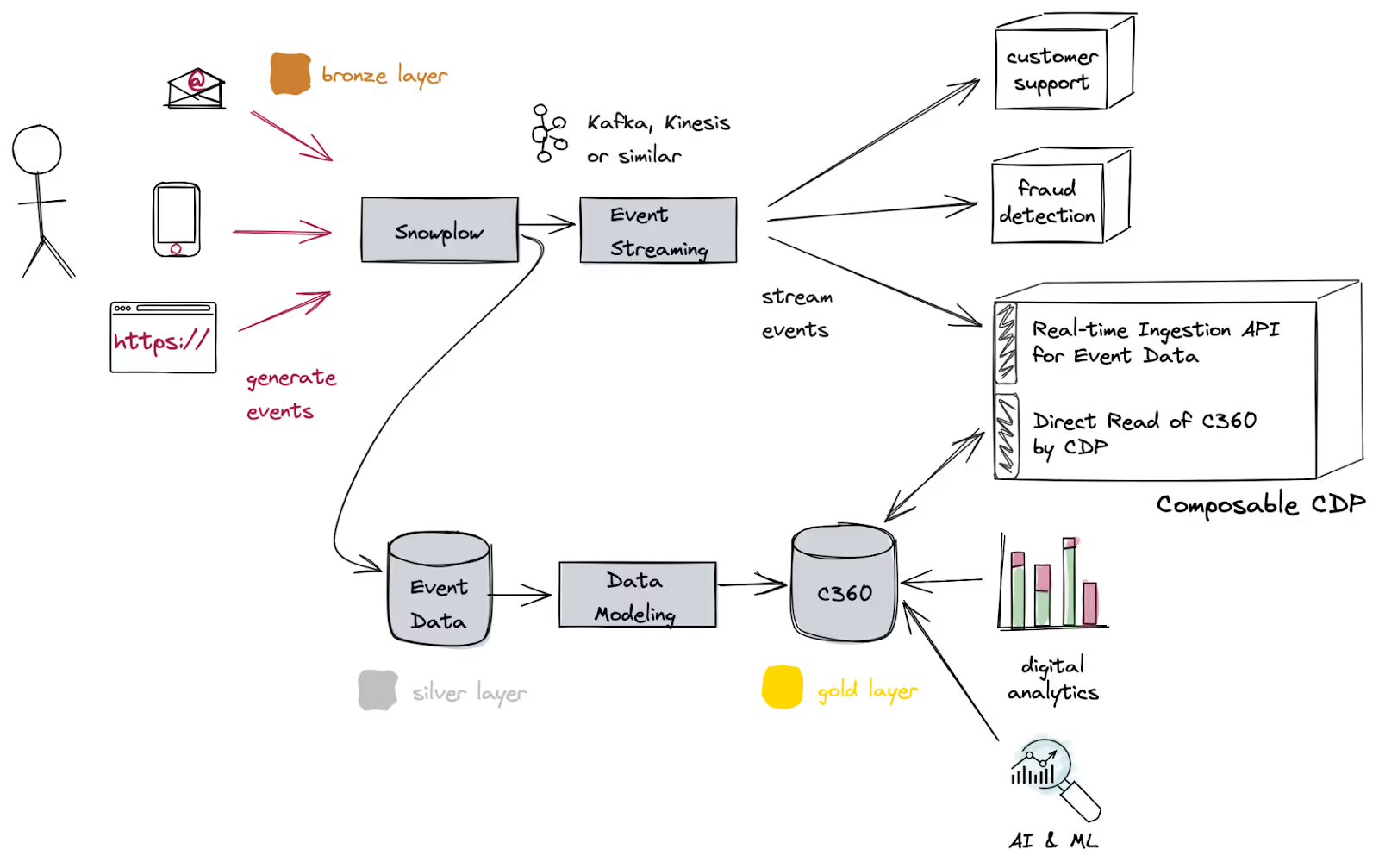

To focus on Snowplow's role - we act as an organization's Customer Data Infrastructure (CDI).

The CDI is the single source of event data, which is the first-party observation of your customers' behavior across your digital estate. This event data, sometimes called behavioral data (inclusive of both zero-party and first-party behavioral data), is the most high-fidelity and high-quality data that a brand can generate about its customers.

As such, it is a critical input into business decisioning and your AI strategy for real-time personalization.

Real-Time Personalization: Flexible Implementation Options

We provide our customers with full optionality on their approach to real-time data applications.

Powering the Lambda Architecture Approach

With a Lambda-style architecture in mind, where an organization leverages a traditional streaming solution, you have three options to consume our data feed and integrate it with downstream destinations:

- Leverage our suite of analytics SDKs: Allowing data engineers to use popular languages (e.g., Python, JavaScript, Scala) to parse Snowplow events and transform them from the native TSV format of our enriched stream to JSON. This tends to be a more popular format to manipulate and plug into downstream services, as the engineer sees fit.

- Use Snowbridge: Snowplow's real-time data replication tool that seamlessly propagates enriched behavioral data streams to third-party destinations, enabling low-latency data pipelines. Customers can route data to various targets including streaming solutions like Kafka with options to transform or filter data en route as required.

- Build a custom integration: Develop bespoke data pipelines and processing logic to handle the ingested data according to the customer's unique needs. Tools that could potentially be used here include Google Dataflow, Apache Flink, and Amazon Kinesis Data Analytics.

Powering the Unified Architecture Approach

If a customer is contemplating a unified approach, where customers choose to consolidate onto a data platform - we offer our Snowflake streaming loader, which writes to Snowflake using the Snowpipe streaming API and is supported across all clouds.

We have plans to introduce equivalent capabilities on Databricks.

The best choice will come down to the trade-off necessary when optimizing for either out-of-the-box functionality and time-to-value (i.e., Snowbridge) or latency (i.e., the analytics SDKs, custom integrations).

As it stands, and in response to market demand up until this point - with these options Snowplow currently supports use cases that:

- Require seconds-level latency (but not lower)

- Tolerate a degree of "eventual consistency"

- Permit some level of duplicate events, i.e., where high availability and low latency is prioritized over the complete data set of entirely unique events being surfaced in exactly the correct order

With our pedigree in analytics, powering machine learning models, and near real-time personalization and recommendation engines - this is the right trade-off to best serve our customers.

Empowering Real-Time Personalization: The Future of Customer Experience

The rapid evolution of data platforms and the emergence of generative AI models have unlocked new possibilities for delivering highly personalized and engaging customer experiences in real time. As organizations strive to delight their customers at every touchpoint, the ability to dynamically incorporate behavioral data into sophisticated customer profiles becomes crucial.

Snowplow's Customer Data Infrastructure (CDI) plays a pivotal role in this paradigm, serving as the sensory nerve that captures raw behavioral data and transforms it into actionable signals. By providing multiple integration options, Snowplow empowers its customers with the flexibility to choose the approach that best aligns with their requirements and existing infrastructure.

Companies like AutoTrader and Secret Escapes are already pioneering innovative data topologies that leverage Snowplow's CDI to deliver personalized customer experiences in real time. As the industry continues to evolve, Snowplow's Customer Data Infrastructure will remain a critical enabler, empowering organizations to unlock the full potential of their customer data and AI strategies to drive competitive advantage and business success.