Why data contracts are obviously a good idea

Data Contracts have been much discussed in the data community of late. Chad Sanderson posted on them in August 2022, after which debates on the merits of Data Contracts raged across social media, when Benn Stansil waded in September 2022, to concede that the problem they looked to solve was real but to argue that the approach was flawed.

Why all the fuss? In this post, I will argue that Data Contracts are:

- Very obviously a good idea (and one that should not be controversial)

- The reason this sensible idea is contentious today in the context of the Modern Data Stack, is that practitioners are stuck in the “data is oil” paradigm i.e. assume that the data is extracted, rather than deliberately creating data

Why Data Contracts are obviously a good idea

Let’s take it right back to the basics. In a modern organization there are many teams that consume data: to take just one (broad) example, data describing how your customer interacts with your digital products is going to be consumed, by:

- Your product team, who want to understand how those customers engage with different products and services, and how changes to those products and services drive changes to customer lifetime value

- Your customer team, so they can better understand and serve those customers

- Your marketing team, so they can more effectively target and market to customers based on their interests, product affinities and where they are in the purchase cycle

- Your commercial team, who want to understand revenue and profit per customer and customer segment

The data might not just be being used for analysis and understanding: it might be used directly to power production data applications that might, for example:

- Personalize the customer experience

- Triage support tickets based on customer value (so that high value customers get a better, faster service)

- Automatically generate audience segments (e.g. customers with a high propensity to churn) for targeting with particular offers, driving better, more relevant customer experience, and higher performance from those campaigns

- Identify customers who look like they might be committing fraud or victims of fraud, and take remedial action accordingly

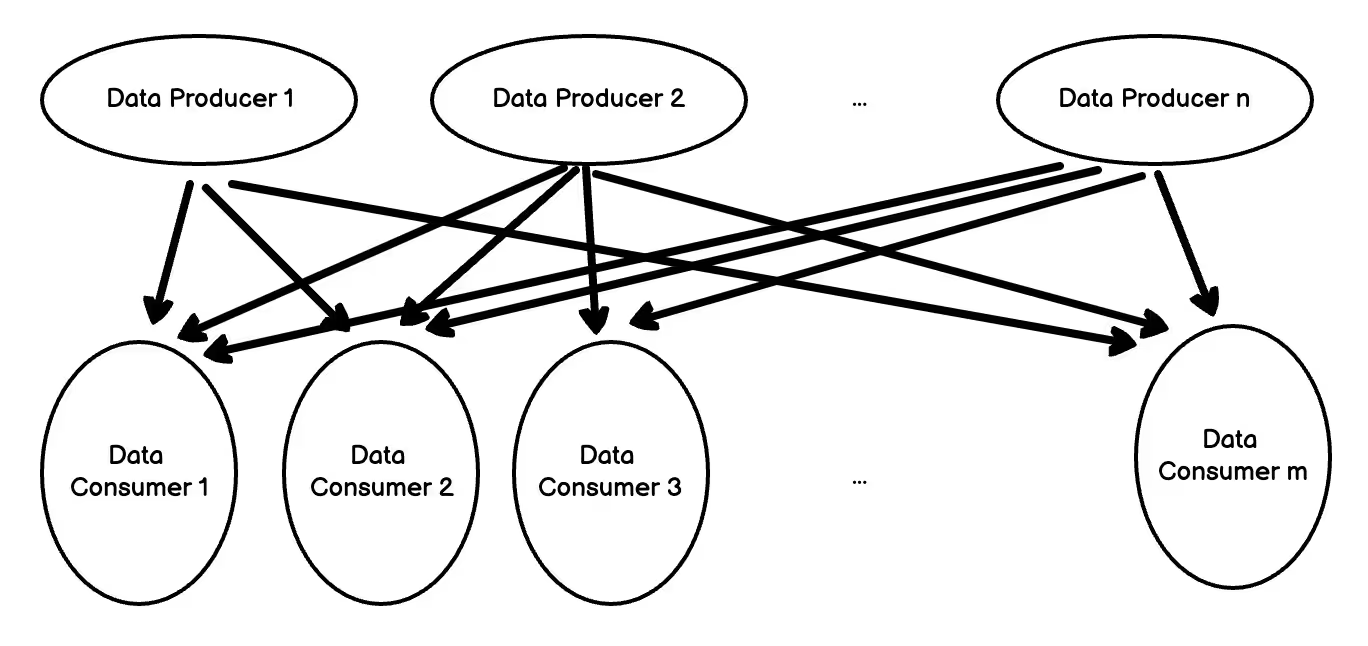

So we have a situation where the data produced by one team (the product team), is consumed by multiple teams. To make this more complicated, we have multiple teams producing data, all of which is consumed by multiple teams:

- Each product team / unit / squad is likely to produce its own data describing how users engage with that particular product or service

- Customer teams will generate their own data in their own CRM / loyalty / support systems

- Marketing teams will generate their own data in their own marketing tool that describe which campaigns and content have been served to who, and any subsequent engagement data (e.g. open and click through rates)

- Commercial teams are likely to generate their own data e.g. profitability models

The situation is a mess and the mess drives fragility. Any change to the data produced by an individual team has the potential to break multiple downstream reports and production applications owned by other teams. Worse, it is often completely opaque to a data producer which teams and applications are consuming the data they produce, and what assumptions and logic they have assumed is baked into the data. This means that even if they wanted to check with data consumers prior to making a change to the data produced they would not even know who to ask, nor have anyway of knowing what sorts of changes to the data are safe to make.

Data Contracts have been proposed as a straightforward solution to this challenge. Data producers commit to producing data that adheres to a specific contract, that typically defines at minimum, what schema the data conforms to, but can (and should) include other valuable information for teams that want to consume the data like:

- The semantics of the data i.e. what the rules are for setting properties to certain values. (We record transaction values in US dollars, we record a transaction when the payment gateway returns a successful response…)

- SLAs on the data (around accuracy, completeness, latency)

- Policy (what the data can and cannot be used for - this is particularly important for personal and health data)

- Information on who owns the data and how to go about requesting changes to it. (Data Contracts can be versioned so allow not just for the data to evolve over time with the needs of all teams, but provide a useful tool for enabling the controlled evolution of data in the business.)

Data consumers can review the contract, and then architect downstream applications that only make safe assumptions about the data i.e. assumptions that are explicitly captured in the contract. Then, multiple teams can build downstream data applications safe in the knowledge that the Data producing team will not make any changes to the data without first publishing a new version of the Data Contract. (Which should always be ahead of actually making any changes to the data produced.) Each data set (or “data product”) only needs a single Data Contract, and this contract can be used by all consumers of that data set.

So what is so objectionable about a data contract?

The most articulate argument against data contracts was made by Benn Stansil:

The way data contracts try to achieve this though—through a negotiated agreement—seems wrong on all fronts: It’s impractical to achieve, impossible to maintain, and—most damning of all—an undesirable outcome to chase…

It’s impractical to achieve because

A lot of data “providers” (e.g., software engineers who are maintaining an application database, and sales ops managers who configure Salesforce) can’t guarantee them anyway. If you change some bit of logic in Salesforce—if, for example, a team stops recording pricing information on the product object and starts recording it on the product attribute object—it’s hard to know how that change will be reflected in the underlying data model. Salesforce’s UI exists for exactly that reason—so that we don’t have to think about our entire CRM as a bunch of tables and an entity-relationship diagram. If administrators sign a contract to maintain a particular data structure, how can we expect them to hit what they can’t see?

It’s an undesirable outcome to chase because

We don’t want data providers to be worried about these contracts in the first place. Engineers, marketers, sales operations managers—all of these people have more important jobs to do than providing consistent data to a data team. They need to build great products, find new customers, and help sales teams sell that product to those customers. The data structures they create are in service of these goals. If those structures need to change to make a product better or to smooth over a kink in the sales cycle, they shouldn’t have to consult an analytics engineer first. In other words, data teams can’t expect to stop changes to products or Salesforce; we can only hope to contain them.

The key thing to realize is Benn’s exemplar for a data source is an export of Salesforce data into the data warehouse. He is 100% right that it is practically impossible to impose a data contract on data coming out of Salesforce. But he’s wrong that this means that data contracts are a bad idea. It is a bad idea to build production data applications (or important reports) on top of data extracted from Salesforce. Instead, you should be deliberately Creating Data to power your data applications, data that adheres to clearly defined and versioned data contracts.

What do you mean you should not build data applications on top of data extracted from Salesforce?

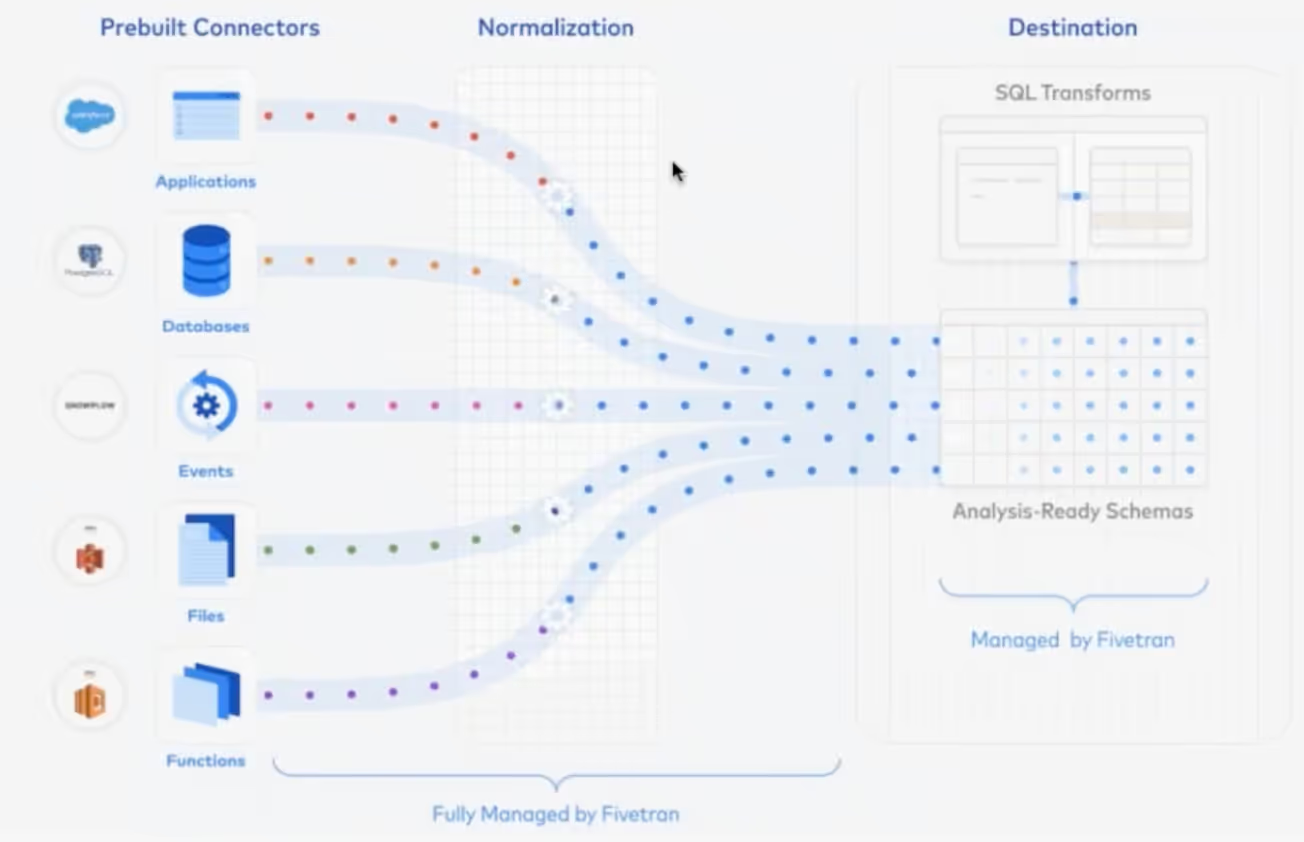

Fivetran, the vendor most commonly associated with ingesting data into the modern data stack, differentiate the following “sources” of data:

The Fivetran world view (shared by many in the Modern Data Stack) is that for any organization there is a finite set of sources (“data deposits” like “oil deposits”) where data can be extracted (like oil can be mined) and then loaded into the data warehouse (where later it will be refined into something valuable you can put in your car or power your ML application). The different icons on the left represent five different types of data source. Each type requires a different engineering approach, from Fivetran, to extracting and loading into the data warehouse. So this diagram is very useful if you’re a Fivetran engineer.

From the perspective of a particular organization or a particular team in the organization looking to get hold of data to power a data application, however, distinguishing potential sources of data in this way is not especially helpful. Taking a step back, data that an organization has resides in the applications it runs: typically in a database that is sat behind the application. If it is an application that the business is running itself, then it is likely to be possible to access the database behind a specific application directly, and replicate the data from there. (Using Fivetran potentially, or a Change Data Capture process, more generally.)

If instead the application is provided as SaaS, like Salesforce, then it will not be possible to access the database directly: instead the data in the database might be exposed via an API, that can be regularly polled for data. This fits the data as oil metaphor, with a regular process setup to ping the API, extract the data, and then copy it into a set of corresponding tables in the data warehouse. It is the same data, conceptually, whether it is accessed via a RESTful API or via SQL (or any other type of API). The difference is in the access pattern, that reflects who is responsible for running the application.

The trouble with both of the above technical approaches (extracting data directly from databases or via published APIs) is that the data made available is a side-effect: it has not been deliberately created for a specific data application or team, it is data exhaust. It would be very unsurprising if over time the way data was structured in the database would evolve quite dramatically to drive performance improvements (e.g. as the application scaled over a larger user-base) or as the application evolves functionally. Ben Stansill is right that it is undesirable to ask a the application developers to consider not just the performance of their own application when designing those database tables, but also the performance and integrity of any downstream data consumers that consume the replicated version of the database. The APIs that sit ontop of most SaaS solutions are typically either built to reflect the structure of the database in those underlying databases, or to power specific data integration use cases. (Rather than generalized downstream data intensive applications.) So we should not be surprised that the developers of those APIs do not design them around the needs of downstream data consumers. This is one reason why so much work is required to wrangle extracted data into something useful.

There is one type of data though that can provide a solid foundation for Creating Data optimized for downstream data consumers, data that can be governed by a contract. That data type is the third one listed in the Fivetran diagram, it is the humble “event”.

With “events” (i.e. Data Creation), it is straightforward to adopt data contracts

When we think of applications emitting “events” there are typically two types of application we think about:

- Web and mobile applications that typically emit events for analytics purposes. (By integrating SDKs from vendors like Google Analytics, Adobe, Snowplow, Segment or Mixpanel amongst others). These events are emitted as HTTP requests.

- Server-side systems that follow event-stream architectures. These are typically microservices that perform very specific functions, and communicate what they are doing to other microservices in the stack by emitting events into streams or topics rather than HTTP endpoints (e.g. in Kafka) and learn the current state of different entities that they care about by consuming the events emitted by other microservices in their ecosystem

In both cases, the application is deliberately creating data for use by other data consuming teams that are fundamentally decoupled from the teams responsible for the applications themselves: in the case of web and mobile product teams for the analytics team(s), and in the case of microservice applications for the other microservice application teams.

It is pretty common in both cases to adopt data contracts to support this effective exchange: Snowplow has supported data contracts since 2014 , with Segment adopting them with the introduction of Protocols in 2018 and Adobe with the release of the Adobe Experience Platform. In the case of microservices infrastructure the Confluent team published the first version of the Confluent Schema Registry back in 2014. There is scope to extend all these technologies to power more comprehensive data contracts: ones that go well beyond simply capturing the schema and some simple semantics. But the principle of a data contract has been there a long time because it is the only way to ensure data integrity, in the case of both digital analtyics applications and microservices architectures.

This model of publishing data as “events” for external consumers is really powerful:

- It means applications deliberately compose and emit data: the creation of data it not a side effect. As a result, it is really obvious to any developer working on an application that if she makes any changes to the code that emits that event that will have an impact on downstream consumers, because the only purpose of the code to emit the data is to serve downstream data consumers.

- The emission is light-weight. Whilst it is not desirable to ask an application developer to optimize the design of a database to serve a downstream data application, it is entirely reasonable to ask her to write some code to deliberately emit data that meets a specific specification (the data contract) - especially when supported by SDKs that abstract away technical complexity, so the developer can focus on emitting the right data at the right time. (As specified in the data contract.).

- There is already a well developed ecosystem of tooling for ensuring that the data emitted from applications as “events” is compliant with data contracts. The data contract can be used to derive the SDKs that are used to create the data, so that there is a causal link between the contract specification and the data emitted. Further, checks can be performed on the data as part of CICD and in production, to verify that the contract is not violated. (For example organizations running Snowplow use Snowplow Micro, a processing pipeline that is small enough to live on a container that is spun up as part of CICD, so that automated tests can be written to ensure that the right data is emitted at the right time with the right schema and semantics.) Today, it is easy for an application to integrate an SDK to emit events, debug the data emitted from a development environment, write automated tests to catch if the data violates the contract prior to the branch being merged to master and monitor the data in real-time to ensure it doesn’t deviate from the contract and alert on violations.

When we are emitting events (i.e. Creating Data) it is easy to adopt data contracts to ensure data integrity. But when we are relying on extract and load processes to poll 3rd party APIs it is hard. (You can’t control Salesforce, you can only hope to contain it.) So given data contracts are so important, can we adopt the “event” model as the primary approach data used in production data applications?

We can adopt “the event” (i.e. Data Creation) as the primary approach for delivering data to production data applications

For any application that we have developed ourselves, we have the possibility to integrate SDKs and deliberately create data that adheres to data contracts. This is always preferable extracting the data from the underlying database (e.g. using change data capture) or from data archived from the data (sat on a file system), because the data is not a side effect, it is deliberately created and the process of creation can be governed by a data contract.

But what if we want to power our data application with data that resides in a SaaS application? Here I would make two observations and one prediction:

- When you start developing a data application by considering what data you want (ideally), rather than what data you have (in different production systems), it is surprising how much of the data needs to deliberately created in your own applications. My favorite example is if you are building any sort of application that predicts the propensity for an individual to do something (like churn, upgrade, or buy a particular product) the time that an individual spends performing different related actions is nearly always the most predictive data: this is data that needs to be created in the applications in which the users perform those actions, and is never found in a CRM, that typically only stores much higher level (less predictive) facts about those same customers.

- More and more SaaS providers are deliberately emitting data in real-time in an “event-like” way: it is very common for them to support exposing data as a stream of real-time webhooks, and even give users the ability to specify the structure of the payloads emitted and the rules for when those payloads are emitted. (Zendesk is a good example.) Here, Salesforce is the notable exception.

- As more organizations start developing and running their own data-intensive applications in production, there is going to be real competitive pressure on SaaS vendors to provide their users with better tooling to create data from within them, the way that they can from their own applications. This has already started with webhooks, but needs to go further. (In terms of SaaS vendors publishing data contracts their webhooks conform to and providing functionality for users to customize those data contracts.) As every adherent to the Modern Data Stack knows, Martin Casado believes applications need to be reimagined so that they can be powered by intelligence built of an organization’s own data warehouse. I would argue that it is just as important that those reimagined applications enable the effective creation of data from the application directly into the data warehouse (including being governed by data contracts), as it is that they enable the flow of intelligence, built on that data, out of the data warehouse into the application.

Can we stop thinking of data sources based on the technical approach to moving the data (APIs, databases, events and files) and start distinguishing what matters (Data Creation vs Data Exhaust)?

I hope so but I fear not. But if you’ve made it this far I hope it’s clear why I believe this is the real distinction that matters to people who are building data applications. When data is created we can use data contracts to govern the process of creating and testing the data: and we then have a solid foundation for building downstream data processing applications. When we rely on data exhaust, we cannot.

Postscript: but we can enforce data contracts in CDC pipelines! (And Adrian and Chad have documented how do to this)

The day before I was due to hit publish on this post, Adrian Kreuziger authored a post on Chad Sanderson’s substack explaining how, from an engineering perspective, we can implement data contracts when relying on CDC for data ingest. This would counter my argument above, that data contracts only make sense when you’re relying on “event” data i.e. deliberately creating data, and undermine my argument that we should deliberately create data rather than rely on data exhaust.

In the setup Adrian describes, CDC is not being done directly on the tables in the database: instead an abstraction layer is introduced between the database and the place where the CDC events are generated. This provides a place where tests can be written that validate that the CDC events generated conform to the schema expected. Adrian describes how these tests can be run as part of CICD, failing a build where where a developer working on the application has accidentally made a change to the database that has caused a contract violation.

This achieves something really useful: it provides a way to catch releases that will break downstream data applications, and it provides those alerts to the data producing team, who are the right people to fix it. (If instead these tests were run in the data warehouse by an analytics engineer, the only possibility would be to add additional processing steps to take the data as ingested and try and wrangle it into the format as expected - which might work for schema changes but likely wont for semantic changes.)

But it is not nearly as good as the case where the data is deliberately created as an event emitted directly from the application code, because

- It is likely to be a complete surprise to the developer. Until the build fails chances are that data contracts were not top of mind. (Or even under consideration) Were the data emitted as an event this would be explicitly visible in the code (it’s not a side effect) - so it is much less likely that the test would fail in the first place.

- There is additional work required for the application developer because the process of ingesting the data is a side effect that would not be required if the process for creating the data was explicit. Specifically, the application developer is not free to make changes to the application that change the structure of the underlying database as this will break the data contract. This will inevitably have some impact on development velocity.

So maybe rather than a binary distinction between Data Creation and data exhaust, we should be thinking in terms of a more nuanced hierarchy, with:

- Data Creation (data as “events”) at the top, and data contracts driving both the creation of the data and the validation of the data

- Data extraction with data contracts enforced at source, next, so issues can at least be spotted and addressed at source, even if this generates more additional work for the application developers than data creation

- Data extraction with data contracts enforced in the warehouse, next, as better than complete chaos, but with the very significant challenge that the people who spot the issue (typically analytics engineers) have no context to understand the changes made, and are stuck with a limited toolset (SQL, dbt) to try and fix them after the fact

- Data extraction with no data contracts as a completely indefensible approach to application building

This article was originally published on the Data Creation Substack. The original article can be read here.