Designing a data model that reflects your user journey

In our last chapter, we looked at the growing prominence of data modeling and why it’s an important part of the modern data stack. This time, we’ll explore practical ways to design data models to serve internal teams effectively.

How should I structure my data models?

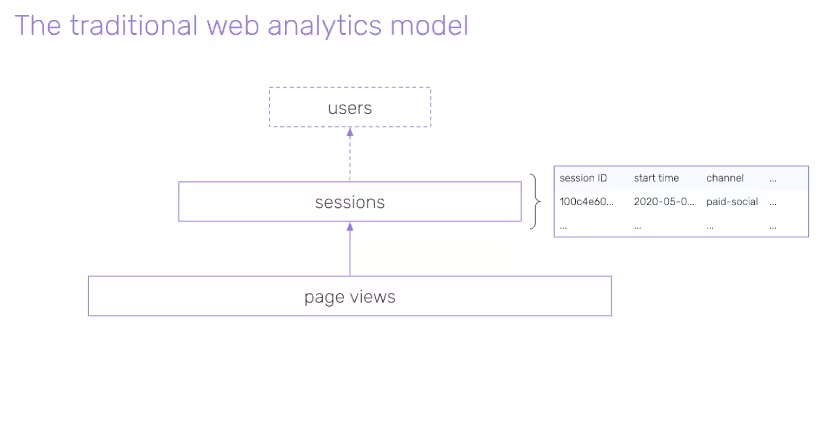

Before we answer that question, it’s worth looking back at the traditional web analytics model we see most often used in analytics. This model is most commonly used by analytics teams investigating user behavior on the web.

In this example, we can see the model broken down into three tables: page views, sessions and users. Page views are aggregated into sessions, with one row of data per session, and we might also have a table for users, provided we have reliable user identifiers over time.

This model, however, presents a few key limitations:

- Sessions themselves are not always relevant for your business model or use cases

- What does a session mean on mobile where engagement is often different to web interactions?

- How are sessions measured in a reliable way for businesses with multiple How are sessions measured in a reliable way for businesses with multiple target users/customers with different user journeys (i.e buyers and sellers on two-sided marketplaces)?

- Page views are not always the smallest unit of interaction – what about searches or video engagement?

- Without SQL skills, how can you explore this data at a more granular level?

- Different teams in the organization can’t all easily derive value from data in this format.

It’s worth keeping these limitations in mind, but it’s not all bad news. The underlying structure is still valuable – there’s something going on here that we can work with.

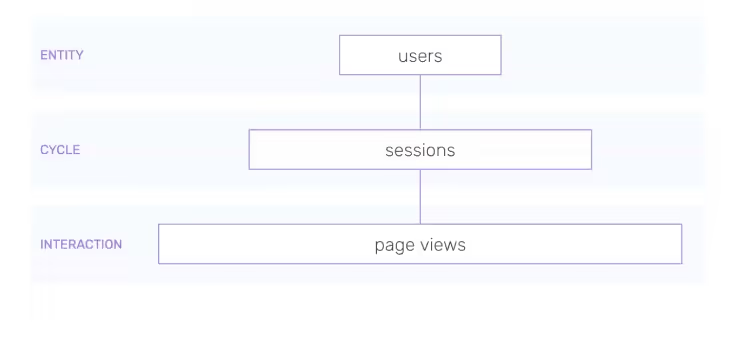

Another way to consider this traditional model is the type of dimensions each table is capturing. Page views could be seen as an ‘interaction’ – a way the user is engaging with the platform. A session, with interactions over time, could be classed as a ‘cycle’, and at the highest level, a user can be classed as an ‘entity’ – an agent or object involved with a level of interaction.

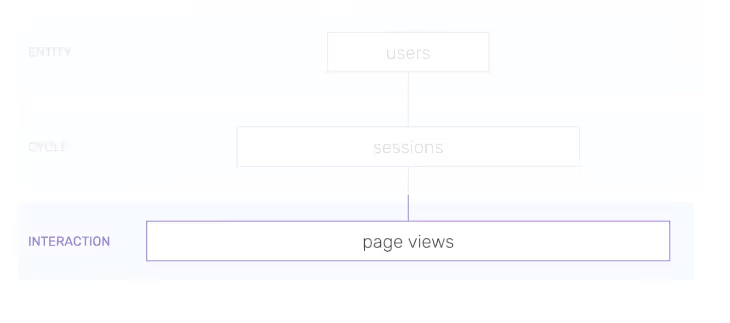

Using this framework, we can expand our structure to accommodate other use cases and platforms. Let’s look at the levels in isolation.

The interactions level

At the interactions level, we have one row per interaction. If we take page views as an example interaction, we can break down the information captured here as follows:

- Dimensions related to the interaction:

- Referrer, timestamps

- Dimensions related to the cycle:

- Session ID, session index

- Funnel, funnel step

- Dimensions related to the entities::

- Page title, url,

- User ID, location

- Interaction metrics: Time spent on page, scroll depth,

But page views are just one example of an interaction event. Other examples might include:

The type of interactions you include depends heavily on your specific business model.

The cycle level

At this level, we have one row per cycle. If we take sessions as an example cycle, the information could be broken down into:

- Dimensions related to the cycle:

- Session ID, session index,

- Dimensions related to the entities:

- User ID, location

- Cycle metrics:

- Page views, conversions

Cycles are essentially an aggregation of interactions over a period of time. Other examples of ‘cycles’ might include:

- Course completions

- Signup funnel

- Content discoveries

- Sessions

- Game level competitions

Once again, the types of cyclical information you capture depends heavily on your business and the logic you want to apply.

The entity level

The entity level contains one row per entity. If we take users as our example here, the information captured could be broken down into:

- Dimensions related to the entity:

- User ID, location

- Entity metrics:

- Sessions, page views

Other examples of entities could be:

- Products

- Articles

- Videos

- Campaigns

- Apps

- Data pipelines (if you’re capturing data about multiple Snowplow pipelines!)

This framework gives us a great foundation for designing a data model from first principles. But there are other technical aspects to consider when designing your data models:

- Who is consuming this data and what are they interested in?

- This will indicate what entities you need to think about

- What is the use case for this data?

- This will dictate how aggregated the tables need to be (whether at a granular level or high level)

- How will this data be consumed?

- This will inform how the tables need to be structured

Ultimately, your business model and the type of users, interactions and your unique customer journey will determine how your data models will be designed.

Data models in practice: examples in Ecommerce and subscription businesses

It’s worth looking at a few examples of data models in different teams and verticals to get an idea of how you can model data for specific consumers.

First, we’ll look at an example Ecommerce company (i.e Target), modeling data for the:

Second, we’ll consider an example for a Subscription (i.e Netflix), modelling data for the:

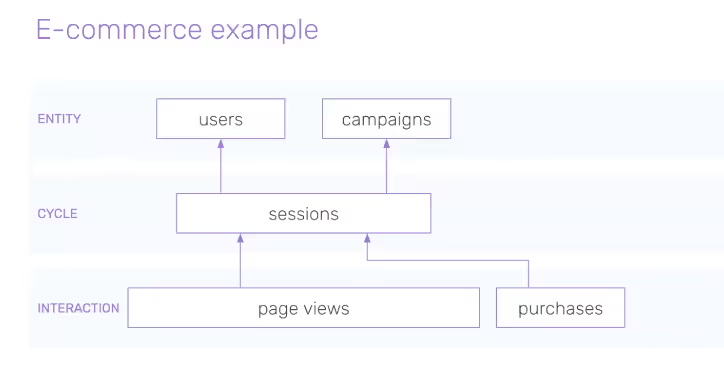

Ecommerce: Marketing want to understand how to optimize their marketing budget

Let’s consider a Marketing team within an ecommerce company. They might want to understand how their efforts are driving revenue. Specifically, they may ask:

– What marketing channels lead to the sessions with the most dollars of product purchased?

– What marketing channels or campaigns are best at winning repeat customers?

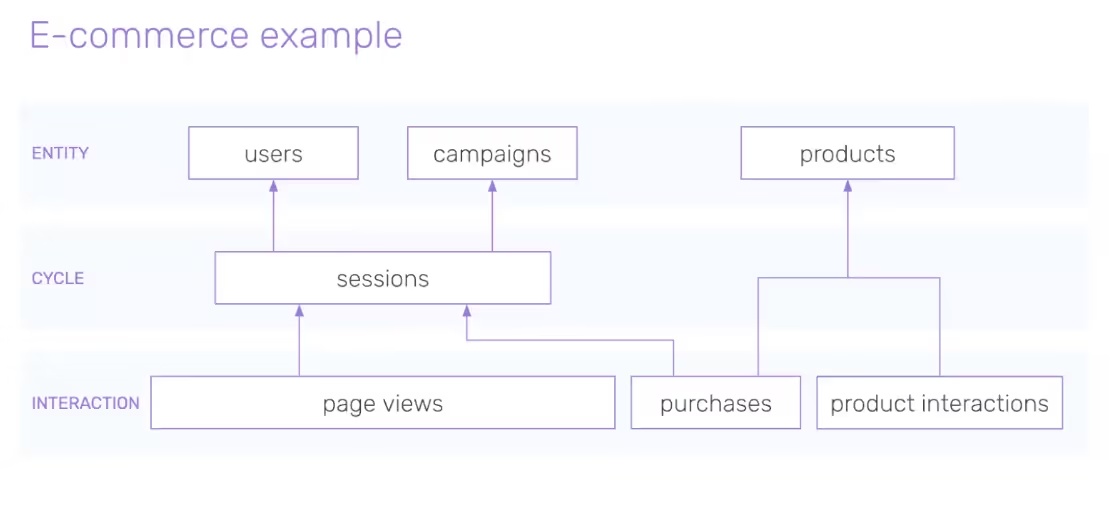

Core to this particular example is sessions. We want to know which sessions are bringing the most dollars. Also the page views part will be interesting to tell us where people came from. Therefore we’ll need one row per page view and to aggregate these up to the sessions level.

We also need purchases because they’re going to tell us about which sessions are most lucrative. These will tell us which products were purchased, the total purchase value of each transaction and so on.

This would already be really useful to show how much revenue is being produced from each individual channel, but you might want to go a step further and look at one row per campaign, showing the total number of sessions per campaign, total revenue produced per campaign, how many purchases, and so on.

Then there’s user lifetime value. So in that case it might be useful to aggregate on the user level. To see how many purchases they’ve made over their lifetime and total purchase value per user and how this correlates to each campaign (are there any patterns there? Are certain campaigns driving certain users to purchase more?).

But it’s not just the Marketing team who use data to make critical decisions. Let’s next imagine the inventory team come to the table with their own questions, such as:

– What products are most popular? What new stock should they buy?

– How is product performance related to positioning and pricing on the website? How can they sell their existing stock effectively?

These are quite different questions to the questions from the marketing team. Therefore we need to add information to our existing model.

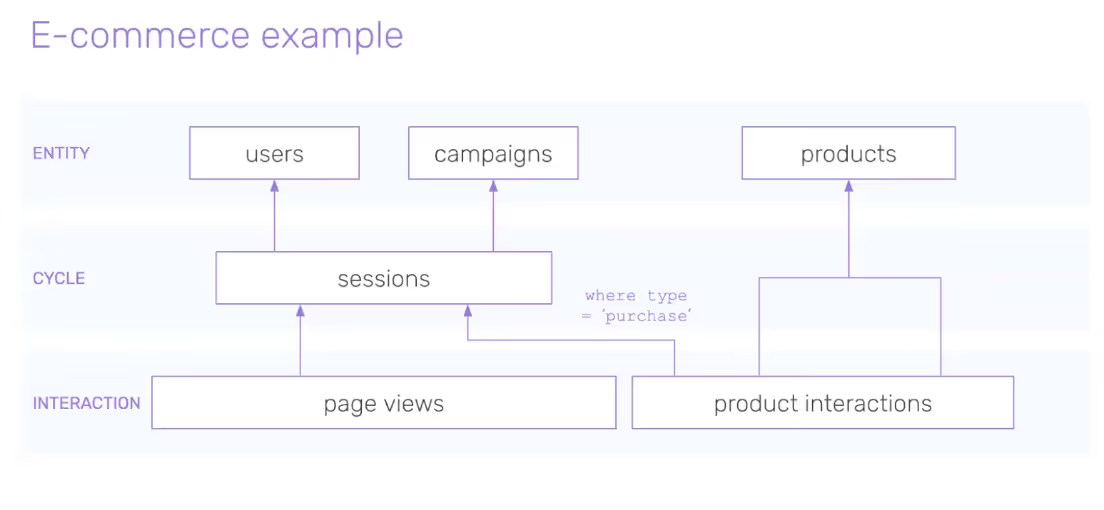

Just having purchases and sessions isn’t enough. We don’t just want to know when they purchased a product, we also want to know when they clicked for a product detail view, when they added a product to cart, removed it from cart – all the micro interactions that describe their behavior in granular detail.

We might also want to aggregate all product interactions and purchase information into a table with one row per product, that shows us how often a product was looked at and interacted with prior to purchase.

Side by side, these data models might look very different, with little overlap. At this point a data team could decide to build a comprehensive ‘product interactions’ table, but when it comes to sending product interactions into the sessions table, it could be a good idea to include a ‘purchases’ filter to isolate purchase actions for the marketing team.

Over time, the marketing team might grow their interest in granular product interactions, which can be easily included in their reports.

Subscription business: The product team want to better understand how to reduce churn

Now let’s imagine a Product team within a subscription company. They want to understand how to increase retention and reduce churn. They might ask questions such as:

– Which activities drive user engagement?

– What features cause friction in the user experience?

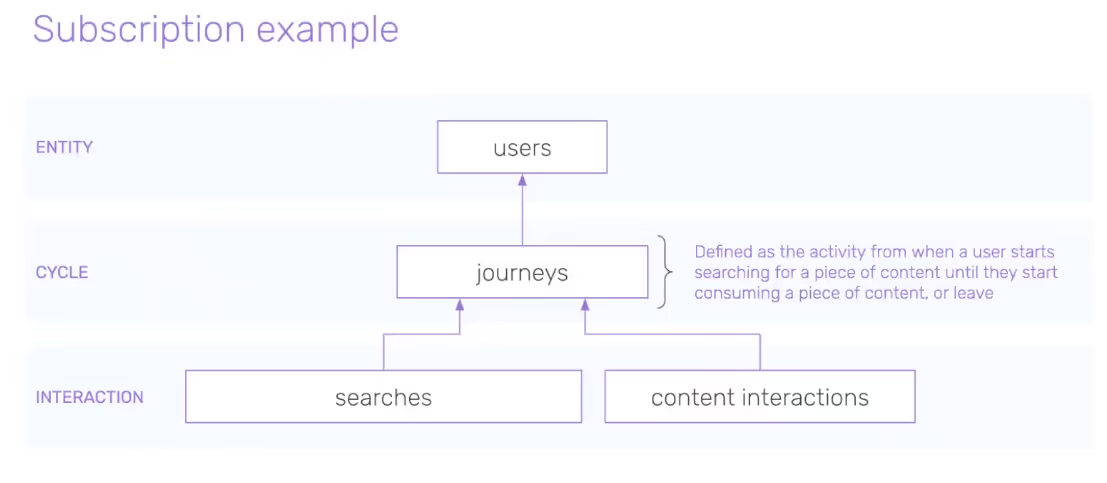

In these examples, we’re less interested in page views and more in searches to see user intent. What are they searching for and how often are they searching with similar terms; this is a good indicator of how well your search function is performing, and how valuable the users find it.

Then there are content interactions. With a Subscription business like Netflix, perhaps the user is previewing content or clicking the title to see more details.

We can aggregate these interactions into journeys (defined as the activity from the time they start searching until they start consuming or leave). Let’s assume for now that content views or plays are included in the interactions table.

Then we can aggregate up into the user level to see, for example, if a user who has had more searches per journey would be more likely to churn, or is there a correlation between number of content interactions and retention rate? We could look at these questions over metrics such as number of searches, number of journeys, number of content interactions but also average number of searches per journey user, or average number of videos per journey, etc.

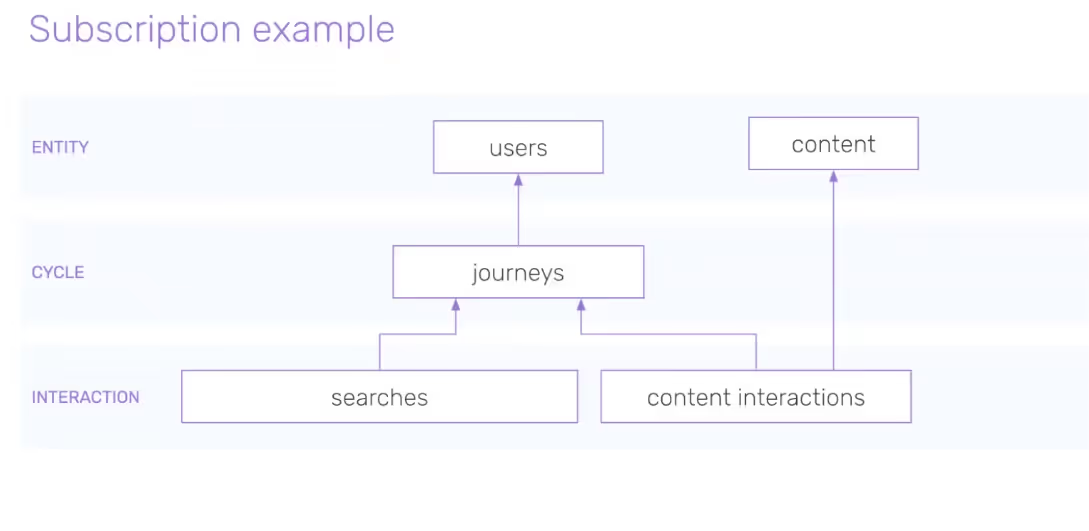

But what if the Content team then want to better understand which content is most popular:

– What content do people engage with multiple times?

– What content do people exit prematurely?

With one row per content item, an aggregated content interactions table would make it easier for the content team to see which content has received the most engagement (i.e views, play/pause clicks). Aggregating the same interaction-level data to a different entity increases the data’s value by making it relevant for another team.

As you can see from these examples, different businesses require different data models to meet their needs. To gain the most value from your data, you need to model it in a way that makes sense for your organization (and the various teams within it).

How can I build my data models in practice?

We’ve discussed how to design data models conceptually, but how about architecting them practically?

When building the data models in your data warehouse, there are some technical factors to consider:

- Dataset size:

- If your dataset is very large, think about using incremental models; they’ll allow you to deliver your data faster.

- Data warehouse:

- Columnar or row based storage? (most are columnar)

- Billing based on data scanned or query run time, or fixed costs?

- BI or visualization tool

- Does it run queries directly in the data warehouse?

- Or does it import data from the data warehouse?

- This will affect how you’ll want to build the tables and how aggregated they need to be

- Final consumer

- What’s the end use case, will the data go into a report or a ML model?

- Does the data need to run in a real-time engine?

These factors will determine whether you want the data to be normalized or denormalized. Let’s explain with an example.

Modeling data for a gaming company

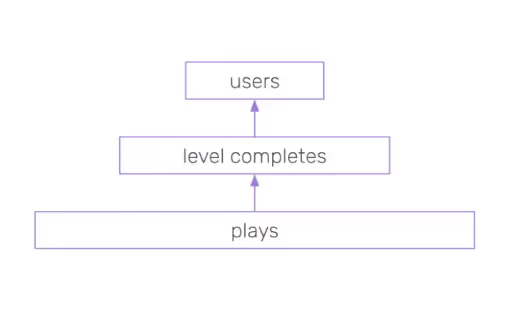

Let’s say you’re a gaming company modeling data to show plays, level completes and users. You’ll eventually land with a model that will show total number of plays by users, total number completed levels, and so on.

There are a few ways to build these tables in a data warehouse.

You could build large, flat tables made up of user-level dimensions and metrics, a table for level completes information, and one with metrics around plays. All the information is within each table directly and no joining is required.

The opposite way to structure your tables is to split out information in a way that includes user dimensions and level dimensions in various metrics tables. For example, user dimensions will be included in the user metrics table, level metrics table and plays metrics table – with a join key for user ID and for level ID. In this structural example, you can avoid data duplications, but you will have to perform joins when you execute a query.

This structure is not necessarily optimal for every situation. If this dataset is going to be consumed in multiple places, you would have to perform multiple joins which can both increase costs and hinder performance. In this case, you might prefer to stick to a flat table structure.

On the flip side, having large, flat tables can take up a lot of space in the data warehouse, which may not be optimal from a storage perspective and can also increase costs.

One caveat with data modeling, especially with flexible platforms like Snowplow and dbt, is that it can be tempting to build large, complex data models for multiple use cases at once, only to find months later that the data from those models are not being used.

These models, especially in a warehouse like Snowflake or Bigquery can lead to significant query costs for data that isn’t being used on a regular basis. It’s taking the time to understand the requests your stakeholders want to answer and prioritizing the most important projects, perhaps starting only with the most vital business questions first.

The most important thing to remember is that building data models is about making data available to the people who need them, in a way that provides value.

When it comes to data modeling, your end goal is always to deliver value to your end users. Even the most elegant data models are worthless if the data consumer doesn’t use them to drive success for the organization.

Build your data models in a way that encourages reusability and doesn’t result in data models being left stagnant or ‘dead dashboards’.

In our next chapter, we’ll be exploring data modeling on a practical level, building data models from Snowplow data in dbt.

This is a four-part series

Click below to navigate to the next chapter: