Announcing Snowplow Lake Loader

New data destinations on Azure and beyond

Back in August, we announced the first step to running Snowplow on Azure with our Quick Start guide for Open Source. For the first time, this enabled users to set up a full Snowplow pipeline within their Azure account and start collecting rich behavioral data and loading it into Snowflake.

Today we are introducing a set of additional destinations to build upon our continued support for Microsoft Azure:

- Databricks

- Azure Synapse Analytics

- Azure Fabric and OneLake

All of these destinations are supported by the same loader application: the new Snowplow Lake Loader (patent pending).

What is Snowplow Lake Loader?

Unlike most of our loaders, which deliver the data directly into a data warehouse, the Lake Loader streams data to… a data lake (okay, you probably guessed that).

The key to this approach is using an Open Table Format such as Delta. This way, you get the best of both worlds:

- Cost-effective storage in a data lake

- Efficient queries from any compatible data warehouse

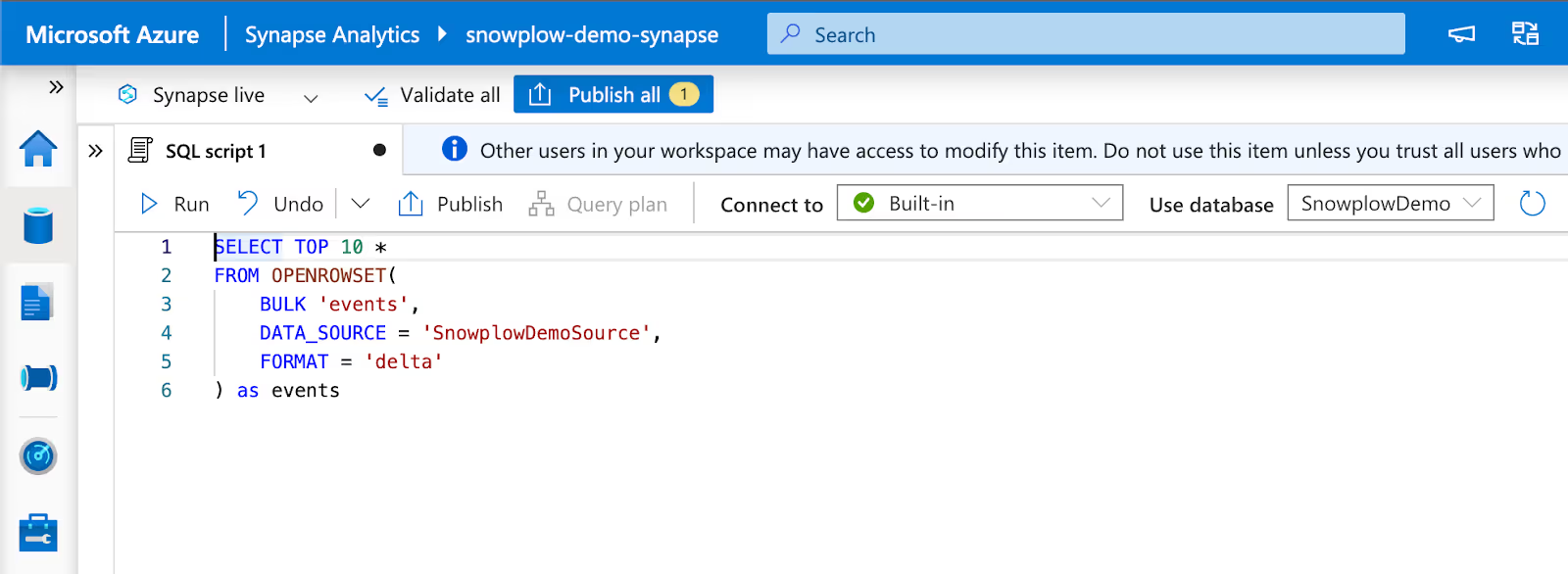

For example, Databricks, Azure Synapse Analytics and Azure Fabric Lakehouse can all work with Snowplow data residing in Azure Data Lake Storage in Delta format.

As with other Snowplow loaders, the Lake Loader automatically manages schema changes as you design and evolve your custom events. This ensures that the data in the lake is always well structured.

Running Snowplow Lake Loader on Azure

We’ve updated our Open Source quick start guide, so setting up the Lake Loader is a breeze. We’ve also added instructions on where to find your data in the data lake and how to query it with each analytics solution.

You can also read about the lake loader itself, its configuration settings, and more in our documentation.

What else can Snowplow Lake Loader do?

Our vision is for the Lake Loader to support a variety of clouds (Azure, AWS, GCP) and open table formats (Delta, Apache Iceberg, Apache Hudi). This will both enable new destinations for Snowplow data (e.g. ClickHouse, via S3 and Apache Iceberg), and provide alternative ways to load into existing destinations (e.g. Snowflake, Databricks, BigQuery).

Currently, we already support a few of these combinations:

- Azure + Delta — compatible with Synapse Analytics, Databricks, etc

- GCP + Delta — compatible with Databricks

The Lake Loader is still in its early version, although we have been running it with some of our largest customers as part of the private feature preview for Snowplow BDP Enterprise.

As with all our releases, we encourage you to share your thoughts and feedback with us on Discourse to help shape future development.

What’s next?

In the coming months, we’ll be announcing more destinations and clouds supported by the Lake Loader, including new Quick Start guides.

As for Azure support, stay tuned for a compatible release of our BDP Enterprise offering — you can join our waiting list here.