Limitations of Google Analytics 4 and how to solve them with Snowplow

Digital analytics is at the heart of every decision a marketing team makes, from attributing marketing spend effectively to understanding and improving the customer journey.

Google Analytics ("GA") has long been the industry standard for digital analytics, helping teams answer increasingly complex questions about their customers' behavior ever since it brought self-serve analytics to the forefront in 2005.

On July 1, 2023, the widely publicized sunsetting of Universal Analytics ("UA") took place. With UA 360 properties following suit on July 1, 2024, marketing and analytics practitioners have been forced either to transition to Google Analytics 4 (“GA4”) or to migrate to alternative solutions.

Back in 2020, Google promised GA4 would deliver a more complete understanding of how customers interact with your business and smarter insights, and would help brands respond to rising consumer expectations, regulatory developments and changing technology standards.

For many data and marketing teams, however, GA4 represents a series of broken promises: it limits not only what can be achieved from an analytics perspective but also how event data can be used for other projects.

Marketing and data teams are facing:

- An increasingly inaccurate view of user behavior, which undermines business decisions and marketing spend;

- A growing risk of GDPR infringements and other compliance challenges, leaving teams unable to truly future-proof their data stack;

- Limitations on how data can be used outside the Google Analytics UI, due to data latency, nested table structures and a lack of data quality controls;

- A higher cost of ownership compared with other solutions.

SEOs: What are your thoughts about Google Analytics 4 (GA4) #ga4 #googleanalytics

— Barry Schwartz (@rustybrick) August 9, 2023

Wherever you are in your analytics journey, whether you are migrating to Google Analytics 4 or looking for alternative solutions, we break down the top 3 challenges you will face and how to solve them:

- Limited view of customer behavior;

- Ongoing compliance battle; control and data ownership;

- GA4 data is not built for the data warehouse.

Limited view of customer behavior

Browsers including Safari and Firefox have increasingly limited a website's ability to track users. Safari’s Intelligent Tracking Prevention (ITP) and Firefox’s Enhanced Tracking Protection (ETP) both restrict third-party cookies and other browser storage methods (e.g. localStorage), limiting how long a user can be identified with third-party cookies and client-set first-party cookies. Taking Safari as an example, third-party cookies are blocked by default, while first-party cookies set client-side through JavaScript are capped at 7 days (or 24 hours in some cases).

GA relies on first-party cookies set on the device through JavaScript to track user behavior, which makes it vulnerable to ad-blockers and to these browser restrictions.

Missing or duplicated users and sessions give an inaccurate representation of user behavior, undermining decision-making and the attribution of marketing spend.
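To make the duplication effect concrete, here is a minimal sketch under a deliberately simplified model: we assume the tracker rewrites its cookie on every visit, so expiry rolls forward from the last visit, and we ignore ad-blockers, manual cookie clearing and multiple devices. The visit days are made up, and the 730-day lifetime is only an approximation of the "up to 2 years" figure.

```python
from datetime import timedelta

# Simplified model (assumption): the cookie is rewritten on every visit, so it
# expires a fixed time after the most recent visit.
CLIENT_SET_CAP = timedelta(days=7)          # Safari ITP cap on JS-set cookies
SERVER_SET_LIFETIME = timedelta(days=730)   # roughly 2 years


def count_apparent_users(visit_days, lifetime):
    """Return how many distinct user IDs a single visitor would be assigned."""
    ids = 0
    last_visit = None
    for day in sorted(visit_days):
        now = timedelta(days=day)
        # A new ID is minted on the first visit or once the cookie has expired.
        if last_visit is None or now - last_visit > lifetime:
            ids += 1
        last_visit = now
    return ids


visits = [0, 3, 12, 14, 30]  # hypothetical days on which one person visits
print(count_apparent_users(visits, CLIENT_SET_CAP))       # 3
print(count_apparent_users(visits, SERVER_SET_LIFETIME))  # 1
```

Under the 7-day cap, every gap longer than a week (days 3 to 12 and days 14 to 30) mints a fresh identifier, so one person is counted as three users, each with a truncated journey.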

With Safari alone accounting for a quarter of global browser market share according to Similarweb, reliance on GA tracking could be significantly impacting your marketing team’s return on investment (ROI) and decision-making.

How does Snowplow approach user tracking?

Snowplow offers 35+ trackers and webhooks to track events from across your digital platforms, including a JavaScript tracker for web as well as trackers for Android, iOS and many more.

Snowplow’s JavaScript tracker utilizes first-party server-set cookies, which are generated by the server and sent to the device; this allows us to profile users for up to 2 years. The tracker also uses two cookies: _sp_id, which stores a unique identifier for each user along with the current session, the number of visits and other information, and _sp_ses, which tracks session-based activity (see our documentation for full details).

Snowplow has also introduced support for an ‘ID Service’ in its web tracker, which generates a unique browser identifier to strengthen Snowplow's tracking capabilities where Intelligent Tracking Prevention (ITP) is enabled.

With this expanded view of the customer journey, you gain a full understanding of your marketing funnel from start to finish and of which customer segments and channels are driving conversions. With this complete visibility, marketing teams can optimize budgets by focusing on the channels and campaigns that drive the most return.

Brands like Digital Virgo have migrated from GA to Snowplow to build a more complete and accurate view that powers reporting across 40 countries. Digital Virgo uses PHP and JavaScript trackers to build a fuller picture of each user’s journey through their session, which had previously not been possible due to technology and cost constraints.

Ongoing Data Compliance battle

GA has a troubled recent history regarding its GDPR compliance status within the EU. The compliance concerns around GA4 stem from the collection of personal data and its processing outside the EU.

GA4 introduced a series of privacy measures and features to try to resolve this, including data retention settings, data collection via EU-based servers, consent mode and no longer collecting IP addresses, among others.

Despite these efforts, GA has come under increasing scrutiny from Data Protection Authorities (DPAs) across the EU, as Google Analytics still collects personal data (unique user identifiers) and processes it outside the EU.

Here’s a quick breakdown of what has happened since 2020:

- In July 2020, in the Schrems II case brought by Max Schrems and his non-profit NOYB (None of Your Business), the European Court of Justice (CJEU) ruled that transferring personal data from the EU to the US on the basis of the Privacy Shield decision was illegal. Prior to this, companies could transfer data to the US under the EU-US Privacy Shield;

- In August 2020, NOYB filed complaints against 101 European companies in 30 EU and EEA member states, alleging that, in light of the Schrems II ruling, these companies were violating GDPR by transferring personal data (cookies and IP addresses) to the United States through their use of Google Analytics;

- Since then, numerous Data Protection Authorities (DPAs) across the EU have taken action against Google Analytics:

- January 2022: Austrian Data Protection Authority: “The use of Google Analytics violates GDPR”;

- February 2022: French Data Protection Authority CNIL: The EU-US data transfer to Google Analytics is illegal;

- June 2022: Italian Data Protection Authority Garante: “Unlawfulness of data transfers to the USA”;

- September 2022: Danish Data Protection Authority Datatilsynet: Sites must stop using Google Analytics;

- March 2023: Norwegian Data Protection Authority Datatilsynet: “Google Analytics 4 does not correct the problems we have identified”;

- July 2023: Swedish Authority for Privacy Protection (IMY) imposed fines of SEK 12 million (approximately $1.1 million) and ordered three companies to stop using Google Analytics;

- In July 2023, despite opposition from members of the European Parliament, the European Commission approved the EU-U.S. Data Privacy Framework, a new attempt to bring legal certainty to the transfer of personal data from the EU to US companies without extra safeguards. The framework is widely expected to be challenged by NOYB.

This ongoing battle creates real uncertainty for data teams and the wider business for a number of reasons:

- GDPR infringements can incur fines of up to €20 million or 4% of the business’s total annual worldwide turnover, whichever is higher;

- Teams using Google Analytics cannot truly future-proof their data stack while future compliance challenges around handling EU data remain uncertain;

- If future rulings go against Google Analytics, teams will be forced to migrate to an alternative solution.

How does Snowplow approach compliance?

Given this continued uncertainty, having full ownership of your data, and control over what data is processed and where, is key to future-proofing your data and analytics strategy.

- At Snowplow we have always championed a Private SaaS deployment model, in which Snowplow is deployed in your own private cloud in your jurisdiction of choice. Your data is collected, enriched and modelled in your own cloud, providing full ownership and control over your customer data. You can find out more about the differences between private and public SaaS here;

- Snowplow helps you respect customer privacy with out-of-the-box, real-time PII pseudonymization, as well as cookieless and anonymous tracking, giving both you and your customers complete control over what is collected;

- With Snowplow, you can set up separate data pipelines for different regions. Then, if one jurisdiction changes their data privacy rules, you can adapt the security protocols for that region’s pipeline—without affecting data stored in other locations;

- Snowplow’s ‘basis for tracking’ contexts give full transparency into customer consent: you can record a full GDPR (or other regulatory framework) context with every event and access a complete consent status for every user in a single schema table.
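The pseudonymization and consent bullets above can be illustrated together with a minimal sketch. It is not Snowplow code: Snowplow's PII pseudonymization runs as an enrichment inside the pipeline, while here we swap in HMAC-SHA-256 from Python's standard library as a stand-in keyed hash. The schema URI and the `basisForProcessing`, `documentId` and `documentVersion` field names reflect our understanding of Snowplow's GDPR context and should be checked against Iglu Central. The `SALT` value, the `contexts` list on a plain dict (a simplification of how trackers attach entities) and the `user_id`/`page_url` fields are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; a real deployment would keep it out of source control.
SALT = b"replace-with-a-secret-salt"

# Assumed to mirror Snowplow's GDPR context schema; verify against Iglu Central.
GDPR_SCHEMA = "iglu:com.snowplowanalytics.snowplow/gdpr/jsonschema/1-0-0"
LAWFUL_BASES = {
    "consent", "contract", "legal_obligation",
    "vital_interests", "public_task", "legitimate_interests",
}


def pseudonymize(value: str, salt: bytes = SALT) -> str:
    """Replace a PII value with a keyed SHA-256 digest (64 hex characters)."""
    return hmac.new(salt, value.encode("utf-8"), hashlib.sha256).hexdigest()


def gdpr_context(basis: str, document_id=None, document_version=None) -> dict:
    """Build a self-describing JSON recording the lawful basis for processing."""
    if basis not in LAWFUL_BASES:
        raise ValueError(f"unknown basis for processing: {basis!r}")
    data = {"basisForProcessing": basis}
    if document_id is not None:
        data["documentId"] = document_id
    if document_version is not None:
        data["documentVersion"] = document_version
    return {"schema": GDPR_SCHEMA, "data": data}


def prepare_event(event: dict, pii_fields: set, basis: str, **doc) -> dict:
    """Pseudonymize PII fields and attach a consent context to a copy of the event."""
    cleaned = {
        key: pseudonymize(str(val)) if key in pii_fields and val is not None else val
        for key, val in event.items()
    }
    cleaned["contexts"] = list(event.get("contexts", [])) + [gdpr_context(basis, **doc)]
    return cleaned


event = {"user_id": "jane@example.com", "page_url": "https://example.com/pricing"}
prepared = prepare_event(event, {"user_id"}, "consent",
                         document_id="privacy-policy", document_version="2.1")
```

Because the same input and key always produce the same digest, pseudonymized identifiers can still be joined across events without exposing the raw value.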

How Trinny London used Snowplow to take ownership over their data

Snowplow provides Trinny London with first-party data and full pipeline ownership as it is deployed within its Google Cloud Platform (“GCP”).

Snowplow’s solution also enables Trinny London to create its own datasets, rather than relying on pre-built data or data extracted from solutions such as GA. This gives Trinny London’s data team full visibility into their data and control over what is tracked, which is critical for GDPR compliance.

GA4 data is not built for the data warehouse

As marketing and data teams advance their insights and use behavioral data to build additional data products, such as real-time churn prediction and recommendation engines, they need to go beyond the Google Analytics UI and export data to Google BigQuery.

Working with GA4 data ties you into using BigQuery as opposed to your warehouse or lake of choice. When exporting data from Google Analytics to BigQuery, teams face a challenging dataset, as GA data was never truly designed to be warehouse-first. The result is more time spent preparing data, a higher cost of ownership and increased data latency. Below we break down the most common challenges.

Data Readiness

GA4 exports to BigQuery are heavily nested (multiple key-value pairs per event), requiring dozens of lines of SQL to answer basic questions. There is also a lack of official, maintained models for GA4 data once it lands in BigQuery, so building incremental logic and models on top of it to a production-ready standard takes engineering skill and resources.
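In SQL, this nesting is typically handled with `UNNEST(event_params)` and one subquery per parameter. The minimal Python sketch below applies the same reshaping to a single exported row held as a dict, to show what each of those subqueries is doing. The `event_params`, `key` and `value.*_value` names follow the GA4 BigQuery export schema; the row itself is made-up sample data, and we assume parameter keys never clash with top-level column names.

```python
from typing import Any, Optional

# In the GA4 BigQuery export, custom parameters live in the repeated
# `event_params` field; each entry has a `key` and a `value` struct in which
# one typed slot is normally populated.
VALUE_SLOTS = ("string_value", "int_value", "float_value", "double_value")


def param_value(value: dict) -> Optional[Any]:
    """Return the first populated typed slot of an event_params value struct."""
    for slot in VALUE_SLOTS:
        if value.get(slot) is not None:
            return value[slot]
    return None


def flatten_ga4_row(row: dict) -> dict:
    """Turn one exported event row into a flat dict with one column per parameter."""
    flat = {k: v for k, v in row.items() if k != "event_params"}
    for param in row.get("event_params", []):
        flat[param["key"]] = param_value(param["value"])
    return flat


row = {
    "event_name": "page_view",
    "event_params": [
        {"key": "page_location", "value": {"string_value": "https://example.com/"}},
        {"key": "ga_session_id", "value": {"int_value": 1691567990}},
        {"key": "engagement_time_msec", "value": {"int_value": 0}},
    ],
}
flat = flatten_ga4_row(row)
```

Note the explicit `is not None` check: a legitimate value of `0` must not be mistaken for an empty slot.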

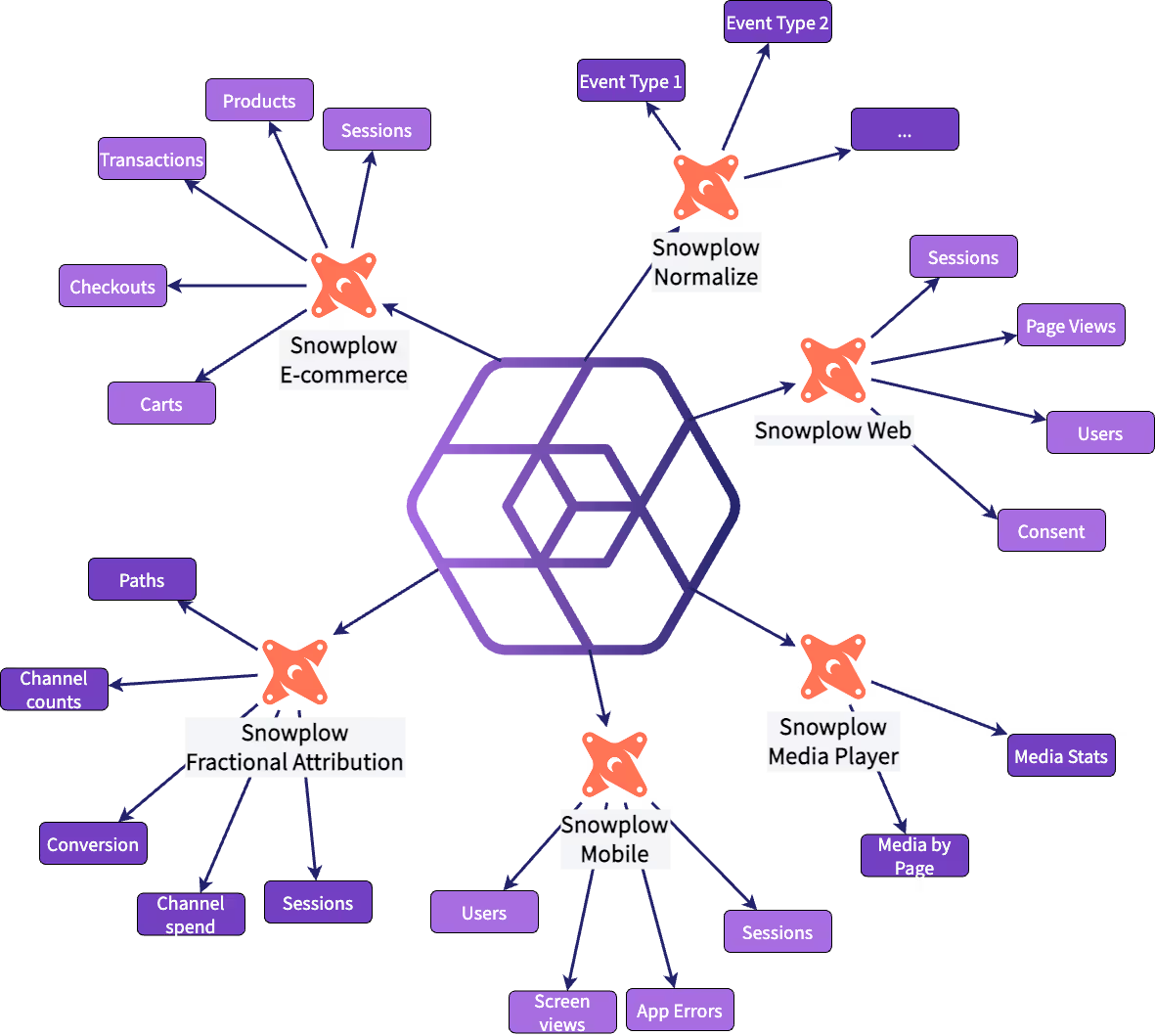

Snowplow data lands in a unified event stream: a log of all the actions that have occurred across your digital platforms. A series of out-of-the-box dbt packages then models your data for analysis. For example, web data is modelled at the page, session and user level to create BI- and AI-ready data for analysis or for building additional data products.

Our recent report with GigaOm found that Snowplow queries were 42% less complex and more user-friendly. Every model is optimized for efficient querying to prevent spiralling warehouse compute costs as your dataset grows.
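As a rough illustration of the event-to-session step, here is a toy roll-up in Python. It is not the logic of Snowplow's dbt packages, which also handle incremental processing, late-arriving events and many more fields. We assume the column names `domain_userid`, `domain_sessionid`, `event_name` and `derived_tstamp` as they appear in Snowplow's atomic events table; the sample events are made up.

```python
from collections import defaultdict
from datetime import datetime


def sessions_from_events(events):
    """Roll a flat event log up into one row per session (simplified sketch)."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event["domain_sessionid"]].append(event)

    sessions = []
    for session_id, evs in grouped.items():
        evs.sort(key=lambda e: e["derived_tstamp"])
        sessions.append({
            "domain_sessionid": session_id,
            "domain_userid": evs[0]["domain_userid"],
            "start_tstamp": evs[0]["derived_tstamp"],
            "end_tstamp": evs[-1]["derived_tstamp"],
            "page_views": sum(1 for e in evs if e["event_name"] == "page_view"),
            "events": len(evs),
        })
    return sorted(sessions, key=lambda s: s["start_tstamp"])


events = [
    {"domain_userid": "u1", "domain_sessionid": "s1", "event_name": "page_view",
     "derived_tstamp": datetime(2023, 8, 9, 10, 0)},
    {"domain_userid": "u1", "domain_sessionid": "s1", "event_name": "link_click",
     "derived_tstamp": datetime(2023, 8, 9, 10, 2)},
    {"domain_userid": "u1", "domain_sessionid": "s1", "event_name": "page_view",
     "derived_tstamp": datetime(2023, 8, 9, 10, 5)},
    {"domain_userid": "u1", "domain_sessionid": "s2", "event_name": "page_view",
     "derived_tstamp": datetime(2023, 8, 10, 9, 0)},
]
sessions = sessions_from_events(events)
```

The same grouping idea, keyed on `domain_userid` instead, yields a user-level table from the session rows.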

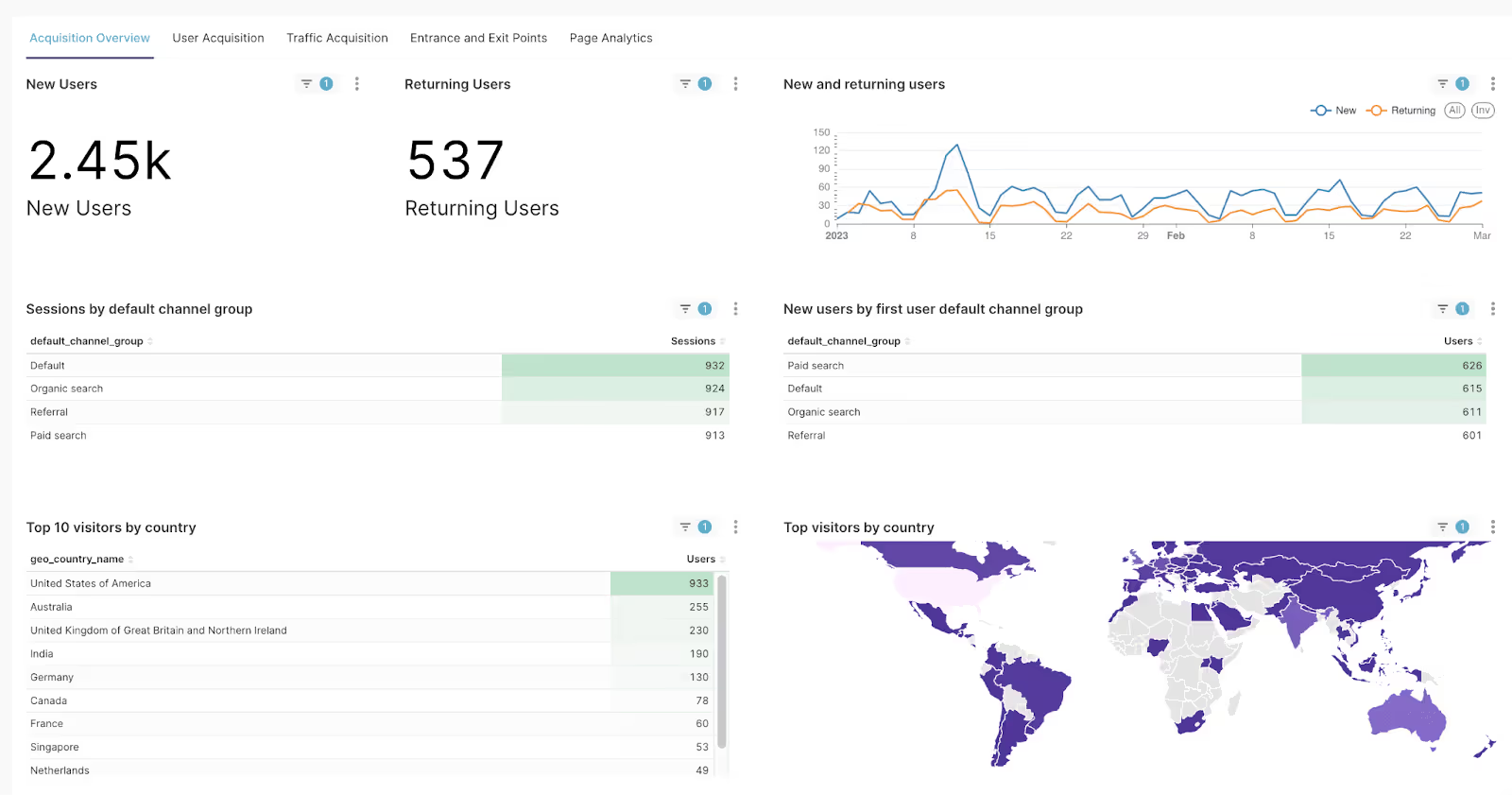

There is also a series of Data Product Accelerators that help you activate and visualize additional use cases, including marketing attribution and Snowplow Digital Analytics, which gives marketing teams access to all the standard Google Analytics reports, powered by Snowplow data.

Data Richness

The richness of your data is integral to providing context and drill-down options for your analytics, and because behavioral data is the strongest signal of intent, it also plays an important role in creating accurate model predictions. GA collects 50 attributes with each event out of the box and limits the number of parameters that can be collected with events, reducing the completeness and richness of the dataset.

The limitations are as follows:

- User-scoped Custom Dimensions: 25

- Event-scoped Custom Dimensions: 50

- Item-scoped Custom Dimensions: 10

- Custom Metrics: 50
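One way to see how quickly these limits bite is to check a tracking plan against them before implementation. The minimal sketch below uses the figures listed above; the quota key names and the example plan are hypothetical.

```python
# GA4 limits as listed above; the key names are our own labels.
GA4_LIMITS = {
    "user_scoped_dimensions": 25,
    "event_scoped_dimensions": 50,
    "item_scoped_dimensions": 10,
    "custom_metrics": 50,
}


def over_limit(plan: dict) -> dict:
    """Return {quota: overflow} for every quota the tracking plan exceeds."""
    return {
        quota: plan.get(quota, 0) - limit
        for quota, limit in GA4_LIMITS.items()
        if plan.get(quota, 0) > limit
    }


# Hypothetical tracking plan: how many of each definition the team wants.
plan = {
    "user_scoped_dimensions": 12,
    "event_scoped_dimensions": 64,
    "item_scoped_dimensions": 10,
    "custom_metrics": 8,
}
print(over_limit(plan))  # {'event_scoped_dimensions': 14}
```

Any overflow means those attributes either have to be dropped or folded into fewer, less specific parameters.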

Snowplow offers 130+ out-of-the-box events as well as an extensive set of plugins, including HTML5 media tracking (with events and entities to track interactions with media players and adverts), e-commerce tracking, form tracking and many more, providing the full context around each event and a richer dataset for analytics and for training AI models.

Data latency to power real-time analytics and applications

GA4 data exported to BigQuery currently cannot support real-time analytics or additional real-time applications, such as personalisation and recommendation engines.

GA4's daily export to BigQuery happens up to 24 hours after midnight in the property's time zone, and a daily table may take up to 72 hours beyond its date to be fully updated. Queries to Google's Data API are handled on a “best efforts” basis, limiting its ability to support additional real-time use cases.

Snowplow's real-time stream is highly reliable and backed by a strong SLA: data can be expected to land within 2 to 5 seconds, with guaranteed delivery to the warehouse within a strict SLA of 30 minutes.

The engineering team at Autotrader implemented Snowplow as part of their composable Customer Data Platform (CDP) to deliver real-time personalization of search results to improve user experience and drive a positive uplift in the mean number of advert views per session.

Data validation and quality controls

When it comes to web analytics, GA lacks built-in validation, quality controls and observability. With no way to detect or correct problems in collected events, teams must constantly verify the technical implementation of their data collection, and any data quality issues have to be addressed downstream.

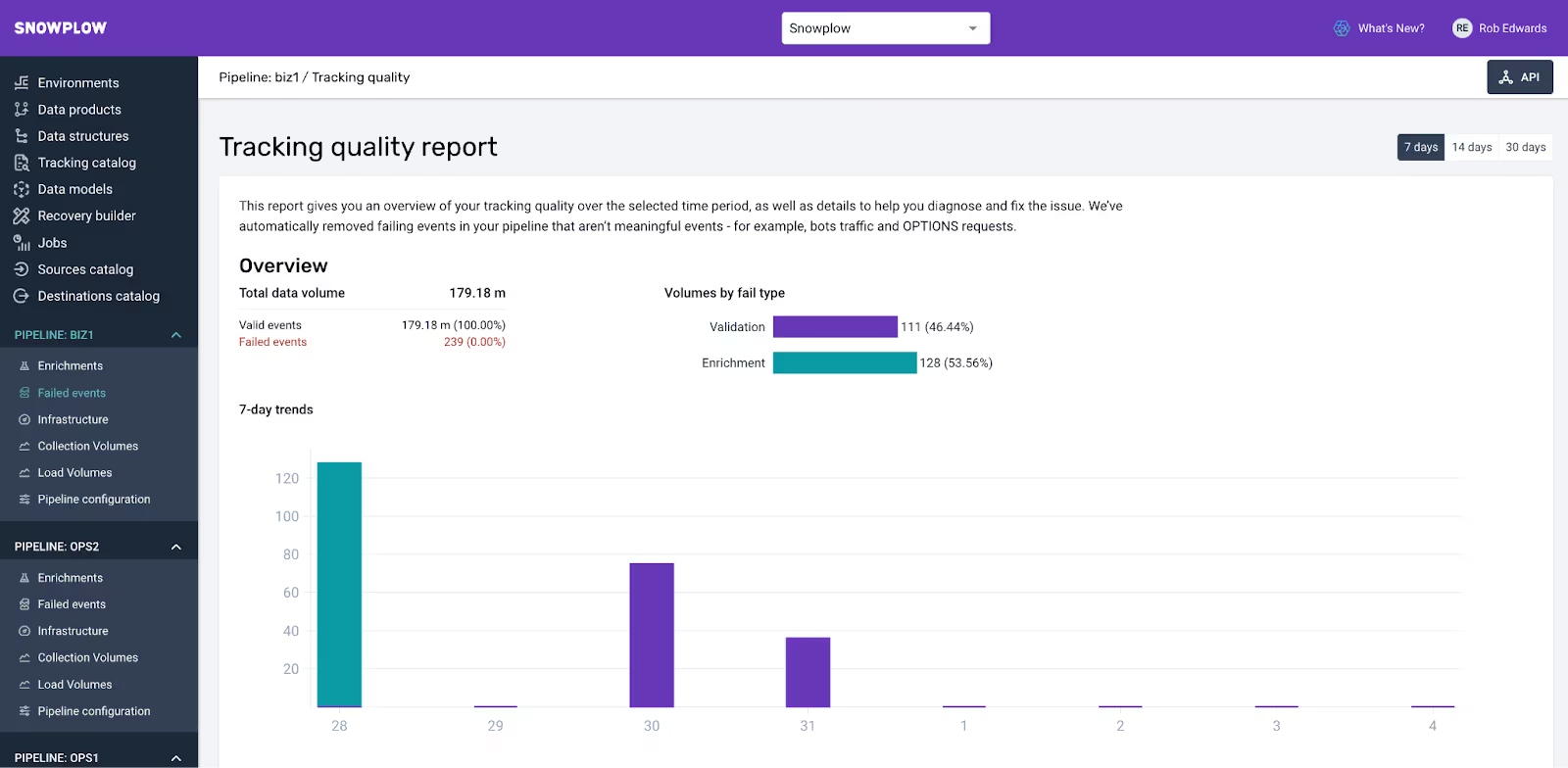

In contrast, Snowplow takes a proactive approach to data quality centred around a customizable event schema that you define. Every single event is validated against its expected structure before it lands in your downstream destinations, providing a dataset that is ready for analysis while bad events can be reviewed to address data quality issues.
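The validate-before-landing idea can be sketched in a few lines. Snowplow itself validates events against JSON Schemas resolved from an Iglu registry; the hand-rolled check below covers only a small subset (required fields, types and no unexpected fields) and is meant purely to illustrate how events split into good and bad streams. The `add_to_basket` schema and the sample events are hypothetical.

```python
# Hypothetical schema for an "add_to_basket" event: field -> (type, required).
ADD_TO_BASKET_SCHEMA = {
    "sku": (str, True),
    "quantity": (int, True),
    "unit_price": (float, True),
    "currency": (str, False),
}


def validate(event: dict, schema: dict) -> list:
    """Return a list of human-readable errors; an empty list means valid."""
    errors = []
    for field, (expected_type, required) in schema.items():
        if field not in event:
            if required:
                errors.append(f"missing required field '{field}'")
            continue
        value = event[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            errors.append(
                f"'{field}' should be {expected_type.__name__}, got {type(value).__name__}"
            )
    for field in event:
        if field not in schema:
            errors.append(f"unexpected field '{field}'")
    return errors


def split_good_bad(events: list, schema: dict):
    """Route valid events to `good`; keep invalid ones with their errors for review."""
    good, bad = [], []
    for event in events:
        errors = validate(event, schema)
        if errors:
            bad.append({"event": event, "errors": errors})
        else:
            good.append(event)
    return good, bad


events = [
    {"sku": "A1", "quantity": 2, "unit_price": 9.99},
    {"sku": "B2", "quantity": "two", "unit_price": 4.5},
    {"quantity": 1, "unit_price": 1.0, "colour": "red"},
]
good, bad = split_good_bad(events, ADD_TO_BASKET_SCHEMA)
```

Keeping the failed events alongside their error messages, rather than silently dropping them, is what makes it possible to review and fix the underlying tracking issue.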

Ready to explore how you can replace Google Analytics with Snowplow?

- Book a demo to see how Snowplow for Digital Analytics can help you replace Google Analytics;

- Read more about the GigaOm Report comparing Snowplow and Google Analytics for digital analytics.