How to build a content recommendation engine with Snowplow

Recommendation systems infer the context that will maximize user engagement and satisfaction and, thus, drive growth. With their importance now universally considered as self-evident, it is of no wonder that companies have started to seriously view their recommendation engines as a strategic asset.

Data quality goes a long way. By reducing accidental complexity, it enables you to confidently build recommendation systems that are reliable, agile and easy to reason.

The following sections describe the considerations, design and implementation decisions in the process of building the content recommendation engine for the Snowplow blog. Starting from Snowplow, event-level data points, through data modeling, we leverage the granularity of behavioral data to form a clear view of our content's performance and consequently generate successful recommendations.

Getting started

In the following sections we assume that you know what Snowplow does, what Snowplow granular raw event data looks like, what tracking design is and why event data modeling is important, but you can certainly read more following the links below:

- Understanding tracking design

- An introduction to event data modeling

- What is data modeling and why do i need it

- Snowplow web data model

Recommender systems

As a brief overview of the relevant terminology, content recommendation systems are generally comprised of the following components and phases:

- Users: The consumers of the content.

- Items: The content monads. For example, news articles or blog posts, songs or albums, videos etc. Usually, items and recommendations are of the same kind.

- Preferences: A user’s perceived value of an item. They can be explicit (user ratings) or implicit (derived from user behavior), and they are used in the scoring phase.

- Strategies: The assumptions, the component definitions and the algorithms used to recommend items to users. They typically determine all phases from scoring to candidate selection and ranking.

Content recommendation systems usually employ one or a combination of the following strategies:

- Metric-based:

Candidate items are ranked based solely on overall aggregate metrics, such as the latest, most popular or most shared ones, and even though the metrics may have been derived from past user journeys, the recommendations are eventually invariant to the current one. In other words, at a particular point in time, every user gets recommended the same things.

- Personalized:

These strategies are considered personalized because the recommendations generated do depend on some parameters of the current user journey. Candidate items are ranked based on similarity scores. The two most common algorithms are content-based filtering and collaborative filtering. The first calculates similarity of items' features. The user will be recommended items with features similar to the ones of the item currently in view, for example, songs from the same artist, books from the same category etc. Collaborative filtering goes one step further and also uses similarity of user characteristics and traits. Such a recommendation engine will recommend an item to a user based on the interests of similar users.

At this point, it is worth noting that, under the scope of the current and emerging privacy regulations and public awareness, the approaches to user similarity may need to be reconsidered. Until now, the user characteristics making the similarities predictable involve access to personal data that are probably crossing the line.

Anonymity and personalization are not inherently mutually exclusive. Having high quality event-level behavioral data across your digital products allows you to tailor your recommended content and improve the user experience with limited or even without user identifiers. As an example, for building this content recommendation engine, only session information was used.

Behavioral data

Behavior presents an abstraction that transcends the usual notion of similarity. Similar content may not be what the user needs next. Similar users do not necessarily need the same things. Social psychology research consistently finds behavior to be a more accurate predictor of itself than user traits, characteristics, or even attitudes.

Of course, the time parameter is also important. If the time frame is too big, we all, as users, need and like the same things. If the time frame is too small, we are all essentially unpredictable. How can you ensure that your recommendations do not reflect behaviors that are too random or too average to be personal?

Domain knowledge plays a major role in identifying the behaviors and user journeys of interest. Having the flexibility to explicitly embed your domain knowledge and business logic into your data pipeline, from the tracking design to your data models, can create a competitive advantage.

Snowplow enables you to unlock the value of this flexibility and stay agile on a data system level. Snowplow’s event-level behavioral data can be as granular as your business logic demands in order to specify different user journeys, accurately measure your content’s performance and derive reliable user engagement metrics for the scoring and ranking functions of your recommendation engine.

Designing the recommendation system

This section describes some of the decisions involved in the process of designing a content recommendation system using Snowplow granular event-level data. As mentioned above, our example recommendation engine is for the Snowplow blog. However, all of the design decisions assume a fairly standard tracking design and pipeline configuration, so that they can be easily generalised and adapted in your specific use case.

As a minimum set of requirements, the recommender system should be able to:

- Access historical data and process them periodically.

- Store the output of processing so that it can be queried.

- Expose an API to receive and respond to requests from the web server.

Recommendation strategy

User preferences are implicit and will be calculated from Snowplow’s detailed engagement data. In other words our scoring will reflect not only the business logic but also the content’s performance. In the data modeling section below, you can see how a variety of scores can be derived from Snowplow data.

The recommendation strategy that will be used can be described as a combination of metric-based and personalized strategies:

- The metrics used are derived from event-level granular data points, aggregating over users’ detailed engagement data such as the time spent on a blogpost page, the percentage scrolled, the conversion clicks, the social shares or other interactions of interest that may happen as part of the user journey.

- The viewing history of the user journey will also be an input to the recommendation engine, meaning that no users will be served the same recommendations unless their viewing histories are identical. In other words, we will be using behavioral similarity rather than content or user similarity.

More specifically, as an outline, we will:

- Calculate a score to represent the value of each page view on the blog.

- Aggregate the page views for each blog post in order to assemble aggregate metrics.

- Consider as relevant the blog posts that cooccurred with high-value page views during the same session.

- For each blog post, aggregate the candidate items ranked by their respective scores to map items to respective lists of relevant ones.

- Filter out the blog posts already viewed during the current user journey.

Architecture

As a starting point, we will be building upon a basic Snowplow pipeline setup in AWS. However, the architecture can be easily transferable to GCP.

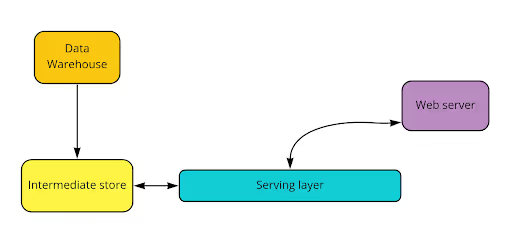

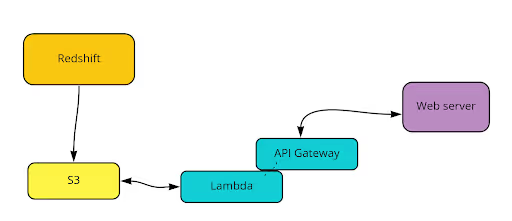

From a high level, it can be depicted as:

Having loaded our event data into a database like Redshift, we can then run our recommendation algorithm as an SQL model that also unloads the relevant data to S3. Our blog web server sends recommendation requests to API Gateway, which triggers a Lambda function to retrieve the relevant data and potentially apply any additional filtering in real time.

Building the content recommendation engine

We have already set up Snowplow data tracking on the blog website using the JavaScript tracker, to track standard page views, with activity tracking enabled (with 10 seconds heartbeat), link clicks etc. We use the default session cookie duration and have also enabled the predefined web page context.

Data modeling

Once the raw data is in the warehouse, we will need to aggregate over event-level data in order to make them available in a structure suitable for querying. Implementing the data models is the most essential step.

First, following the strategy design, we need to decide on our business logic and define the higher order entities of interest. In doing so we also leverage the part of the Snowplow web data model that aggregates Snowplow atomic data to a page view level. In particular we will use its derived.page_views table, and consequently adopt the business logic included, which in particular defines:

- A page view where the user_engaged, as one where the user engaged for at least 30 seconds and with a scroll depth of at least 25%.

- A page view where the user_bounced, as one where the time on page is zero.

Furthermore we also define:

- session_of_intention, as a session during which the user showed an intention of conversion by clicking on the buttons to get started or request a demo.

- social_shares as the events of clicking to the social buttons.

- blog_events as all the events where the app_id and the page_urlpath match for the Snowplow blog.

- blog_pageviews as the page views where also the app_id and the page_url_path match for the Snowplow blog.

- As blogposts, the discrete url paths from the blog_pageviews.

- As user journeys of interest, the ones of existing or potential Snowplow customers. Assuming that customer interests are different from, for example, job seeker interests, we need to filter out the unrelated user journeys, by excluding all events that occurred in the same session with a visit on Snowplow’s career page.

The SQL model so far could be depicted as:

Having aggregated from the raw event data, where there is one row per event, we created tables with one row per higher-order entities, as we defined them in the business logic.

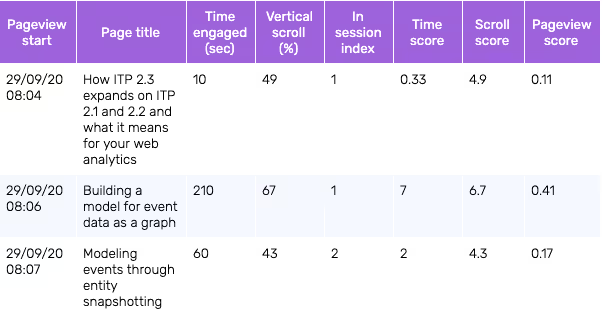

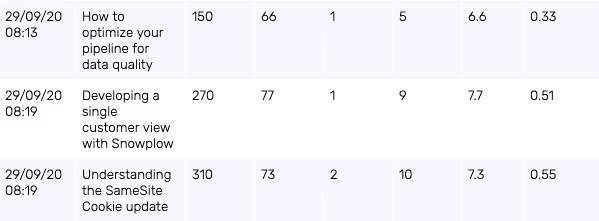

The blog_pageviews table has one row per pageview on the blog (some columns omitted for simplicity).

As you can see, having these accurate views, we implemented scoring. As also mentioned in the design section, we assigned a score to every blog pageview to reflect its “value”. This value function is also part of the business logic, which in turn evolves, so you can keep experimenting with many value functions and use them in your A/B testing.

For this case, the total_score was calculated as a linear combination of the following variables, with weights reflecting the importance we consider each to have:

- time_score

- scroll_score

- intention_score

- aging_factor

The aging factor is being used in order to account for the fact that a pageview that happened for example 2 years ago, has less value for us today compared to a pageview that happened more recently. In other words, this parameter is used to make the value of a pageview relative to the present.

In more detail:

-- ...

-- SCORING

, (CASE

WHEN a.time_engaged_in_s < 300 -- 90th percentile of engaged views

THEN (a.time_engaged_in_s / 30.0)

ELSE

10

END) AS time_score -- [0,10]

, (a.vertical_percentage_scrolled / 10.0) AS scroll_score -- [0,10]

, session_of_intention * 10 AS intention_score -- [0,10]

, (CASE

WHEN page_view_age_in_quarters < 3

THEN 1

ELSE

LN(page_view_age_in_quarters)

END) AS aging_factor -- >=1

, ((0.4 * time_score) + (0.2 * scroll_score) + (0.4 * intention_score)) / (10 * aging_factor) AS total_score -- [0,1]

-- ...

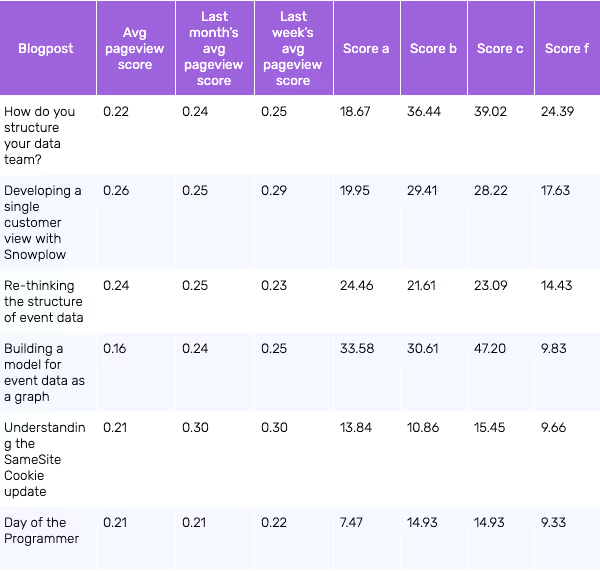

Having a page view score, enables us to derive various aggregates when grouping by blog posts. Below, you can see in the last columns various scores named a, b, ..., f, that are derived by combining various columns as parameters to the blog posts' scoring function.

Generally, scoring functions are some of the ways biases propagate or get created in recommendation systems. There is a lot of literature on the matter, and even though there is no definitive solution, but being aware of this is important. In the scoring functions above, for example, it may seem possible to reduce popularity bias by not including the number of views as an increasing factor. By doing so, however, other first or second order biases may start to shine, for example, a kind of minority bias, that naturally skews any averaging function. By having consistently high quality data to feed any personalization engine, you are already at a great starting point, since higher quality reduces bias. However, with biases, be they statistical, algorithmic or cognitive, we all always need to be alert. Especially when we engineer systems that measure or influence human behavior.

As a last data modeling step we define relevance, also as part of the business logic. As an example, we define two blog posts to be relevant if they co-occur in the same session with high value pageviews, choosing score_f. We could then create the blogpost-cooccurrences table like:

WITH

prep_self AS (

SELECT

a.urlpath AS pair_A

, b.urlpath AS pair_B

, AVG((a.total_score + b.total_score) / 2) AS pair_score

, ROW_NUMBER() OVER (PARTITION BY pair_A ORDER BY pair_score DESC) AS row

FROM blog_pageviews AS a

INNER JOIN blog_pageviews AS b

ON a.session_id = b.session_id

WHERE a.urlpath <> b.urlpath

GROUP BY 1,2

), prep_scores AS (

SELECT

urlpath

, score_f

FROM blogposts

)

SELECT

pair_A

, LISTAGG(pair_B, ',')

WITHIN GROUP (ORDER BY score_f * pair_score DESC) AS recomms

FROM (SELECT * FROM prep_self WHERE row < 16) AS cooccurrences -- limit list size

INNER JOIN prep_scores AS scores

ON cooccurrences.pair_B = scores.urlpath

WHERE pair_A IN (SELECT urlpath FROM prep_scores)

GROUP BY 1

Serving layer

In order to fetch recommendations, the web server will make a POST request to the Lambda proxy API endpoint, including the view history of the current user journey as a body parameter.

The Lambda function triggered will be responsible to parse the body parameters, then to query S3 (using the S3 Select capability), parse the response to get an array of recommendations and filter them in order to provide the final array of recommendations as a response.

S3 Select is a performant way to retrieve a subset of data stored in an S3 bucket using simple SQL statements. Even though it does not support the full functionality of other services like Athena, it enables us for this use case to avoid using another service from the AWS stack, and keep the engine’s architecture simple. S3 Select uses the SelectObjectContent API to query objects stored in CSV, JSON or Apache Parquet format. This matches the format we specified in Redshift’s unload command. As an example of the parameters it accepts:

{

Bucket: yourBucket,

Key: yourObjectKey,

ExpressionType: 'SQL',

Expression: “SELECT recomms FROM s3object s WHERE urlpath=’/blog/post’ LIMIT 3”,

InputSerialization: {

CSV: {

FileHeaderInfo: 'USE',

FieldDelimiter: delim

},

CompressionType: 'GZIP'

},

OutputSerialization: {

JSON: {

RecordDelimiter: delim

}

The serving layer of a recommendation engine can be customized to include any additional business logic, in order to filter or enrich the final response.

Where to go from here

As also mentioned above, when building our recommendation engine we intentionally kept the design and implementation decisions simple. You can actually build it even if you are just starting out with Snowplow. Another main reason for doing so was in order to show that the high quality and the granularity of event-level data can give a starting advantage to a recommendation engine just by themselves. Furthermore, even though this system can serve as a base model as is, it is also fairly extensible. There are several directions to extend it, some of which could be:

- Augment the personalized part of the recommendation strategy.

A simple way to do so could be by using custom contexts in your tracking design, like an article or a user entity, to augment your standard Snowplow events.

- Inform the serving layer in real-time.

For example, if your user logs in or subscribes, maybe you would like to switch to a different scoring or filtering strategy for the content you recommend.

- Explore ways to evaluate the system.

In the short run, any recommendation system will seem to increase user engagement. But as you recommend content, you practically influence the data you feed the engine. This creates a feedback loop that degenerates the system in the long run. Having high quality and granular data points enables bottom-up analysis to minimize the confirmation bias during evaluation of the system performance. It is worth exploring de-biasing techniques in fully visible recommendation engines like the one we just built, to increase confidence in potential future deploys of advanced yet "black box" machine learning models.

- Include recommendations in your tracking design.

Besides other things, recommendations are a great way to understand your users. You could start by tracking recommendations as custom entities to gain a full picture of how they fit into or influence the user journey.

Start recommending content with Snowplow

Snowplow enables you to build a high quality content recommendation engine that aligns with your business goals to enhance user experience and increase engagement, conversion and retention rates. In the sections above, we presented a fully working way to build a content recommendation engine fuelled by Snowplow granular, event-level, behavioral data.