From Data Assets to Data Products: How to Unlock Self-Service Analytics at Scale

Companies have always tried to empower their employees to self-serve data. Traditional approaches have focused on curating a centralized data set, facilitating access and providing convenient UIs for analyzing that data.

In the last five years, AI has created opportunities for companies to use data in radically new ways. At the same time, the proliferation of digital platforms means organizations can create and work with entirely new data sets.

Treating data-as-a-product is a new approach to managing data that has emerged in recent years. It goes a long way to solving the challenges of driving self-service, especially in today’s world where data consumers have the ability to do so many interesting things with so many different data sets.

In this post, I’ll examine why it’s so important to treat data like a product. In the follow-on blog post, I’ll look at how treating data-as-a-product supports the realization of value in data applications.

Why treat data-as-a-product?

Data is not oil

Data is valuable. The world’s leading companies use data to differentiate themselves from the competition.

The best companies in the world are “democratizing” data so that every employee has access to lots of data that gives them up-to-the-minute insight into what’s going on in their business (e.g. what are our sales, who are we selling to, how profitable are those sales, how were they generated, who bought, what type of customers they were…). This gave rise to the idea that data is an asset.

However, organizations face a wide range of challenges in realizing the value of their data, even when adopting best-in-class technology stacks (data platforms), such as cloud data warehouses, modern BI tools, AI tools, data transformation tools etc.

Enabling end users to self-serve data is difficult

It’s likely you’re keen to enable end users in the different lines of your business to “self-serve” on data:

- For end users, self-service is empowering - they can develop their own insights, make better decisions and build their own data applications to drive more impact and be more successful

- For management, the ability to self-service means that the quality of decision making across your organization is improved

- For data teams, they can drive much more value by enabling a greater number of people to derive value from the data than if they had to create that value themselves - the data team is not constrained by their own size

For this reason, self-serve has been a big topic in the data industry for decades: business intelligence solutions emerged in the 1970s and 80s to enable different people across the organization to analyze the data in the data warehouse themselves.

More recently, semantic layers and data catalogs have emerged as tools to support self-service.

Despite all this, self-service is still difficult. To enable self-service, it turns out that it isn’t enough to give end users access to data and tooling to slice and dice the data.

Data consumers need more than just data and tooling to be able to self-serve effectively

They need to know what data is available

Data discovery is a significant challenge in most organizations: there is typically a lot of data. That’s why data consumers need some kind of catalog that clearly shows what data is available so they can find what they’re looking for themselves.

They need to know how to access the data

It may not be enough to know that there is data available in a particular table, API, event stream, semantic layer, feature store or analytics tool.

Data consumers might need additional instructions on how to access the data, including the ability to gain access to logins / permissions.

They need to know what the data means

To actually use the data to draw insight, engineer features or deliver a dashboard, data consumers need to understand and interpret the data.

This is only possible if they understand what the data actually means, which in practice often means how it is generated (i.e. what triggers a new line of data, and the rules for setting the different cell / property values). This is typically referred to as the semantics of the data.

They need to know the quality of the data

The data consumer requires a certain level of data quality - how high this level is depends on how they plan to use the data. If the data is going to drive an AI model, especially for a critical decision (e.g. fraud detection), the quality bar is set much higher.

If the quality is too low, the data consumer should not waste her time trying to use the data to solve her problem.

They need to know any SLOs / SLAs associated with the data

If a data consumer wants to build a production application that uses this data on an ongoing basis, she needs to know whether she can rely on the data being delivered within a certain timeframe, for example. This is particularly important for real-time and near-real-time applications.

To enable self-service, data needs ownership

All the above information is metadata, i.e. data about the data.

This is information that hopefully lives in a data catalog, but someone needs to populate the data catalog.

Someone needs to own the data and be responsible not just for the entry in the data catalog, but also for ensuring that the data continues to meet the standards set in the data catalog (and that changes to the data are reflected in the catalog). This means that you have to be the owner of the data sets.

Of course, we want to automate the population of as much of the metadata in the catalog as is possible. But there is always some, critical metadata that a human being will need to manually populate – or example around the semantics of the data.

Data teams are not in a position to own all the data

If they are the only owners of data, they become a bottleneck

In many organizations, the responsibility for delivering data to the business (and managing assets supporting the use of the data, such as data catalogs, semantic layers and business intelligence tools) lies with a central data team that is charged with driving data usage across an organization.

The amount of data that is democratized around the business depends on the capacity of the central team.

Data teams are often not in a position to own specific data sets - especially data sets that live upstream of the data warehouse

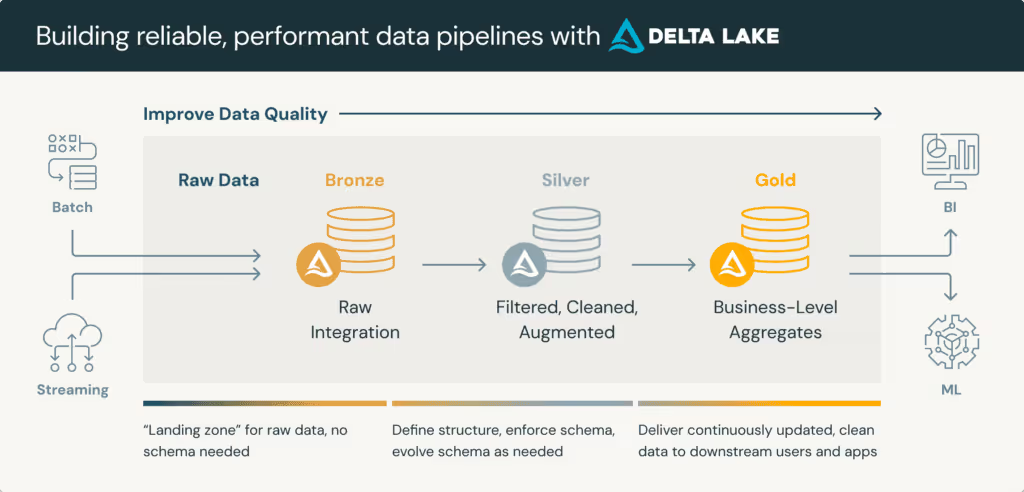

The data value chain is long and opaque, making it difficult to understand who owns what. Often the data that is “democratized” in a business is the “gold” data in a medallion architecture.

- Raw data from different sources is landed as bronze data

- This is cleaned up into silver tables, which are intermediary building blocks

- Silver tables are then computed to deliver “gold” tables - business-level aggregates that are optimized for direct consumption by individuals in the different lines of business

In this setup, it is common to have a central data team responsible for the flow of data from bronze to silver and to gold. By extension, this makes them responsible for the gold tables.

However, the management of the data upstream of the data warehouse might be completely opaque, with a variety of tooling used to bring in data from different source systems, and multiple people responsible for the data quality of each of those systems.

For example, anyone who’s had to work with Salesforce data in a data warehouse will know this can be incredibly challenging.

This is because it’s hard to get sellers and other end-users of the tool to adhere to high data quality standards when entering data.

Consequently, it becomes hard to successfully process and transform the Salesforce data into something useful from an analytics perspective in the data warehouse, because the structure of the data is not optimized for analytics or insight.

Try getting someone on the data team to compel the sales team enter data into salesforce in a specific way to make working with the data easier: it does not work.

They might not be the right people to own data sets downstream of the “gold” tables

It gets more complicated. End users who want to self-serve the data are likely to explore the gold tables.

But then they might take one or two tables and combine them to produce a useful view on the data. Or they might not find what they want in the gold tables, and work back to the silver tables, because they want to drill into a level of granularity that isn’t available in the gold tables. They’ll then take that information and combine it with data in other tables.

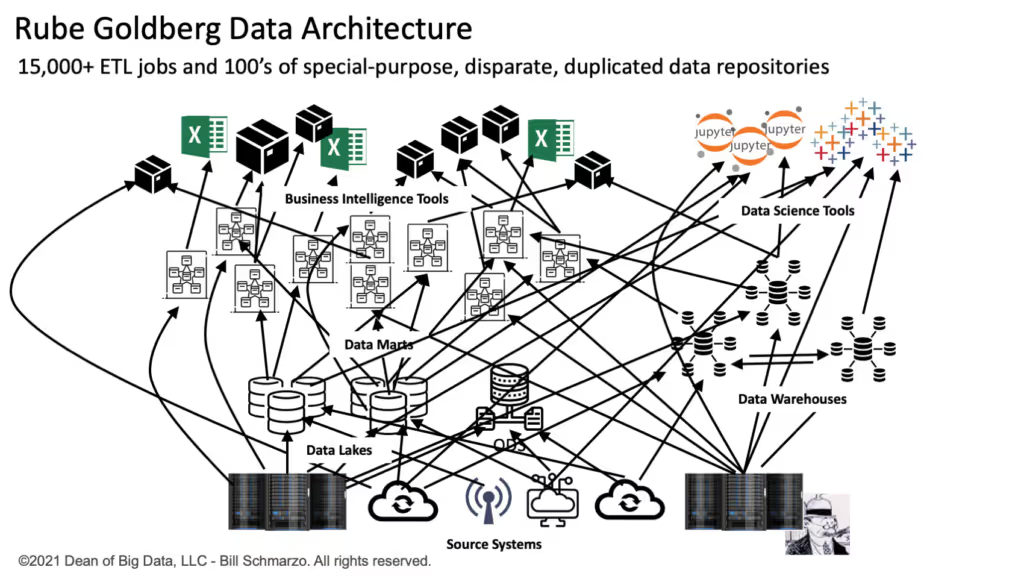

A third user might find that combined view helpful, and then take those tables and combine them with some other tables. Pretty soon you have a spaghetti of data pipelines and tables, where it isn’t clear:

- Who created which table

- Why they created each table

- What the level of governance is on each table

- What the upstream dependencies are on each table

- What each table means

- What each table is used

- What SLAs (if any) are offered around this table

To be clear, it’s great that teams in the lines of business are building new data tables that are not only helping themselves but also supporting other folks in the business.It’s also great that they’re building them on top of well- governed gold tables.

But asking the data team to keep track of those tables and “own” them is not going to work.

From data-as-an-asset to data-as-a-product

One solution to the above challenges is to treat data-as-a-product:

- Data tables have specific owners. (You can call these people “data product owners / managers” - note that in many orgs today, there are “shadow” data product managers i.e. people with other job titles but who are held responsible for one or more data sets.)

- Different people own different data sets

- Data sets (e.g. specific tables) have customers – people that use the data to do stuff, including doing analysis, powering data applications e.g. fraud detection engines, dashboards

- Those customers have needs that evolve

- The data product owners / managers are responsible for meeting the needs of the customers of the data tables. This means they have a way of keeping track of who is using what data. It also means anticipating those changing requirements

- The data product owners / managers have teams (e.g. with data engineers, analytics engineers, data scientists) so that they can build what is required to meet the needs of their customers

- The data product owners / managers are responsible for the upstream pipelines that deliver that data set - unless they’re consuming from a different data set with a different data owner. In this case, they are a customer of that data set, with a dependency on it

- The data tables have a roadmap, worked out by the data product owner, to meet the needs of the customers

- As part of her commitment to meeting the needs of customers, the data product owner is not just responsible for the delivery of one or more data tables, but all the additional things that a customer would need to be successful with the data. This might include:

- Metadata i.e. entries describing the data in the data catalog

- APIs and UIs for accessing and working with the data

Multiple people and teams can create and publish data sets

Treating data-as-a-product is a valuable way to help organizations democratize data by ensuring that there are people with the right mindset and skills to facilitate the publishing and usage of different data sets by different stakeholders across the business.

However, if the data team is the only team capable of publishing and managing data tables for the rest of the business, then it will become a bottleneck for data democratization.

Instead, if many more teams in an organization can be empowered to publish and manage data sets for the rest of the business, then that bottleneck will be removed.

Instead of competing on “how many teams use data to drive decision making”, companies can start competing on “how many teams are creating data sets that are adding value to the rest of the business”.

Teams need to be incentivized and empowered to publish and manage data sets that are valuable to the rest of the business

This is a difficult problem to solve. But it can be solved.

Many teams need to create data sets for their own internal use. For example, a product engineering unit might create a data set that describes how users engage with their product so that they can use this data to improve the product.

What if they had an incentive to publish and maintain this data set as a product that can be used by other people in the organization?

Maybe it’s important for the customer success team to understand how different people are using the product and tailor their success plan accordingly? For the marketing team, the data could be useful when it comes to segmenting customers for specific campaigns.

The key is to provide different teams with technology that makes the process of publishing and then managing data sets as a product as easy as possible. This lowers the cost to individuals and teams to create and manage data sets, empowering more people and teams to do so, thereby supporting the whole organization to develop and use more data sets across the business.

Data contracts support treating data-as-a-product

Data contracts are powerful technologies that enable individual teams to create and manage data-as-a-product.

Data contracts are machine-and human-readable abstractions that describe what each data set is (I.e. contains all the relevant metadata that a data consumer would need to successfully self-serve on the data.)

Further, data contracts are enforceable, i.e they don’t just describe how the data should be, but can also be used to provide some level of assurance / guarantee that the data meets the specification given.

For any data set we want to create, we can define a data contract that formally specifies:

- Who owns the data

- What the data means. In practice - how the data was created / manufactured

- How the data is structured (i.e. the schema)

- Where the data can be accessed. (From which API, via what type of protocol and access method(s))

- SLAs for delivery and access

- SLAs for data quality

- Policy – what you may and may not do with the data

Data contracts can support organizations to effectively treat data as a product and thereby democratize and manage data more effectively, by providing the basis of a huge amount of automation which should make it easier for different teams to publish and manage data as a product:

- Anyone who wants to create a new data set can start by defining a data contract

- Technology (e.g. Snowplow) can then be used to automate as much as possible the process of creating (manufacturing) this data set, based on the definition

- The contract can be used on an ongoing basis to ensure compliance with SLAs

- Technology (e.g. Snowplow) can alert on breaches of the SLAs, e.g. if the data does not match the schema or the latency of the data has breached the SLA)

- The contract can be versioned so that people understand the evolution of a data set over time

- The contract can enable interoperability - for example a data contract can be used to facilitate the integration of a Snowplow data set with: a data catalog, a BI tool, a semantic layer, a data science toolkit, a user permissions and access management system. The contract can be used, for example. by access control systems to ensure that only the right people have access to the data set, or by other tools to automatically expire or anonymize data after a certain period of time

I wrote more about data contracts in my Substack post, ‘What is, and what isn’t, a data contract’ [https://datacreation.substack.com/p/what-is-and-what-isnt-a-data-contract].

So, what is a data product?

Now that we understand what it means to treat data-as-a-product and why we should do so, let’s come back and think about what a “data product” actually is.

There is a real lack of consensus in the industry about what a data product is. Some commentators associate the definition of data products with treating data-as-a-product, whilst others thinking more expansively about data applications (i.e. all the different types of applications you could build that utilize this data - more on this in my next post.)

My strong preference is that we define a “data product” as a data set that is treated as-a-product, for three reasons:

- Treating data-as-a-product is an incredibly important and powerful shift in the way we think about and manage data

- We already have a perfectly good way to refer to data applications i.e. “data applications”

- It is helpful to distinguish between “data applications” and the “data sets that are treated as products” i.e. “data products”

So if a data product is a data set that is treated as a product, what are the things we would expect to make up that data product?

- The data itself

- All the metadata required to enable people to self-serve on the data (discussed above)

- Additional metadata, e.g. who owns the data set

- A data contract. This would be a machine-and-human readable version of all the metadata.

A data product then is a well documented data set with an explicit owner, who manages it as a product, and an associated data contract.

Rather than thinking about data as an asset, organizations should instead be looking to build data products, as an effective approach to enabling much wider, self-serve usage of data across the organization.

In my last post, I took a more detailed look at data applications and explained how data products provide an excellent foundation for developing more effective data applications.

How we are empowering data applications and products at Snowplow

Through my work at Snowplow, I am lucky enough to have a canvas where emerging ideas like data applications and data products can be implemented for our customers to test out and share feedback on.

I am delighted to announce that this week we are launching Data Products into the Snowplow Behavioral Data Platform. This follows the launch of Snowplow Data Apps.

We are building data products to help customers bridge the gap between data producers and data consumers, documenting the behavioral data used in your business to empower a culture of self-service.

To find out more about Snowplow Data Products, please check out our launch blog post.

Read more articles like this

This article is adapted from Yali Sassoon's Substack Data Creation: A blog about data products, data applications and open source tech.