The role of data modeling in the modern data stack

In recent years, data modeling has seen something of a renaissance within the data landscape. Transformation (as it’s also referred to) is on everyone’s lips, and powerful solutions like dbt, in combination with leading ingestion tools like Snowplow, have unlocked the power of transforming data to thousands of organizations.

Data practitioners spend a lot of time thinking about how best to model their data, the best tools to use and the talent to hire to perform key transformations.

So what’s driving this new wave of enthusiasm for data modeling, and why is it such an important part of the modern data stack?

What is data modeling, and why is it important?

Essentially, data modeling is the process of taking raw data from digital platforms and products and transforming it into derived tables that can be used for specific purposes, be that for reporting or analytics use cases.

There are two major benefits from data modeling:

- It allows you to condense large, unwieldy raw data into data sets that can be ingested into Business Intelligence (BI) tools.

- It means you can apply business logic to raw, unopinionated data, turning it into specific, opinionated data that matches your organization and wider goals.

You could think of these aggregations as ‘chunks’ of the data, carefully selected depending on what the data is going to be used for.

"Data modeling is the process of using business logic to aggregate or otherwise transform raw data" – Cara Baestlein, Product Manager at Snowplow

How good data models drive data productivity

It can be argued that your data is only as good as its productivity. Let’s look at ‘data productivity’ in isolation for a moment and what it means for a business.

A study by McKinsey suggests that three quarters of data leaders said the impact of revenue or cost improvement they had achieved through their data projects was less than 1%.

This statistic reflects how challenging it can be to drive value from data projects, and how vital it is to get data into the hands of those who need it.

One way to define data productivity might be to frame it against operational value. For instance

- When data is freely ready and available to Marketing, Product and other teams, and is used on a daily basis to inform business decisions, data productivity is high.

- When data does not flow freely, when it is caught in silos, too messy to be used to inform decisions or not produced in a format that supports internal teams – data productivity is low.

What we are seeing today is a generation of data-informed companies waking up to the opportunities of high data productivity. These organizations are taking ownership of processes like data modeling, as well as breaking out of the all-in-one solutions (that we sometimes refer to as ‘packaged analytics tools) that do the data modeling for you.

Why the rise of the modern data stack and the rise of data modeling are connected

It’s no coincidence that this concept of building a modern data stack is exploding at the same time as a surge in demand for data transformation solutions.

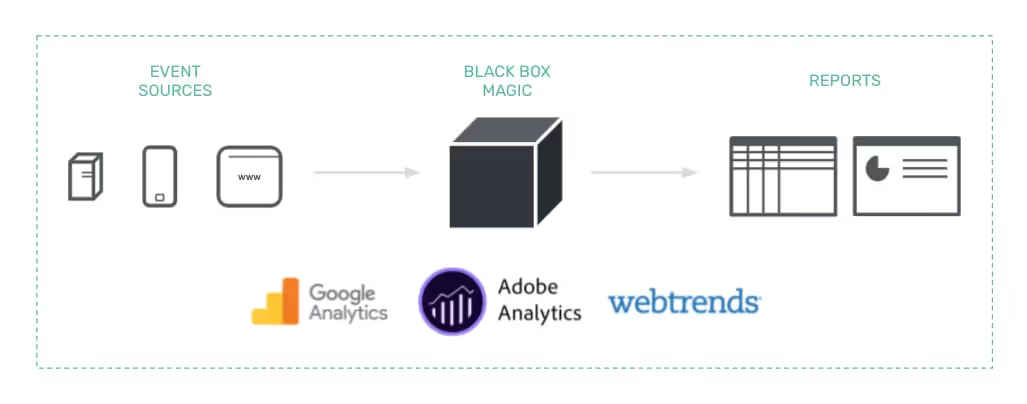

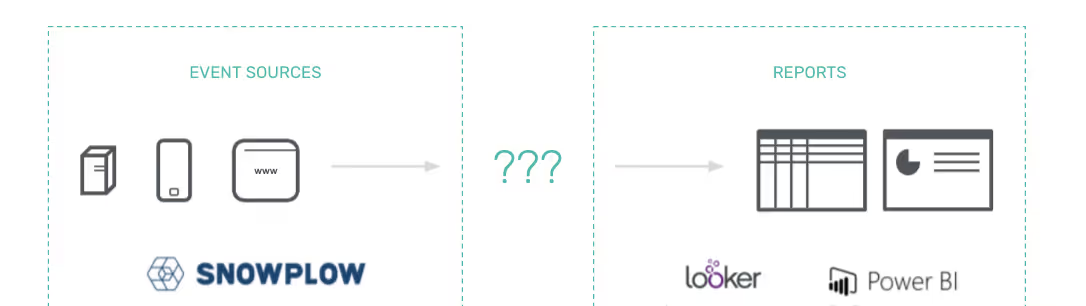

Many companies are seeing for the first time how powerful it can be to own their data stack from end to end. While automated processes remove complexity, they also remove the ‘voice’ of the organization when it comes to key stages in the pipeline. Instead, data goes into a ‘black box’ where we don’t even know how it’s transformed. And when it comes out, it’s not always aggregated in a way that’s useful or productive – especially for companies with atypical business models (think two-sided marketplaces, Media companies or SaaS platforms).

On the other hand, there are clear benefits to owning these steps in the data journey. If we look at the advantages of building your own data model alone:

- The data can be made available in a structure that’s highly relevant to the consumer, driving increased productivity;

- The data can be explored at a high level or in granular detail depending on what is required;

- Internal teams can self-serve from existing data models, rather than relying on analysts to wrangle and stitch data from siloed pockets;

- The data model can apply logic that accurately reflects the unique needs of the business;

- Everyone in the organization can unify around a common data source / single source of truth, ensuring consistency and trust in the data throughout the company.

Building a modern data stack that puts you in control of your data and your transformation unlocks limitless possibilities. It not only means the data is more relevant and therefore more useful to internal data consumers, it means you can deploy powerful use cases – in some cases even entire products – with the data you collect. This has led to the data modeling renaissance.

"The gist is that once you have all the relevant data for each event, which is possible with Snowplow, you can do whatever you want with it. Snowplow's importance will only continue to grow as we customize our pipeline" – Rahul Jain, Principal Engineering Manager at Omio

The data modeling renaissance

We are witnessing what could the early signs of a golden age for data modeling. It’s a time where new tools, discussions, communities and new job roles are beginning to burst onto the scene in the data landscape at a frantic pace.

This has given rise to the Analytics Engineer, who (as the name suggests) sits between data engineering and analytics as a person responsible for transforming data to empower data consumers. These practitioners are crucial in the middle layer between ingestion and reporting. They are key to ensuring their internal teams can explore clean, actionable data sets within their Business Intelligence (BI) tools of choice, and the data setup cannot run without them.

"Analytics engineers provide clean data sets to end users, modeling data in a way that empowers end users to answer their own questions. While a data analyst spends their time analyzing data, an analytics engineer spends their time transforming, testing, deploying, and documenting data" – What is Analytics Engineering by dbt Labs

The rise of data modeling has also led to widespread adoption of data transformation tools. These solutions enable Analytics Engineers and others to take ownership of their ‘middle layer’ by transforming data directly in the data warehouse, mostly using SQL as their primary querying language.

First among these tools is dbt, a data transformation solution that removes the blockers in the data democratization process and gives data teams the ability to develop, test, deploy, and troubleshoot data models at scale.

Tools like dbt are the driving force that make Analytics Engineering possible – the translators of the data age – who can take data in its raw format and transform it into those relevant, helpful ‘chunks’ that Marketing and Product teams need. But achieving this can be easier said than done.

It’s not always easy to get it right

For all the advantages of owning the transformation process, modeling your data in this way is a real skill that requires a deep understanding of the business and the data you’re working with.

Designing and building data models, especially for the unique needs of a particular team within a complex business, can be challenging. It’s why less data mature companies are happy to let a tool model their data for them. And while that may be suitable in the short term, in the longer term, it makes far more sense to take ownership of this critical process especially as more emphasis is placed on using data to make crucial business decisions.

In our next chapter we’ll explore how exactly an analyst or data engineer can get started designing data models for their business and outline best practices for this important process.