Introducing Spark Support for Snowplow's dbt Models: Enhancing Data Lakes

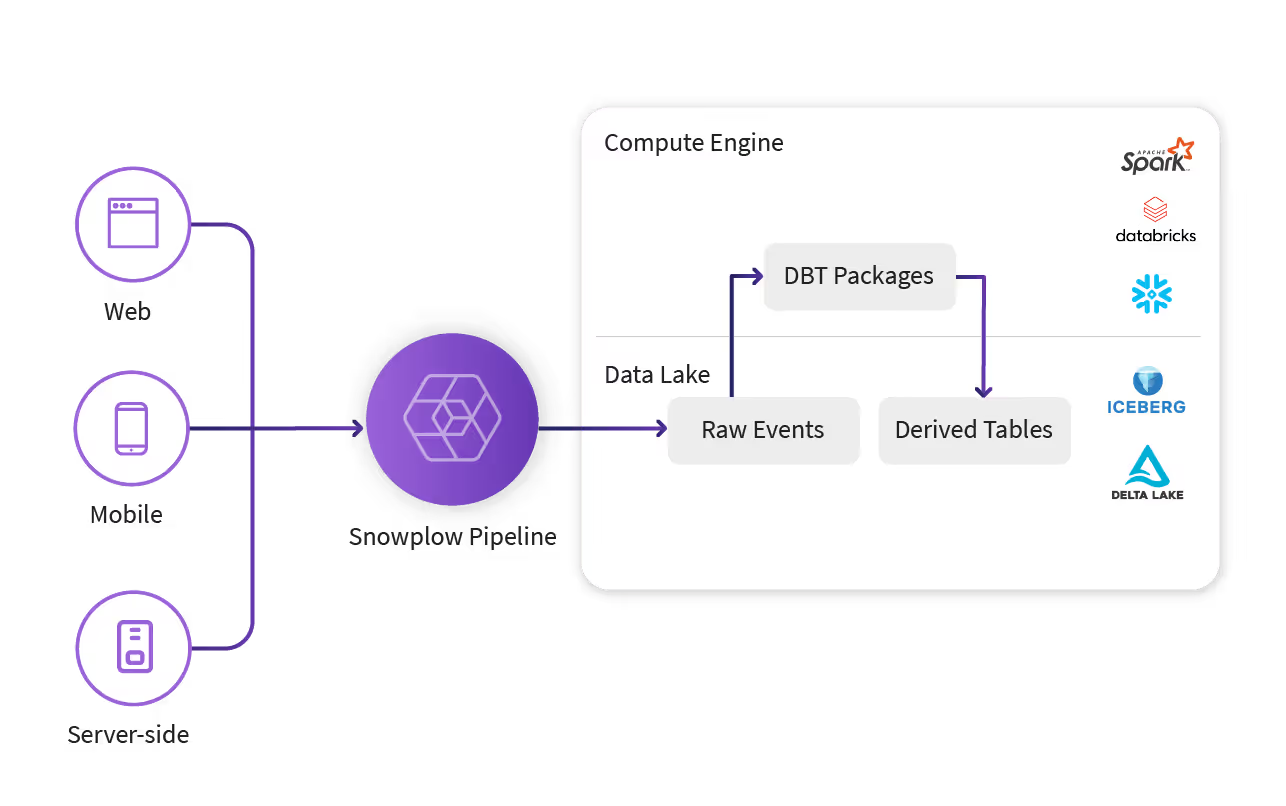

We are thrilled to announce the release of Apache Spark support for Snowplow's dbt models, marking a significant leap forward in behavioral data management within data lake environments. This new feature empowers organizations to manage and process vast volumes of data, unlocking valuable behavioral insights at scale without increasing operational costs.

The Evolution of Data Architecture

As businesses generate increasing volumes of data, the data management landscape will continue to evolve. Organizations are starting to adopt modern architectures that offer greater scalability and cost-effectiveness while maintaining high performance. At the forefront of this evolution are data lakes and supporting technologies like Apache Iceberg.

Data Lakes: The Foundation of Modern Data Architecture

Data lakes represent a modern approach to storing raw data in cost-effective cloud storage such as Amazon S3 or Google Cloud Storage (GCS). This architecture uses distributed computing frameworks like Apache Spark to transform raw data into cleaned and aggregated tables. These tables can then power BI dashboards and AI workloads, offering a model that not only reduces costs but also provides greater flexibility and control over data architecture.

Apache Iceberg: Enhancing Data Lake Capabilities

Within this ecosystem, Apache Iceberg is emerging as a popular open table format that is designed for large analytic datasets in data lakes. Iceberg adds a layer of structure and advanced features to the data lake environment, including:

- Robust metadata management

- Schema evolution

- Time travel and versioning

- ACID transactions

- Improved query performance

Apache Spark: Distributed Scalable Computation Framework

Apache Spark complements the data lake and Apache Iceberg ecosystem by providing an open-source distributed computation framework capable of handling large-scale data processing with speed and efficiency. As organizations transform raw data into actionable insights, Spark’s ability to process data both in-memory and across a distributed environment enables faster analytics and more advanced machine learning applications.

The Best of Both Worlds: Snowplow's dbt Models with Spark

Snowplow has built a suite of world-class dbt packages for transforming raw event data into useful derived tables such as users, sessions, and views. These models handle complex data processing challenges like event deduplication, sessionization, and identity resolution.

We recently introduced our Lake Loader for loading Snowplow events in near-real time into a data lake in major formats, including Hudi, Delta, and Iceberg. Now, with the introduction of Spark support for Snowplow's dbt packages, we've connected the dots, allowing you to run our models directly on data lakes.

By integrating Spark into this process, customers can scale their data transformations to handle even the largest datasets, all while benefiting from the cost efficiencies and flexibility of a data lake architecture.

“Before the Spark support for Snowplow's dbt models was released, we faced challenges with patching the models to run on Spark, leading to compatibility issues. However, after migrating to the new Spark version, our deployment experience has vastly improved. The migration streamlined our workflows, allowing us to run our dbt models on Spark seamlessly and focus on deriving insights rather than troubleshooting compatibility problems." - Rock Lu, Software Engineer, Rakuten

While Iceberg is currently the only supported Data Lake format for Spark data models, Snowplow customers can also leverage our Databricks dbt models, which run on Delta tables. This dual-format support offers greater flexibility, enabling customers to choose between Delta’s time travel, optimization, and schema enforcement on Databricks, or Iceberg’s open-table format for large-scale processing with Spark. Snowplow’s integration with both formats allows organizations to maximize the potential of their data lakes, seamlessly transforming their customer behavioral event data into analytics-ready models for AI.

Try It Out Today

Experience the benefits of this powerful combination for yourself. New to Snowplow? Book a demo to learn more about the future of scalable, cost-effective behavioral data management with Snowplow, Spark, and modern data lake architecture.

Are you a current Snowplow customer using Spark and want to streamline your workflows? Check out our latest documentation, allowing you to run our models directly on data lakes.