Improving Snowplow's understanding of time

As we evolve the Snowplow platform, one area we keep coming back to is our understanding and handling of time. The time at which an event took place is a crucial fact for every event - but it’s surprisingly challenging to determine accurately. Our approach to date has been to capture as many clues as to the “true timestamp” of an event as we can, and record these faithfully for further analysis.

The steady expansion in where and how Snowplow is used, driving towards the Unified Log methodology, has led us to re-think our handling of time in Snowplow. This blog post aims to share that updated thinking, and provides background to the new time-related features coming in Snowplow R71 Stork-Billed Kingfisher.

Read on after the jump for:

- A brief history of time

- Event time is more complex than we thought

- Revising our terms and implementing true_tstamp

- Calculating derived_tstamp for all other events

- Next steps

1. A brief history of time

As Snowplow has evolved as a platform, we have steadily added timestamps to our Snowplow Tracker Protocol and Canonical Event Model:

- From the very start we captured the

collector_tstamp, which is the time when the event was received by the collector. In the presence of end-user devices with unreliable clocks, thecollector_tstampwas a safe option for approximating the event’s true timestamp - Two-and-a-half years ago we added a

dvce_tstamp, meaning the time by the device’s clock when the event was created. Given that a user’s events could arrive at a collector slightly out of order (due to the HTTP transport), thedvce_tstampwas useful for performing funnel analysis on individual users - In April this year we added a

dvce_sent_tstamp, meaning the time on the device’s clock when the event was successfully sent to a Snowplow collector

Throughout this evolution, our advice has continued to be to use the collector_tstamp for all analysis except for per-user funnel analysis; in support of this we use the collector_tstamp as our SORTKEY in Amazon Redshift.

While this approach has served us well, two recent developments in how Snowplow is used have caused us to re-think our approach.

2. Event time is more complex than we thought

Two emerging patterns of event tracking have challenged our reliance on the collector_tstamp, and led to our re-think:

2.1 We have events which already know when they occurred

Snowplow users are increasingly using Snowplow trackers to “re-play” their historical event archives into Snowplow through a Snowplow event collector. These archives might be of email-related events from your ESP, or perhaps an S3 extract of your Mixpanel events.

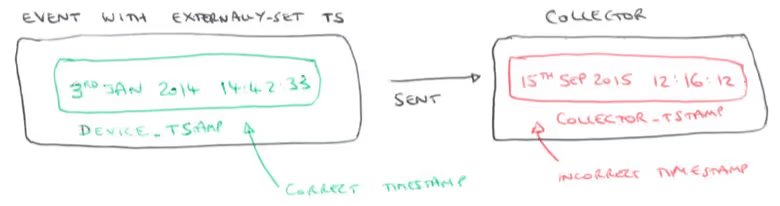

In these cases, the archived events typically already knows when it took place: it already has its “true timestamp”. Conversely, the collector_tstamp is not meaningful - it only reflects when this historical event was re-played into Snowplow.

At the moment, most Snowplow event trackers will let you manually specify a timestamp for an event, but this manual override is stored as the dvce_tstamp, with all of its associations of device clock-related unreliability.

This is highlighted in the diagram below:

2.2 Delayed event sending devalues the collector_tstamp

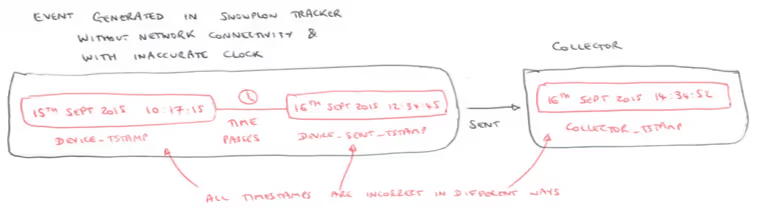

To make Snowplow event tracking as robust as possible in the face of unreliable network connections, we have added support for outbound event caches to our event trackers. The principle is that the tracker adds a new event to the outbound event cache, attempts to send it, and only removes that event from the cache when the collector has successfully recorded its receipt.

The Snowplow JavaScript, Android and Objective-C trackers - three of our most widely used trackers - all have this cache-and-resend capability, as does the .NET tracker.

One of the implications of this approach is that the collector_tstamp is no longer a safe approximation for when a long-cached event actually occurred - for those events, the collector_tstamp simply records when the event finally made it out of the tracker’s cache and into the collector.

This is a challenge, not least because our device-based timestamps are no more accurate than they were before. The problem is illustrated in this diagram:

3. Revising our terms and implementing true_tstamp

The first step in fixing the challenges set out above is to refine the temporal terms we are using:

- Our

dvce_tstampis really ourdvce_created_tstamp - We are incorrectly setting a

dvce_tstampfor events when we know precisely when they happened. We should create a new “slot” for this timestamp to remove ambiguity - let’s call ittrue_tstamp - We are treating

collector_tstampas a proxy for the final, derived timestamp. Let’s create a new slot for this, calledderived_tstamp

Let’s assume that we can update our trackers to send in the true_tstamp whenever emitting events that truly know their time of occurrence. In this case, the algorithm for calculating the derived_tstamp is very simple: it’s the exact same as the true_tstamp:

4. Calculating derived_tstamp for all other events

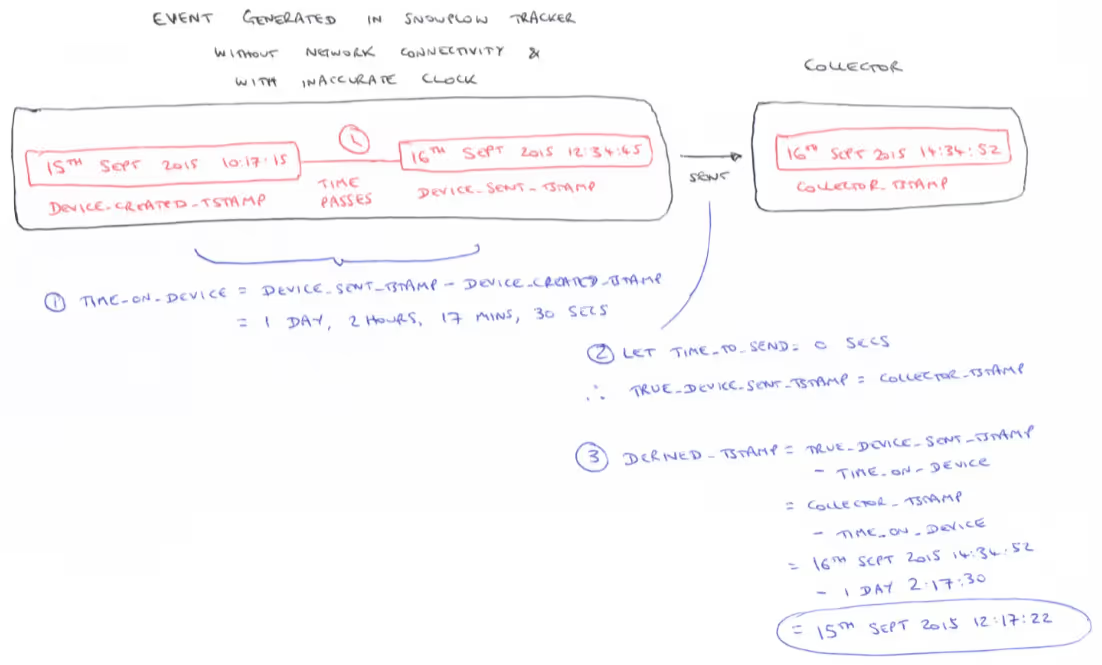

Calculating the derived_tstamp for events which do not know when they occurred is a little more complex. Remember back to the diagram in 2.2 above - all three of the timestamps were drawn in red because they were each inaccurate in their own way.

For us to calculate a derived_tstamp for possibly cached events from devices with unreliable clocks, we need to start by making two assumptions:

- We’ll assume that, although

dvce_created_tstampanddvce_sent_tstampare both inaccurate, they are inaccurate in precisely the same way: if the device clock is 15 minutes fast at event creation, then it remains 15 minutes fast at event sending, whenever that might be - We’ll assume that the time taken for an event to get from the device to the collector is neglible - i.e. we will treat the lag between

dvce_sent_tstampandcollector_tstampas 0 seconds

This now gives us a formula for calculating a relatively robust derived_tstamp, as shown in this diagram:

In other words, this formula lets us use what we know about the event and some simple assumptions to calculate a derived_tstamp which is much more robust than any of our previous timestamps or approaches.

5. Next steps

We have already added true_tstamp into the Snowplow Tracker Protocol as ttm. Next, we plan to:

- Add support for

true_tstampto Snowplow trackers, particularly the server-side ones most likely to be used for batched ingest of historical events (e.g. Python, .NET, Java) - Rename

dvce_tstamptodvce_created_tstampto remove ambiguity - Add the

derived_tstampfield to our Canonical Event Model - Implement the algorithm set out above in our Enrichment process to calculate the

derived_tstamp

Expect new Snowplow tracker and core releases in support of these steps soon - in fact starting with the next release, Snowplow R71 Stork-Billed Kingfisher! And with these steps implemented, we can then start to prioritize our new derived_tstamp for analysis over our long-serving collector_tstamp.

That completes this update on our thinking about time at Snowplow. And if you have any feedback on our new strategies regarding event time, do please share them through the usual channels.