A product analytics case study: using data to inform product development

This case study follows the team behind a photo and video sharing service and the steps they took to develop their product using product analytics.

The initial analysis was focused on identifying where the product was or wasn’t working. The team knew they were spending a lot on acquiring new customers, but their user base wasn’t growing as fast as they expected.

The first question for product analysts: where were users being lost?

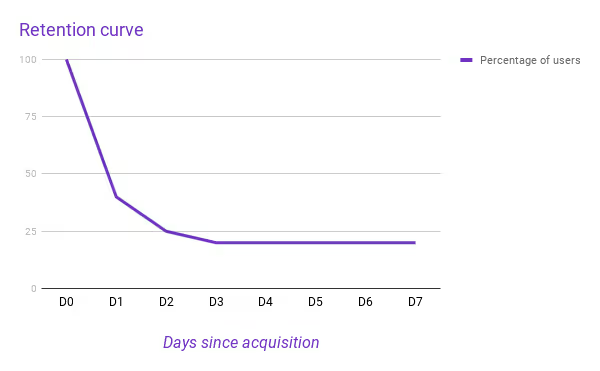

After the first round of analysis on their product data, a retention analysis showed that a huge percentage of users dropped off the product after day one. Here is an approximation of what their retention curve looked like (created with simulated data):

The team could clearly see that the biggest drop-off in users occurred within the first day. A curve like this indicates two things:

- A very large number of users log into the app on day one but then don’t return. Something is going wrong and needs to be explored.

- Users who are still around on day two have very high retention.
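A retention curve like this one can be computed from per-user activity logs. The sketch below is a minimal illustration. It assumes a hypothetical input shape: a mapping from user id to the set of day offsets (0 = sign-up day) on which that user was active. It also uses the simple "active on exactly day N" definition of retention. The team's actual data model and retention definition may differ.

```python
from typing import Dict, List, Set


def retention_curve(active_days: Dict[str, Set[int]], max_day: int) -> List[float]:
    """Fraction of a sign-up cohort active on each day offset 0..max_day.

    active_days (hypothetical shape) maps a user id to the set of day
    offsets (0 = sign-up day) on which that user was active.
    """
    cohort_size = len(active_days)
    if cohort_size == 0:
        raise ValueError("cohort is empty")
    curve = []
    for day in range(max_day + 1):
        # A user counts as retained on `day` only if active on that exact day.
        active = sum(1 for days in active_days.values() if day in days)
        curve.append(active / cohort_size)
    return curve


# Toy cohort: three users never come back after sign-up, two keep returning.
cohort = {
    "u1": {0},
    "u2": {0},
    "u3": {0},
    "u4": {0, 1, 2, 3},
    "u5": {0, 1, 2, 3},
}
curve = retention_curve(cohort, max_day=3)
```

On this toy cohort the curve is [1.0, 0.4, 0.4, 0.4]: a steep first-day drop followed by a flat tail.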

This suggested two distinct types of user journey. In the first, users got past some sort of friction point and then loved the service. In the second, which a large group of users followed, they never made it beyond that point.

Identifying what caused that friction, and removing it, became the team's top priority and a huge opportunity. If the team could get more users to stay with the app past the first day, the overall number of active users would increase significantly. Users who made it past that point tended to stay well beyond three days, so every extra user retained on day two would substantially increase the return on the team's acquisition spend.
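A back-of-the-envelope model makes the payoff from fixing day-one retention concrete. The symbols here are introduced only for illustration. Let N be the number of users acquired, and let r_1 be the fraction who return after their first day. Let r_{3|1} be the fraction of those returners who are still active after day three. Suppose r_{3|1} stays the same for users rescued by a better first day. That assumption is optimistic, because marginal users may churn faster. Under it, long-term retained users R_3 scale linearly with r_1:

```latex
R_3 = N \, r_1 \, r_{3 \mid 1}
\qquad\Longrightarrow\qquad
\frac{R_3^{\mathrm{new}}}{R_3^{\mathrm{old}}} = \frac{r_1^{\mathrm{new}}}{r_1^{\mathrm{old}}}
\quad \text{(holding } N \text{ and } r_{3 \mid 1} \text{ fixed)}
```

Take purely hypothetical numbers, not the team's figures: N = 10,000 and r_{3|1} = 0.9. Raising r_1 from 0.20 to 0.30 takes R_3 from 1,800 to 2,700 users. That is 50% more retained users for the same acquisition spend, so the cost per retained user falls to two-thirds of its previous level.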

Identifying engagement metrics to solve the day-one retention problem

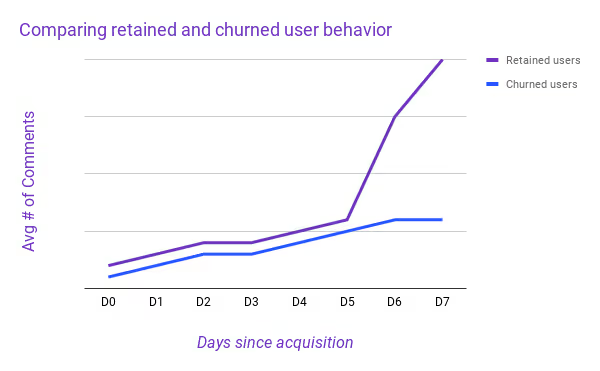

Diving into the service's user data, the team's first step was to separate the journeys of users who retained from those of users who did not. The goal was to surface the key differences that might have influenced this behavior.
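Separating the two journeys can start as simply as splitting users by whether they retained. You then compare how often each group performed a given first-day activity. The record shape and field names below (likes, comments, uploads, retained) are hypothetical, and the data is toy data rather than the team's.

```python
from typing import Dict, List, Sequence, Tuple


def activity_prevalence(
    users: List[Dict[str, int]], activities: Sequence[str]
) -> Dict[str, Tuple[float, float]]:
    """For each activity, return (share of retained users who did it at least
    once, share of churned users who did it at least once)."""
    retained = [u for u in users if u["retained"]]
    churned = [u for u in users if not u["retained"]]
    if not retained or not churned:
        raise ValueError("need at least one retained and one churned user")
    result = {}
    for activity in activities:
        in_retained = sum(1 for u in retained if u[activity] > 0) / len(retained)
        in_churned = sum(1 for u in churned if u[activity] > 0) / len(churned)
        result[activity] = (in_retained, in_churned)
    return result


# Made-up first-day activity counts; "retained" is 1 if the user came back.
users = [
    {"likes": 3, "comments": 2, "uploads": 1, "retained": 1},
    {"likes": 0, "comments": 1, "uploads": 0, "retained": 1},
    {"likes": 5, "comments": 0, "uploads": 0, "retained": 0},
    {"likes": 1, "comments": 0, "uploads": 1, "retained": 0},
]
prevalence = activity_prevalence(users, ["likes", "comments", "uploads"])
```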

They then ran some basic statistical analysis. First, they used binomial regression to quantify the impact of each activity on retention. Then they used a random forest to identify which activities best predict retention and differentiate the two groups.

Once they had identified a list of 'engagement metrics' that appeared to distinguish users who retained from those who didn't, the team wanted to know: "Which metric matters most?"

This analysis made it apparent that comments on photos, rather than likes, were a much more meaningful signal of engagement and of a user's likelihood of retaining.

What did different user types need from their experience?

This engagement insight was only a starting point. Even so, it gave the product team enough of a foundation to start developing alternative sign-up experiences. They then rigorously A/B tested how those changes affected the desired user behaviors and whether they raised day-one retention.
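A two-proportion z-test is one common way to judge such a test when the outcome is a yes/no metric like day-one retention. This is a sketch of that standard test, not necessarily the method the team used, and the counts are made up.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(
    retained_a: int, n_a: int, retained_b: int, n_b: int
) -> Tuple[float, float, float]:
    """Two-sided z-test for a difference in day-one retention between a
    control sign-up flow (A) and a variant (B).
    Returns (absolute lift, z statistic, p-value)."""
    p_a = retained_a / n_a
    p_b = retained_b / n_b
    # Pooled rate under the null hypothesis that both flows retain equally.
    pooled = (retained_a + retained_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Made-up counts: 400 of 2,000 control users vs 480 of 2,000 variant users
# returned after day one.
lift, z, p_value = two_proportion_z_test(400, 2000, 480, 2000)
```

The normal approximation assumes reasonably large samples in both arms. The test also presumes users were randomly assigned to the two sign-up flows.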

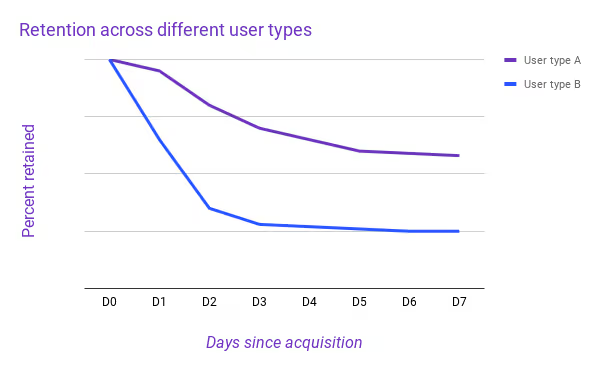

From the beginning, the team had a hunch that for their product, additional segmenting of the analyzed behaviors would be necessary. To achieve this, they needed to look at the different user types individually: parents, for example, exhibit quite different behaviors on the app (primarily driving content creation through uploading new photos) when compared to other family members (like grandparents, and other extended family members, who skew more towards consuming content generated by the parents). A quick analysis showed that these different types of users do engage with the app in very different ways:
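Segmenting the same metrics by user type is essentially a group-by. The source does not say how the team classified users, so the user_type labels and the uploads, views, and retained_day1 fields below are hypothetical. The data is toy data.

```python
from collections import defaultdict
from typing import Dict, List


def segment_summary(users: List[dict]) -> Dict[str, Dict[str, float]]:
    """Per user type: user count, day-one retention rate, and the share of
    the segment's uploads + views that are uploads."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    for user in users:
        groups[user["user_type"]].append(user)
    summary = {}
    for user_type, members in groups.items():
        uploads = sum(m["uploads"] for m in members)
        views = sum(m["views"] for m in members)
        total = uploads + views
        summary[user_type] = {
            "users": len(members),
            "day1_retention": sum(m["retained_day1"] for m in members) / len(members),
            "upload_share": uploads / total if total else 0.0,
        }
    return summary


users = [
    {"user_type": "parent", "uploads": 12, "views": 8, "retained_day1": 1},
    {"user_type": "parent", "uploads": 6, "views": 4, "retained_day1": 0},
    {"user_type": "grandparent", "uploads": 0, "views": 25, "retained_day1": 1},
    {"user_type": "grandparent", "uploads": 1, "views": 4, "retained_day1": 0},
]
summary = segment_summary(users)
```

Once users are split like this, every earlier metric (retention curve, activity prevalence, regression) can be recomputed per segment rather than for the whole user base.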

The team went on to refine all of the initial analysis with this newly developed understanding of their user types and associated behaviors, motivations, and desires.

They looked at how each user type perceived the product and how they could improve the day-one experience for each segment individually.

What does a grandmother, for example, need to experience after signing up to keep coming back to the app?

How is that different for a new father?

Or the keeper of all of a family’s photo albums?


Intelligent questions about the product create actionable results

Armed with this insight, the team has been able to dramatically increase the number of acquired users who stay with the app. This has lowered their customer acquisition cost and enabled them to bring their unique and much-loved service to many more families.

While the questions they asked were specific to them, the process of starting with the business and product first, then asking the right questions, led the team to real and actionable insight that they’ve been able to use to improve their user experience and grow their customer base.

Conducting an A/B test is significantly more complicated than just randomly assigning users to two groups. As we've pointed out, running a truly meaningful experiment requires meticulous planning around which experiment to run, what impact it is expected to have, and which metrics will best capture that impact.
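Deciding up front what impact to expect pays off directly in how many users an experiment needs. The sketch below uses a standard normal-approximation sample-size formula for comparing two proportions, with hypothetical target rates. It shows how the required sample per arm grows as the expected effect shrinks.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(
    p_baseline: float, p_target: float, alpha: float = 0.05, power: float = 0.8
) -> int:
    """Users needed in EACH arm to detect a change in a retention rate from
    p_baseline to p_target with a two-sided two-proportion z-test
    (normal approximation)."""
    if p_baseline == p_target:
        raise ValueError("baseline and target rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_baseline + p_target) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target))
    ) ** 2
    return ceil(numerator / (p_target - p_baseline) ** 2)


# Hypothetical plan: detect day-one retention moving from 20% to 24%.
n_per_arm = sample_size_per_arm(0.20, 0.24)
```

Halving the expected lift roughly quadruples the number of users needed. This is one reason teams running many tests in parallel benefit from sizing each experiment before launch.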

Effective product teams run many A/B tests in parallel each week. It is therefore essential that the process of running the tests, measuring the results, and making the decision whether or not to roll out each product update is as frictionless as possible.