Data Quality Monitoring: Implementing Shift-Left Validation

It’s no revelation that the quality of your data—and how you apply data quality monitoring— impacts your business outcomes. It then comes as no surprise that data quality monitoring has become an essential practice for organizations, especially for those relying on accurate information for decision-making and operations

Despite advances in tooling, organizations continue to lose an average of $13 to $15 million annually due to poor data quality. Plus, it can result in a lead loss of up to 45%.. The impact of this is further pronounced in the case of AI/ML model training, where poor data quality heavily affects model performance, often going unnoticed until in production.

Traditionally, companies have tried to validate data at its eventual destination – the lake or warehouse. Those methods are more expensive and less effective compared to the shift-left approach to data validation. In this approach, problems are caught at the source (i.e. at the point of data creation and collection), rather than after the dataset in the destination has been contaminated. The shift-left approach to data quality monitoring mirrors successful trends in software engineering where strict type-checking has contributed to 40% fewer user-reported bugs in applications.

This post explores practical methods to implement shift-left data quality monitoring, the relevant architectural considerations, and why Snowplow’s Customer Data Infrastructure (CDI) is the leader in this arena.

What is Data Quality Monitoring?

Data quality monitoring refers to the process of verifying non-functional properties of data such as accuracy, completeness, and validity.

In practice, it covers:

- Data Validation: Ensuring data conforms to expected formats;

- Data Health: Searching for anomalies such as gaps in data collection or increased latency;

- Data Implementation Rules: Adhering to logical constraints such as acceptable value ranges.

The Cost of Poor Data Quality

The costs associated with poor data quality are often hidden and compound under the radar. They lead to data misalignment and flawed decisions, which in turn may result in severe strategic mistakes.

We already mentioned how this becomes even more problematic in AI/ML workflows: models trained on incomplete or otherwise invalid data may develop bias and perform inaccurate predictions. It is natural to assume that this can easily lead to customer trust eroding. Furthermore, a model that has been trained on bad data becomes more expensive to retrain after the dataset’s health has improved.

Eventually, you can expect that retroactively cleaning corrupt datasets will result in delayed launches, failed audits, reputational damage and, as a result of all that, substantial negative financial impact.

How Data Quality Monitoring Works

Effective data quality monitoring must evaluate non-functional properties of data (such as accuracy, completeness, and validity) continuously.

The traditional way to monitor data quality is reactive, and happens either with post-ingestion checks in the data lake or warehouse, with batch validation at the ‘T’ step of ETL, or with manual ad-hoc data profiling.

These methods may be simple to implement initially, but as always, the devil is in the detail and there are associated weaknesses. The main of which are latency to detect the issues (leading to compound failures) and resource-intensive remediation after detection.

Snowplow adopts an innovative approach to data quality monitoring, elaborated in the next section.

Architecture Overview

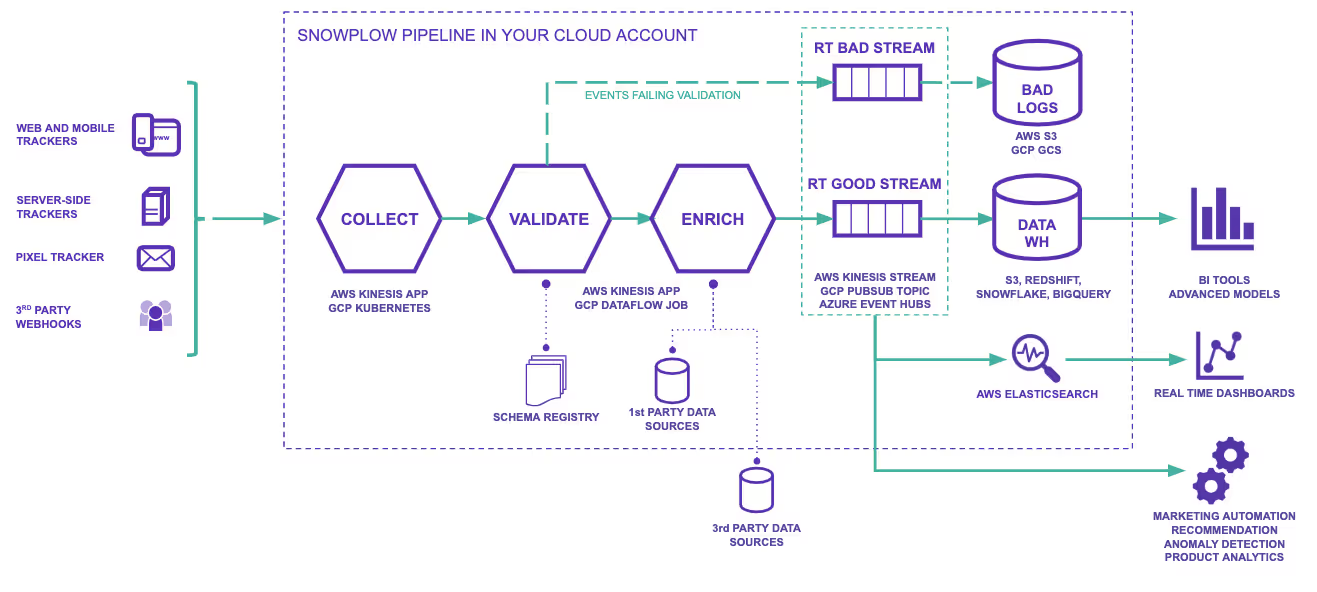

Contrary to the traditional setup, Snowplow’s Shift-Left Validation integrates schema enforcement much earlier.

Payloads are validated against structured schemas at the point of collection, with invalid events quarantined immediately and validation errors surfaced instantly. This ensures that only reliable data enters the enrichment process, and that engineering teams can react before damage spreads.

As such, early validation creates a foundation of trust that enables more sophisticated analysis and reduces maintenance overhead.

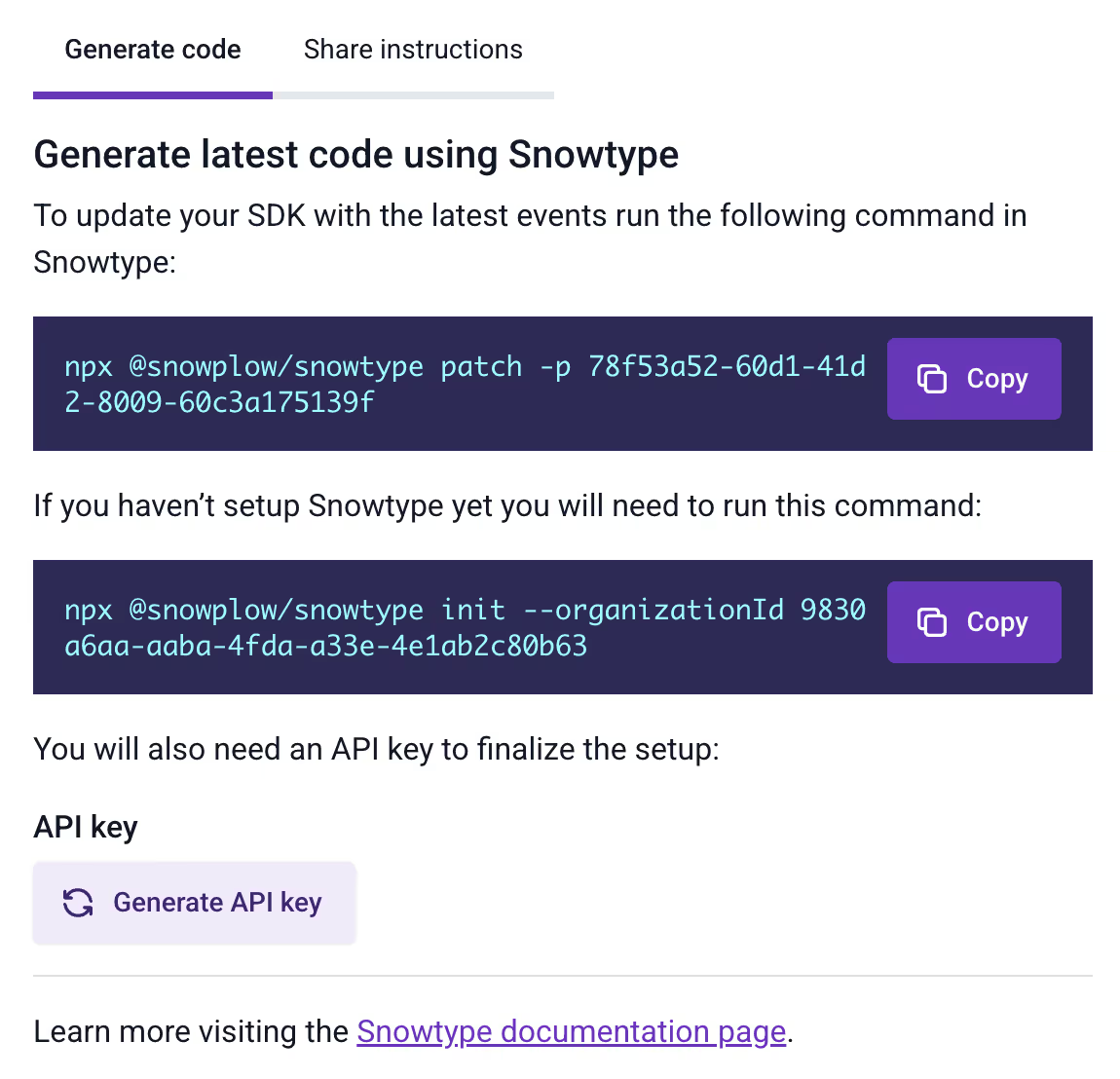

In addition, Snowplow’s Snowtype offers excellent ergonomics to avoid mistakes and ensure high data quality at the source.

Snowtype generates statically-typed definitions for the events to be sent, resulting in warnings inside the developer’s IDE long before problematic code gets deployed. This is yet another way Snowplow “shifts validation left”: not only isolating invalid events early on, but actually trying to avoid their creation altogether.

The isolation of invalid events into a distinct “bad rows” stream provides the forensic traceability that is necessary to diagnose issues and fix them, if and when they occur.

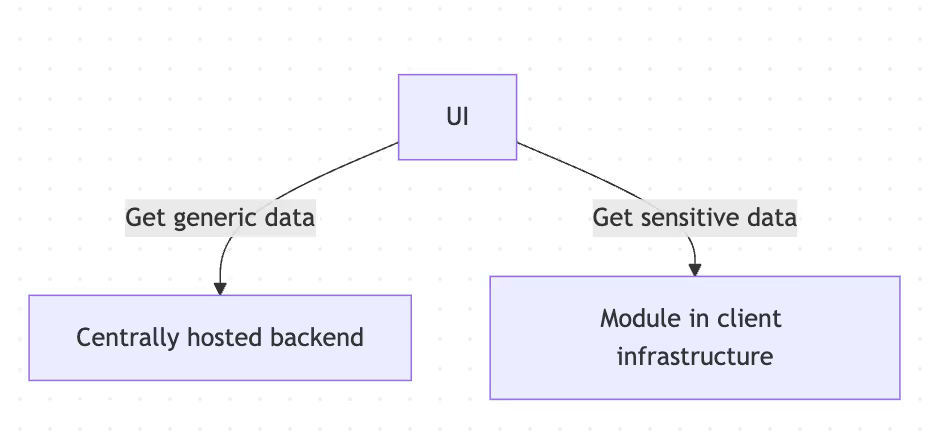

Snowplow recently released its brand new data quality monitoring feature - the Snowplow Data Quality Dashboard. It offers insight to Snowplow customers about their invalid events while maintaining guarantees for private data access.

The Data Quality Dashboard provides a lightweight component that is deployed within your infrastructure to access failed events data locally, while the frontend connects to both this local component and the central Snowplow Console.

This approach maintains security boundaries while providing visibility into data quality issues. At the same time, it relies on the centralized component to perform authentication and authorization, ensuring the same security guarantees are maintained.

Real-World Examples of “Shift-Left” Data Quality in Practice

There is a long history of companies with varying levels of data maturity coming to Snowplow. They often reach out to us with a view to fix schema drift and event structure inconsistencies that have caused schema-related incidents, long retraining cycles of AI/ML models, and poor overall customer experience.

We’ve consistently witnessed their migration to shift-left data quality monitoring yielding reliable datasets and the resulting benefits. Let’s see how you can effectively implement shift-left validation with Snowplow for better data quality.

Implementing Shift-Left Validation with Snowplow

To successfully apply a robust shift-left data quality monitoring strategy with Snowplow, you should implement the following steps:

First, define robust data quality rules. Using JSON Schema, describe every event structure. For instance, an add_to_cart event might require:

{

"$schema": "http://iglucentral.com/schemas/com.snowplowanalytics.self-desc/schema/jsonschema/1-0-0#",

"description": "Schema for a product add-to-cart event",

"self": {

"vendor": "com.example",

"name": "add_to_cart",

"format": "jsonschema",

"version": "1-0-0"

},

"type": "object",

"properties": {

"user_id": { "type": "string" },

"product_id": { "type": "string" },

"quantity": { "type": "integer", "minimum": 1 }

},

"required": ["user_id", "product_id", "quantity"],

"additionalProperties": false

}

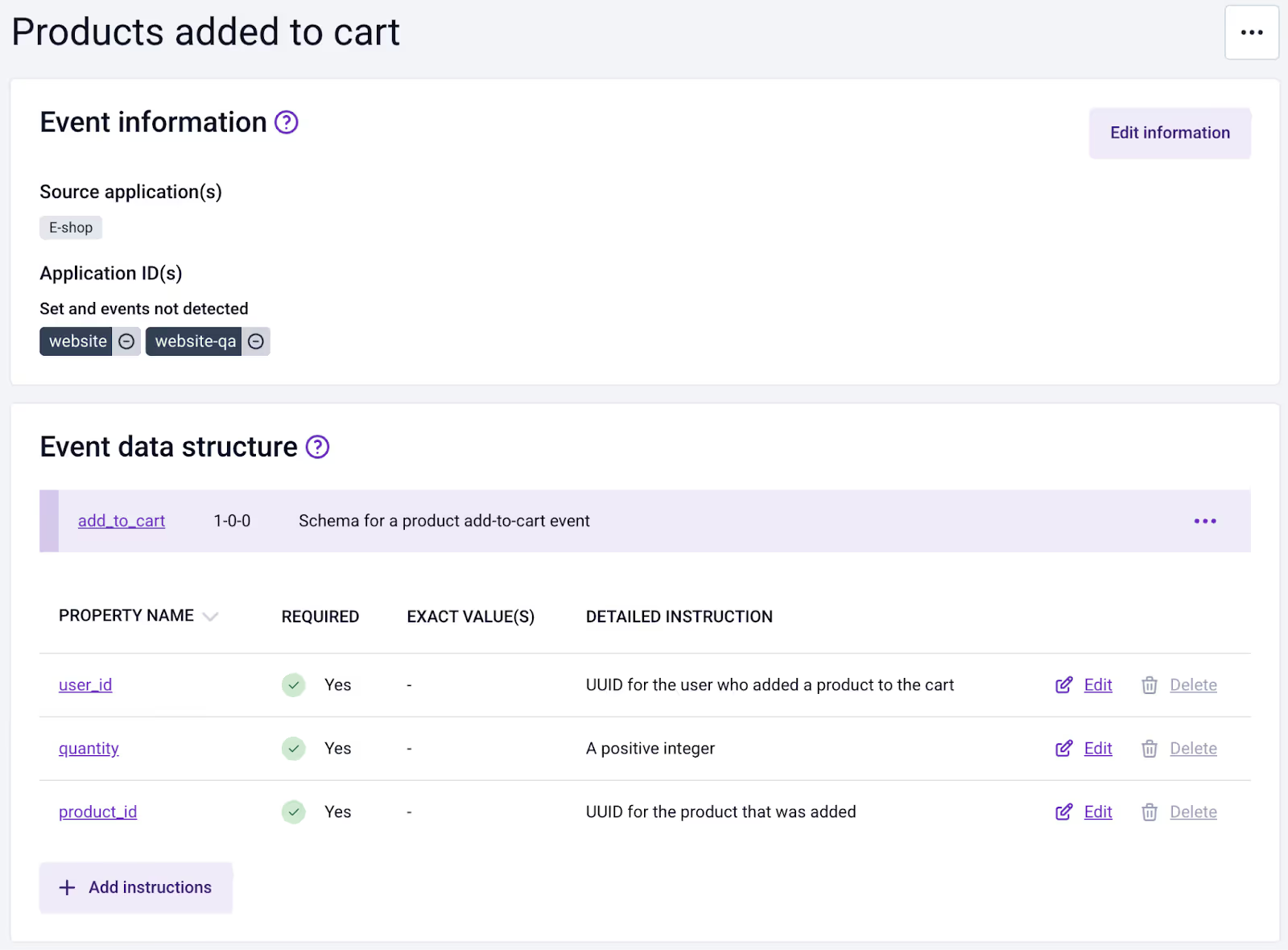

Next, implement a Snowplow Data Product that uses this schema as part of an event specification. Snowplow’s Data Products go a lot further in bringing everyone in an organization together around a coherent data collection strategy, and are the recommended way to design tracking on top of schemas like the one above.

Finally, after the data product is complete, you can generate code with Snowtype to assist in generating the events as code, not as hand-crafted JSON objects.

Customers who are using Snowtype are benefiting from strong type checking and see far fewer invalid events in their tracking.

They also have a chance to confirm that their events are landing in their warehouse as expected, with near-real-time (typically just under 5 seconds) data quality monitoring:

Implementation Challenges with Shift-Left Validation

Adopting a shift-left data quality monitoring approach presents some implementation challenges that you need to keep in mind.

For the biggest part, organizations need to reframe their thinking about data quality and where that control takes place. Plenty of tools (e.g. Heap, Fullstory) follow a “capture everything, clean up later” methodology, which eventually leads to unmanageable datasets and all the grievances we elaborated on previously in this article.

Adjusting to a shift-left approach means that data designers need to be intentional about what they are capturing.

Instrumentation engineers need to be aligned and willing to adopt best practices that, on one hand, reduces the risk for invalid events. And on the other hand, means they will need to apply changes to the way they work.

At Snowplow we are striving to ensure that the ergonomics we offer to both data designers and instrumentation engineers are such that they make the whole process easy and collaborative, and that eventually our customers can enjoy the benefits of high quality data with little disruption.

Conclusion

Effective data quality monitoring is crucial for teams that aspire to build scalable, reliable pipelines that power their analytical, operational, and agentic estates. Proactively validating at the point of collection using shift-left validation reduces cost, complexity, and risk.

Snowplow’s pipeline architecture, with its generated statically-typed tracking code, real-time schema validation, and transparent monitoring, empowers organizations to maintain accuracy, consistency, and reliability across data sources. This provides assurance that agentic, machine learning, analytics, and operational systems are fed with reliable data.

By investing in shift-left validation, organizations turn data quality from a reactive firefight into a proactive engineering strength.

If you’d like to learn more about Snowplow’s data quality monitoring approach, click here to book a demo with us.