Data Pipeline Architecture Patterns for AI: Choosing the Right Approach

Welcome back to our blog series on Data Pipeline Architecture for AI. In part one of the series, we covered why traditional pipelines fall short and the core components needed for AI-ready infrastructure.

Now, we'll compare architectural patterns like Lambda, Kappa, and Unified processing. We'll examine the strengths and limitations of each approach, helping you determine which best fits your organization based on data volume, latency requirements, and team capabilities. We'll also explore how Snowplow's architecture addresses these considerations.

Several architectural paradigms have emerged to handle large-scale data processing for AI:

Lambda Architecture

A Lambda architecture combines a batch layer with a speed (real-time) layer:

- A batch layer processes large volumes of data to produce accurate pre-computed views.

- A speed layer handles new data in real time for low-latency updates.

- Results from both layers are merged at query time.

Advantages: You gain a comprehensive view of your data, combining accuracy of batch processing with low latency of streaming.

Challenges: Maintaining two parallel pipelines increases complexity and can double operational overhead.

Kappa Architecture

A Kappa architecture simplifies things by using a single stream processing pipeline:

- All data (past and present) is treated as a stream.

- The system replays historical data through the streaming layer if needed, without a separate batch layer.

Advantages: A unified codebase reduces maintenance burden, and provides you with simpler architecture.

Challenges: Requires robust streaming infrastructure that can handle large-volume reprocessing and out-of-order events.

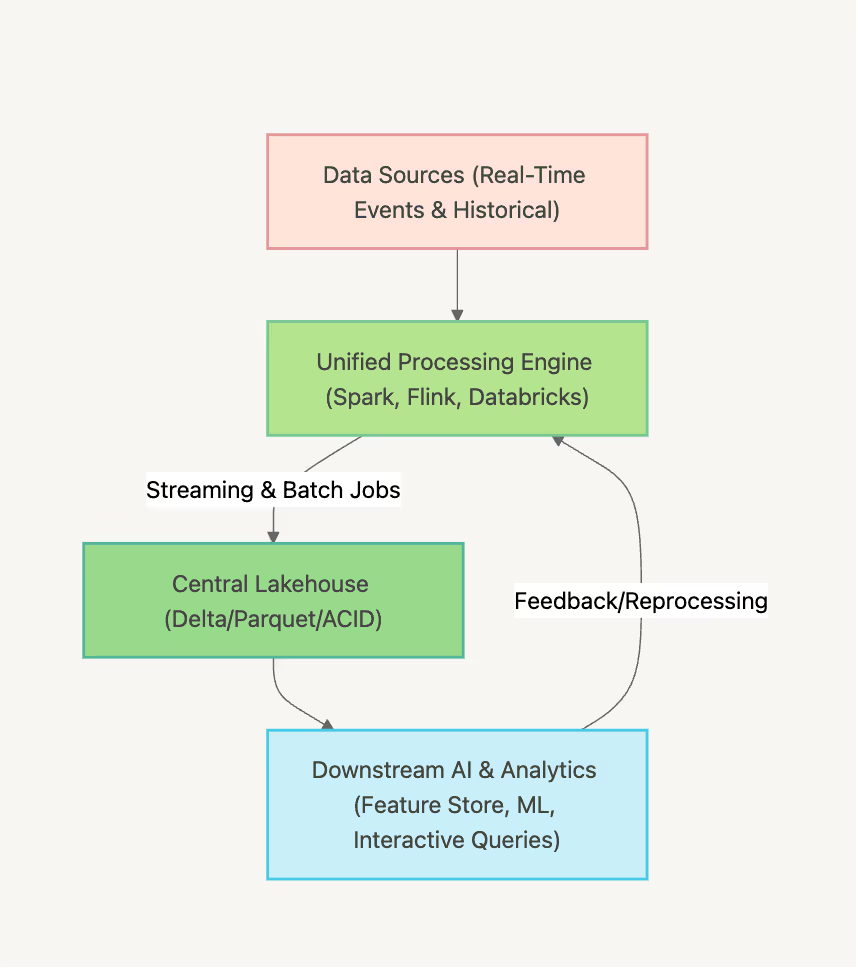

Unified Data Processing

Modern approaches often strive to unify batch and stream processing within the same platform:

- Systems like Apache Spark Structured Streaming aim to blur the line between batch and streaming.

- Data is typically stored in a central lakehouse repository, accessed by both on-demand large jobs and continuous jobs.

Advantages: One set of data pipelines that handles data as it arrives and also handles reprocessing when needed.

Challenges: Often relies on cutting-edge technology that may require careful tuning.

Choosing an architecture

The best option depends on what your organization needs and how advanced it is.

Many companies begin with basic batch pipelines. Later, they might add streaming (Lambda) for real-time updates. As tech and skills grow, they may switch to a simpler, unified system.

Cost and team know-how are important too—streaming can be tricky to handle.

Snowplow’s setup focuses on streaming first (like Kappa/Unified), but it also supports batch recovery by replaying event archives. This gives you flexibility.

Snowplow’s AI-Ready Data Pipeline Architecture

Snowplow offers a modern customer data infrastructure designed to solve the shortcomings of legacy pipeline architectures. The technology also checks data strictly when it’s collected and tracks user actions reliably. This creates high-quality, consistent datasets for AI.

Here are key ways Snowplow’s architecture aligns with AI pipeline needs:

Schema Validation at Collection

Snowplow identifies and diverts bad data before it reaches your storage. It checks every event against a set schema (using its Iglu registry) as soon as it’s collected. This prevents schema problems later, letting only good data through.

Unlike older systems that find errors days after batch processing, Snowplow stops erroneous events instantly.

Behavioral Data Collection Optimized for ML

Snowplow is great at gathering detailed user behavior data—like web, mobile, or server events. It captures specifics, such as user sessions or page details. This organized data is perfect for building features for machine learning (ML).

You can create custom events tailored to your business, like “Product Viewed” or “Added to Cart” for an online store. Later, these can help spot patterns, like where users stop or what they intend to do.

Real-Time Data Quality Monitoring

Snowplow checks data quality as it comes in. You can watch stats like event counts or error rates through the Snowplow interface or your own tools. If something goes wrong—like a bug making a field blank—Snowplow catches it right away with its validation and error stream.

This quick feedback keeps data clean and saves time by fixing problems early, before they mess up your models.

AI-Ready Data Structures

Snowplow’s output fits easily into AI tools. It saves events in clear formats (like JSON or Parquet) with defined schemas. This makes it simple to use with feature stores, data warehouses, or tools like Apache Spark or Pandas.

The self-describing schemas tell other systems how to read the data. For example, you could turn user events into vectors for advanced storage, like a vector database, knowing exactly what each part means.

Scalability and Performance

Snowplow is built to grow with your needs. It works across clouds like AWS, Azure, or GCP, using tools like Kafka or Kubernetes.

The platform can handle small batches (thousands of events per hour) or huge streams (millions per second) for big AI models or real-time use. As data grows, its quality checks and enhancements keep running smoothly by spreading the work across more nodes.

Integration with the Ecosystem

Snowplow connects well with other tools. It sends data to databases, cloud storage, or SaaS platforms. For AI, this means you can stream clean events to a storage bucket for Spark to train models, or to a database like Redshift for instant queries.

Snowplow handles the front end of your AI pipeline—collecting and checking data—while linking smoothly to ML systems for the next steps, like feature building and modeling.

Snowplow’s features enable your pipeline to manage huge amounts of data from different sources quickly, while keeping data quality high and processes simple. Its design supports good data rules and clear monitoring: you get reliable schemas, instant pipeline health updates, and a flexible but organized data setup.

Simply put, Snowplow gives data engineers a strong base to work on modeling and ML features, instead of fixing broken pipelines. It solves typical problems—like schema changes, late checks, or missing details—right away, speeding up AI development and rollout.

And that’s a wrap for blog two. To recap, we’ve compared architectural approaches from the dual-layered Lambda design to streamlined Kappa and modern unified processing frameworks, highlighting how each addresses different needs.

We've also shown how Snowplow's architecture meets AI pipeline requirements through schema validation, optimized data collection, and ecosystem integration.

In our final installment, we'll provide a practical implementation guide with step-by-step instructions, common pitfalls to avoid, and real-world case studies across various industries. Join us for part three to see these concepts in action.