Dealing with duplicate event IDs

The Snowplow pipeline outputs a data stream in which each line represents a single event. Each event comes with an identifier, the event ID, which was generated by the tracker and is—or rather should be—unique. However, after having used Snowplow for a while, users often notice that some events share an ID. Events are sometimes duplicated within the Snowplow pipeline itself, but it’s often the client-side environment that causes events to be sent in with the same ID.

This blogposts covers:

- Is the event ID guaranteed to be unique?

- What are the possible causes?

- Deduplicating the event ID

- Deduplicating the event ID in Redshift

- Deduplicating the event ID in Kinesis

Is the event ID guaranteed to be unique?

Unfortunately not. Most Snowplow users will find that some events share an event ID. The following SQL query returns the distribution of events per event ID:

SELECT event_count, COUNT(*) FROM ( SELECT event_id, COUNT(*) AS event_count FROM atomic.events GROUP BY 1 ) GROUP BY 1 ORDER BY 1

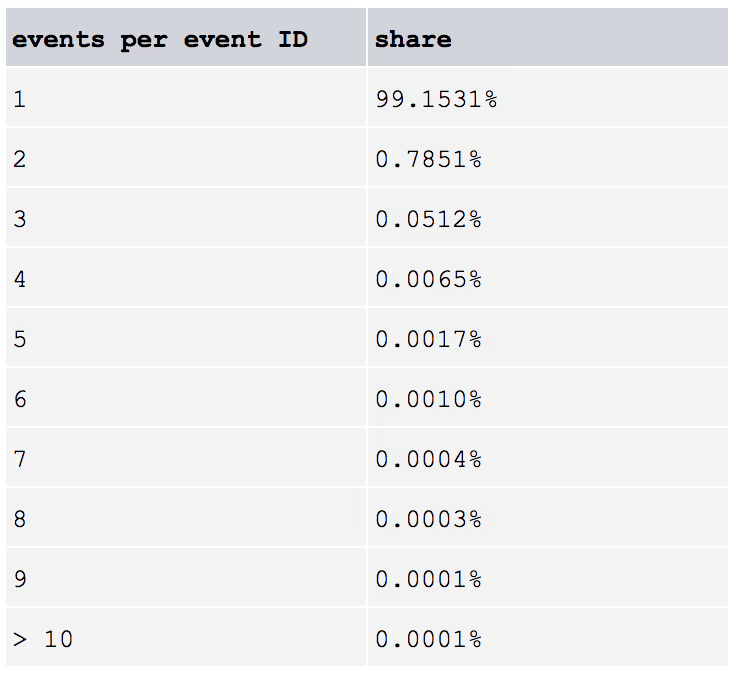

For a typical Snowplow user, and without an additional step that deduplicates the event ID, we expect the distribution to look something like this:

Most events have a unique ID, but the long tail that do not can—in some cases—cause issues. For instance, because unstructured events and contexts are loaded into separate tables in Redshift, users often need to join these tables on event ID. If the event ID is not unique, the result is a cartesian product which can make data modeling harder and slow the cluster down.

What are the possible causes?

We distinguish between endogenous and exogenous duplicates.

Endogenous or first-party duplicates

Endogenous duplicates are sometimes introduced within the Snowplow pipeline wherever our processing capabilities are set to process events at least once. For instance, the CloudFront collector can duplicate events in the batch flow and so can applications in the Kinesis real-time flow (this is discussed in more detail below).

These events are true duplicates in the sense that all client-sent fields are the same, not just the event ID. These fields include, for example, the user ID and the device timestamp, but not the collector and ETL timestamp (which are not related to the actual event but describe what happened after it was sent). To deduplicate these events, delete all but the first event. This should happen at the point of consumption when no more new duplicates can be introduced.

Exogenous or third-party duplicates

Exogenous duplicates are events that arrive at the collector with the same event ID due to some external process duplicating them. This is possible because Snowplow generates the event ID client-side, which allows us to, among other things, distinguish between exogenous and endogenous duplicates.

If all client-sent fields match, the deduplication algorithm would treat these two or more events as endogenous duplicates (i.e. delete all but the first event). The more relevant case is when one or more fields differ. It’s unlikely that these duplicates are the result of ID collisions. The event ID is a UUID V4 which makes it close to impossible for the trackers to generate identical identifiers.

Instead, exogenous duplicates are the result of other software that runs client-side. For instance, browser pre-cachers, anti-virus software, adult content screeners and web scrapers can introduce additional events that also get sent to Snowplow collectors, often with a duplicate event ID. These events can be sent before or after the real event, i.e. the one that is supposed to capture the actual event. Duplicates can be sent from the same device or a different one. These duplicates can also be actual Snowplow events, but with a single event ID. For example, we have come across crawlers that have limited random number generator functionality and generate the same UUID over and over again.

These duplicates share an event ID but one or more client-sent fields are different. In some cases, there is a parent event (the event that is meant to be captured). If it’s unclear which event is the parent event, delete all or move them to a separate stream. If the parent event can be detected, give all other events a new ID and preserve their relationship to the parent event. An alternative is to assign a new event ID to all but the first event and preserve their relationship to the first event.

Deduplicating the event ID

We use a simple algorithm to deduplicate the event ID. When 2 or more events share an ID:

- If all client-sent fields match: delete all but the first event

- If one or more client-sent fields differ:

- either assign a new event ID to all but the first event and preserve their relationship to the first event

- or delete all events

Deduplicating the event ID in Redshift

Update (2015-10-09): Snowplow 72 Great Spotted Kiwi will ship with updated deduplication queries which use the event fingerprint that was introduced in Snowplow 71 Stork-Billed Kingfisher.

Last month, we released Snowplow 69 Blue-Bellied Roller with a new data model that deduplicates the event ID in Redshift. It consists of a set of SQL queries that can be run on a regular basis (for example, after each load) using our SQL Runner application. The queries:

- Deduplicate natural copies and keep them in

atomic.events - Remove other duplicates from

atomic.eventsand insert them toatomic.duplicated_events

This ensures that the event ID in atomic.events is unique. The queries can be modified to use different criteria for deduplication and extended to

also deduplicate unstructured events and contexts.

Note that this will remove some events from atomic, some of which might be legitimate. If this causes issues with reporting, we recommend either not to implement these queries or to customise the deduplication logic.

Let’s run through the queries. First, we list the event IDs that occur more than once in atomic.events:

CREATE TABLE duplicates.tmp_ids_1 DISTKEY (event_id) SORTKEY (event_id) AS (SELECT event_id FROM (SELECT event_id, COUNT(*) AS count FROM atomic.events GROUP BY 1) WHERE count > 1<span class="p">);

We use this list to create a table with all events that don’t have a unique event ID:

CREATE TABLE duplicates.tmp_events DISTKEY (event_id) SORTKEY (event_id) AS ( SELECT * FROM atomic.events WHERE event_id IN (SELECT event_id FROM duplicates.tmp_ids_1) OR event_id IN (SELECT event_id FROM duplicates.events) GROUP BY 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125 <span class="p">);

The GROUP BY clause combines endogenous duplicates into a single row if all columns are equal. Note that this step might take a while when the absolute number of duplicates is large. It’s also possible to be less strict, and combine events when all client-sent fields are equal. We are introducing an event fingerprint which will make it easier to spot endogenous duplicates.

Next, we list the event IDs that have now become unique:

CREATE TABLE duplicates.tmp_ids_2 DISTKEY (event_id) SORTKEY (event_id) AS (SELECT event_id FROM (SELECT event_id, COUNT(*) AS count FROM duplicates.tmp_events GROUP BY 1) WHERE count = 1<span class="p">);

The last step is wrapped in a transaction to ensure that either all or none of the queries get executed.

First, it deletes the original duplicates from atomic.events. Then it inserts the deduplicated natural copies back into the main table, provided that the event ID is not also in atomic.duplicated_events. The remaining events are moved to atomic.duplicated_events.

BEGIN<span class="p">; DELETE FROM atomic.events WHERE event_id IN (SELECT event_id FROM duplicates.tmp_ids_1) OR event_id IN (SELECT event_id FROM duplicates.events<span class="p">); INSERT INTO atomic.events ( SELECT * FROM duplicates.tmp_events WHERE event_id IN (SELECT event_id FROM duplicates.tmp_ids_2) AND event_id NOT IN (SELECT event_id FROM duplicates.events) <span class="p">); INSERT INTO duplicates.events ( SELECT * FROM duplicates.tmp_events WHERE event_id NOT IN (SELECT event_id FROM duplicates.tmp_ids_2) OR event_id IN (SELECT event_id FROM duplicates.events) <span class="p">); COMMIT<span class="p">;

Deduplicating the event ID in Kinesis

The next step is to bring the deduplication algorithm to Kinesis. We plan to partition the enriched event stream on event ID, then build a minimal-state deduplication engine as a library that can be embedded in KCL apps. The engine will not be stateless because it needs to store event IDs and fingerprints in DynamoDB to deduplicate across micro-batches.

The Amazon Kinesis Client Library is built with the assumption that all processes have to be processed at least once, which was the main idea behind check pointing mechanism. This guarantees that no data is missed, but doesn’t ensure single record processing. Deduplication as a KCL application won’t work for endogenous duplicates, because the app itself can introduce endogenous duplicates. You will therefore have to embed the deduplication library in each app that cares about there being no duplicates.

Note that the ElasticSearch sink for the

Kinesis flow takes a “last event wins” approach to duplicates. Each event is upserted into the ElasticSearch collection using the event ID, later duplicates will thus overwrite earlier ones.