A Detailed Look At Tracking with Snowplow

The goal of this guide is to explain Snowplow from the perspective of someone designing data collection.

While Snowplow comprises a vast technical estate with the data pipeline, tracking SDKs, data models, managed offerings, etc, these concepts will only be briefly explained here and only for the purposes of explaining tracking design better.

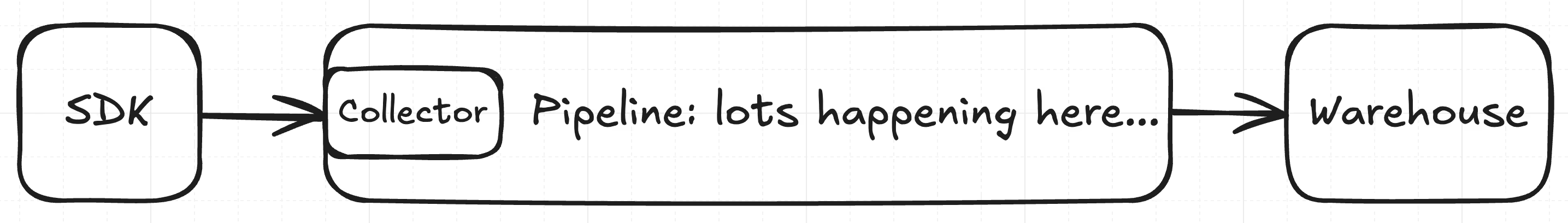

From 10k ft, Snowplow enables the collection of intentionally-defined, well-structured behavioral data, "events", submitted by tracking SDKs (in-browser, in-app, or server-side) to the pipeline's collector.

After examining an event’s structure and content and overall data content, the pipeline makes sure that if its valid it will be persisted to the configured data warehouse ready for consumption by SQL-executing data models. Otherwise (if invalid), it will be stored in a different place to be reprocessed.

Snowplow's highly sophisticated distributed technology guarantees that if an event reaches the pipeline's entry point, it will not be lost. Even if the pipeline becomes unavailable, tracking SDKs' caching provides a sensible buffer to help avoid data loss.

This guide focuses on the parts related to intentional event structure definitions, validation of compliance for incoming events, and how it all comes together with autogenerated tracking facilities.

The Event

The unit of tracking is the Snowplow Event. It is a JSON payload with dozens of canonical properties that tracking SDKs add according to their default or customized configuration.

It contains information such as screen size, ID of the application that submitted the event, OS name, and more. It also contains foundational properties that define what the event is, i.e. identifying information that enables processing of events in the warehouse.

There are three different ways an event can be identified, depending on its exact Snowplow event type:

"Baked-in" events

There are 4 such baked-in events, although 2 of them are considered legacy and Snowplow advises against using them:

- Page views (

page_view) mark a browser visiting a web page - Page pings (

page_ping) are essentially heartbeats that a browser periodically sends to the pipeline while the user is "on" a web page. - (Legacy) Transaction (

transaction), indicating that an e-commerce transaction occurred - (Legacy) Transaction item (

transaction_item) that matches the items in a transaction event above.These four events have no identifying information other than their name.

"Structured" events

These events have a strictly defined, non-extensible structure. They were Snowplow's first effort to attach additional context to an event, and have essentially been superseded by Self-Describing events that we'll see right next.

A structured event comes with five possible properties, three of which are optional. The ones that cannot be omitted are category, which defines a group of events (e.g. ones that match a particular activity, or a particular section of a website) The other is action which is the actual thing that happened - equivalent to an event's name, in practice.

Additional optional properties are: label which can add an ID that's specific to the customer's application; property that typically defines the subject of the action; and value that usually quantifies the action on said subject.

Many organizations feel there are constraints to using Structured events. They can be restrictive in terms of the volume of information one can fit there, they make it hard to relay semantics of an event, and also the pipeline only validates the types of their fields, nothing else. Enter self-describing events.

"Self-Describing" events

This is the recommended way to design your tracking. Self-describing events are events that identify themselves not simply in terms of a name (which may or may not be well defined), but rather in terms of a reference to an event shape (schema) that they comply with. They do so by using the same identifiers that the schema itself uses, those identifiers being:

- The name of the event (

name) - A namespace that acts as a grouping mechanism (

vendor) - The event's format, for the time being the only supported value is

jsonschema - The schema's version using

SchemaVernotation, e.g.1-0-0. Just like major/minor/patch inSemVerstyle versioning,SchemaVerconsists of model/revision/addition numeric identifiers.

A model change is a breaking one; think of the kind of change that would require a new table in a relational database. Revisions are a bit fuzzier and indicate that change may cause problems interacting with some historical data. Contrary to that, additions indicate that the change is fully compatible with existing data.

For the rest of this guide, without loss of generality we will assume all tracking (except for page views and page pings) takes place exclusively with self-describing events.

Schemas and data structures

In Snowplow nomenclature, the terms Schema and Data Structure are often used interchangeably.

This isn't fully accurate as a Data Structure isn't versioned and refers to the concept of the thing that is being tracked. For instance, a purchase of a product is always the same concept for an e-commerce website, independent of the exact shape of the respective tracking event and how it has evolved over time.

As such, a schema can be thought of as the normative description of a data structure at some point in time.

It was already mentioned in the previous section that the only format currently supported for schemas is jsonschema v4 (not the full specification, though).

By way of an example, below follows the content of the schema with vendor com.snowplowanalytics.mobile, name application_lifecycle, and version 1-0-0 as retrieved from iglucentral.com – Snowplow's public schema registry. This schema also has a URI that makes it uniquely addressable: iglu:com.snowplowanalytics.mobile/application_lifecycle/jsonschema/1-0-0.

{

"description": "Entity that indicates the visibility state of the app (foreground, background)",

"properties": {

"isVisible": {

"description": "Indicates if the app is in foreground state (true) or background state (false)",

"type": "boolean"

},

"index": {

"description": "Represents the foreground index or background index (tracked with com.snowplowanalytics.snowplow application_foreground and application_background events.",

"type": "integer",

"minimum": 0,

"maximum": 2147483647

}

},

"additionalProperties": false,

"type": "object",

"required": [

"isVisible"

],

"self": {

"vendor": "com.snowplowanalytics.mobile",

"name": "application_lifecycle",

"format": "jsonschema",

"version": "1-0-0"

},

"$schema": "<http://iglucentral.com/schemas/com.snowplowanalytics.self-desc/schema/jsonschema/1-0-0#>"

}

The event shape that this schema enforces is that of an object with a non-optional property, isVisible, of type boolean; and an optional property, index, of type integer.

Both properties and the schema itself are documented with their respective descriptions. No more properties are allowed to be sent with an event that complies with this schema; i.e. if there is a third property included in the event, it will be considered invalid.

To track this event, you need to instrument the Snowplow tracker you are using to create an instance of it and submit it to your pipeline's collector. For someone using the Javascript tracker, this would typically look as follows:

import { trackSelfDescribingEvent } from '@snowplow/browser-tracker';

trackSelfDescribingEvent({

event: {

schema: 'iglu:com.snowplowanalytics.mobile/application_lifecycle/jsonschema/1-0-0',

data: {

isVisible: true

}

}

});

So, what does a Snowplow event that complies with this schema look like when reaching the warehouse at the end of the pipeline?

The easiest way to see this is by looking at a document loaded into Elasticsearch, by the respective loader (this is a legacy Snowplow loader, not recommended for production workloads).

There is an envelope that contains the canonical properties, and a property for the content that complies with the schema:

{

"v_collector": "ssc-2.8.2-kinesis",

"network_userid": "d1af89d8-42a0-4b81-9c8b-a6e99feb2af5",

"page_referrer": null,

[...dozens more canonical properties...]

"unstruct_event_com_snowplowanalytics_mobile_application_lifecycle_1": {

"isVisible": true,

}

}

In Redshift, you would have a table for all the canonical properties, and a separate table for the content of the event—that latter table having a foreign key to the former.

In all other supported warehouses there is a single table (called the atomic events table) that contains all canonical properties and the event itself–the latter modeled as an additional column, its name being unstruct_event_com_snowplowanalytics_mobile_application_lifecycle_1.

Unstruct event is the unfortunate naming that Snowplow originally chose for self-describing events (we are not using that terminology anymore, but it still lives in the naming of the property that carries the event as illustrated above).

You can see the vendor, in com_snowplowanalytics_mobile, and the name in application_lifecycle. The _1 postfix is the model version, i.e. the first number of the three in the schema's SchemaVer-formatted version.

As already mentioned, a model version implies a breaking change, and the Snowplow machinery knows to create new tables or new columns when an event's model changes.

For instance, if there are incoming events for iglu:com.snowplowanalytics.mobile/application_lifecycle/jsonschema/1-0-0 and at some point the pipeline starts receiving events for iglu:com.snowplowanalytics.mobile/application_lifecycle/jsonschema/2-0-0, that will trigger the creation of a new table (in Redshift) or a new column (in all other supported warehouses), named as something like unstruct_event_com_snowplowanalytics_mobile_application_lifecycle_2.

Context (a.k.a. Entities)

One of Snowplow's superpowers is its ability to describe complex relationships between elements of the data it captures.

Those relationships come in the form of context for the events that are being tracked. The typical example is the context of an ecommerce purchase, which is carried out by a user who buys a product. In this case, user and product constitute the context to the purchase event.

The technical representation of this capability is the augmentation of an event with an array of similarly-formatted, self-describing JSON payloads that are normatively specified by entity data structures.

In terms of formatting, entities (and their data structures) are no different to events (and their data structures): They look exactly the same, and the pipeline does not recognize them to be different.

You can actually use an entity in the place of an event. However, conceptually they are very different in that an event captures something that happened, an effect; and entities capture qualifying data for the thing that happened.

The way to add that context to an event is, as mentioned above, with an array of such entity payloads. From a tracking implementation perspective, this might look as follows:

import { trackSelfDescribingEvent } from '@snowplow/browser-tracker';

trackSelfDescribingEvent({

event: {

schema: 'iglu:com.snowplowanalytics.mobile/application_lifecycle/jsonschema/1-0-0',

data: {

isVisible: true

}

},

context: [{

schema: 'iglu:com.example/user/jsonschema/1-0-0',

data: {

email: 'someone@somewhere.com'

}

}]

});

What happens above is that we use a hypothetical user data structure and add a self-describing instance of it in the context array.

In its entirety, this event tracking implementation captures a visibility change in a mobile app qualified by the user who is using that app. Predictably, this is reflected in the shape of the event in the warehouse (using Elasticsearch again for this example):

{

"v_collector": "ssc-2.8.2-kinesis",

"network_userid": "d1af89d8-42a0-4b81-9c8b-a6e99feb2af5",

"page_referrer": null,

[...dozens more canonical properties...]

"unstruct_event_com_snowplowanalytics_mobile_application_lifecycle_1": {

"isVisible": true,

},

"contexts_com_example_user_1": [

{"email": "someone@somewhere.com"}

]

}

You can immediately notice that there isn't a single context array anymore, but rather an array for the particular context "type" (i.e. the entity's schema).

This is done by the loader, and in a warehouse like BigQuery or Snowflake you would see a column per entity schema, while in Redshift one would see a table per entity schema.

In a hypothetical scenario where there are two iglu:com.example/user/jsonschema/1-0-0 entities attached, both of those would be included in the contexts_com_example_user_1 property/column/table.

Global application context

In certain cases, you may not want to add context entities in each single event you instrument, simply because all events need to include it.

To improve the ergonomics of tracking in those cases, Snowplow trackers offer what we call “global context”: those entities that must always be there.

For instance, when an application can be used only when authenticated, it only makes sense to add an entity for the active user as global context for all emitted events.

Managing data structures (and schemas)

When looking at Snowplow schemas and data structures from 10k ft, you’ll see three core software components interacting:

Iglu, the schema registry that stores all customer schemas for a pipeline (the IgluCentral ones are automatically available to all customers)Enrich, which reads the schemas from Iglu and validates incoming events based on those schemas, andData Structures API(part of Console's backend) which abstracts away Iglu implementation details (development and production environments) and maintains schema management metadata, such as deployment timestamps.

Our customers tend to interact with the Data Structures API via the Console (either using the graphical editor or the JSON text editor)

They then create and validate a schema, save it in the development environment (i.e. development Iglu), then promote it to the production environment (i.e. production Iglu).

Alternatively, all that can happen using snowplow-cli and a git repository, following a gitflow-like process and the gitops model where merging into a develop branch deploys a validated schema to the development Iglu (via the Data Structures API), and merging into a main branch takes schemas to production using the same mechanics.

After a schema has been promoted to production, it is available for Enrich to read it and validate incoming events that reference that schema.

When this happens successfully, Enrich keeps tracks of volumes for such valid events and the referenced schemas, and periodically emits telemetry including those volumes back to Snowplow.

Such telemetry data is used to drive a number of product features, such as the Tracking Catalog, which can help customer teams discover data that they are already tracking.

The difference is subtle but important: whereas by designing data structures we capture what we intend to track, by looking at the tracking catalog we observe the data that actually flows through the pipeline.

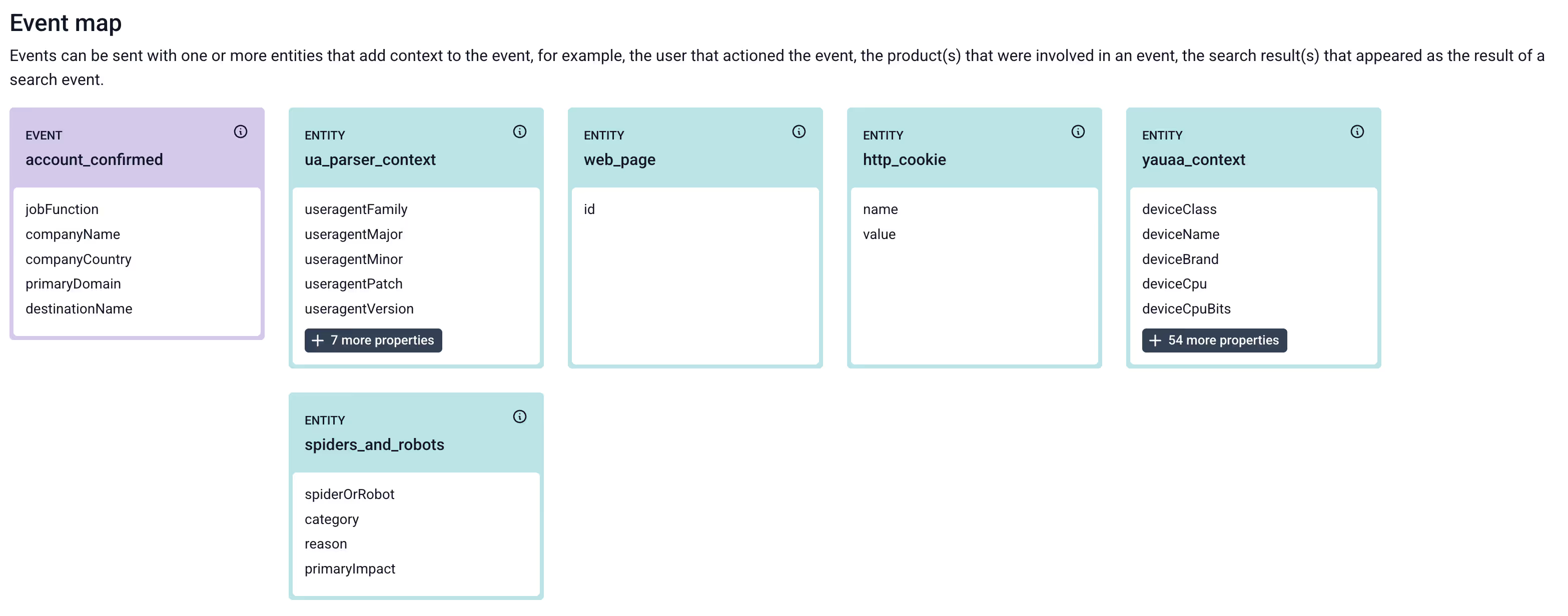

Perhaps more interestingly, we can see how customers are combining the different data structures, bringing together events and entities like in the following screenshot.

Source Applications

Source Applications represent the applications in which you are collecting behavioral data. For example, a particular web application, or a mobile application.

They act as a grouping mechanism for “application IDs” (e.g. mobile-app and mobile-app-qa), and for the entities that constitute global context for that particular application.

Tracking plans on steroids: Snowplow Data Products

With Snowplow Behavioral Data Products, you can create reusable datasets that model data collection for a particular feature, user flow, software estate, etc.

While Data Structures model one specific interaction, Data Products build on Data Structures to model a complete set of interactions into a form of tracking plan.

Data Products aren't just that, though. They take into account the requirements of data-mature companies for distribution of data collection, a la Data Mesh.

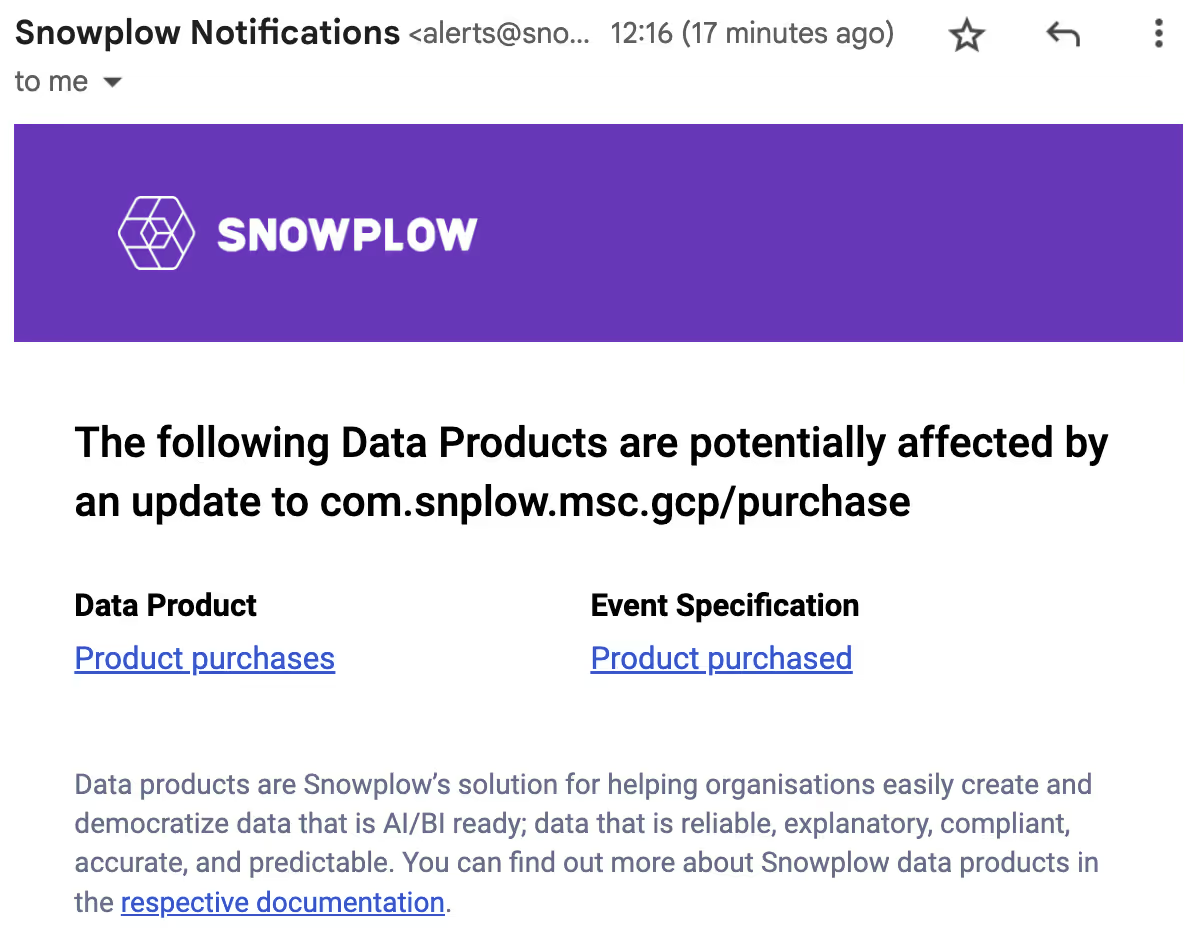

As such, Snowplow Data Products are assigned to an owner and a business area (domain), while also offering workflows around subscriptions. With the latter, it is possible for people interested in the data collected to express that interest (e.g. because they use part of that dataset to drive a dashboard) and be notified automatically when something changes.

Similarly, with subscriptions being visible by the whole organization, a Data Product owner can preemptively reach out to subscribers to discuss upcoming changes, get their opinion on future plans, etc.

Event Specifications

The main building block of a Data Product is the Event Specification. It was already mentioned that Data Products build on Data Structures; the more accurate statement is that Event Specifications build on Data Structures: They combine and specialize them to meet certain constraints.

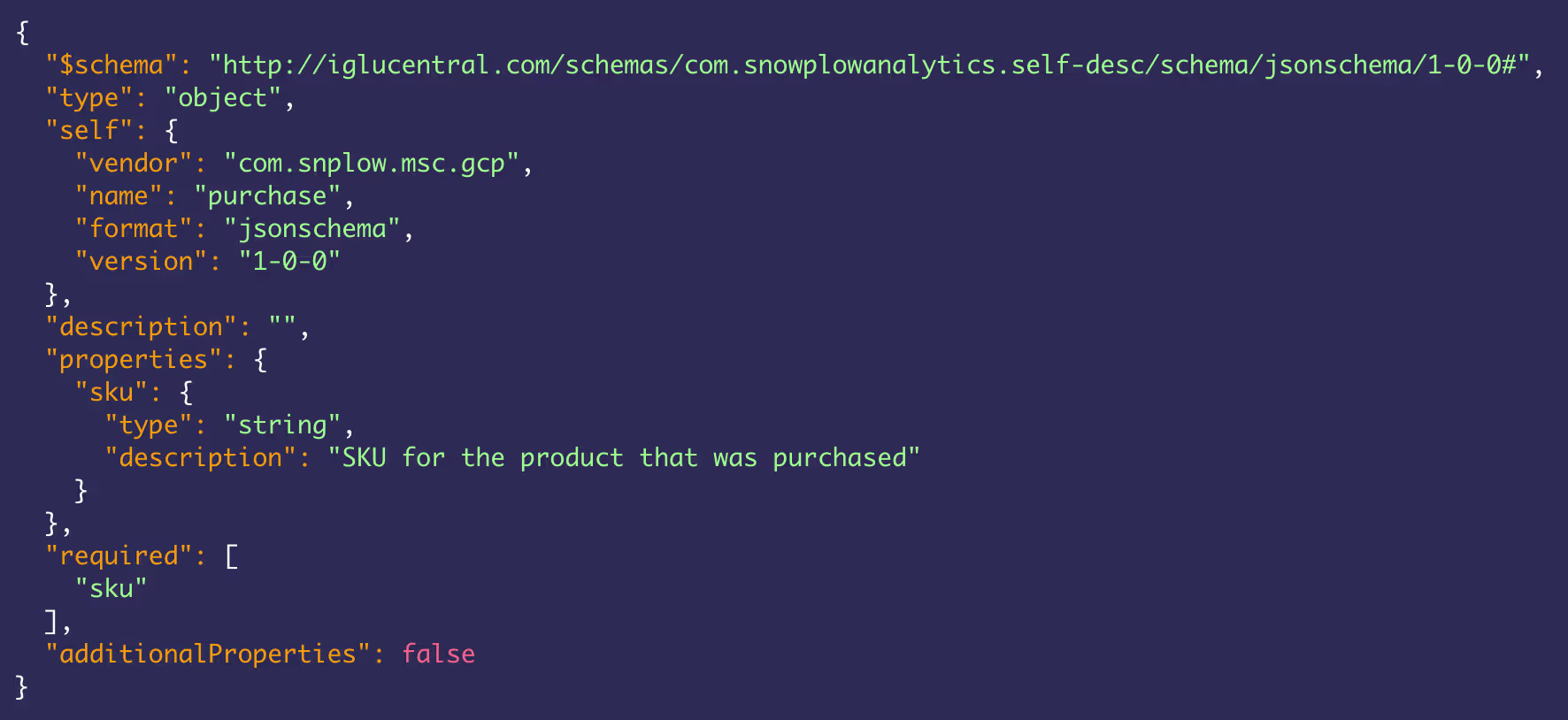

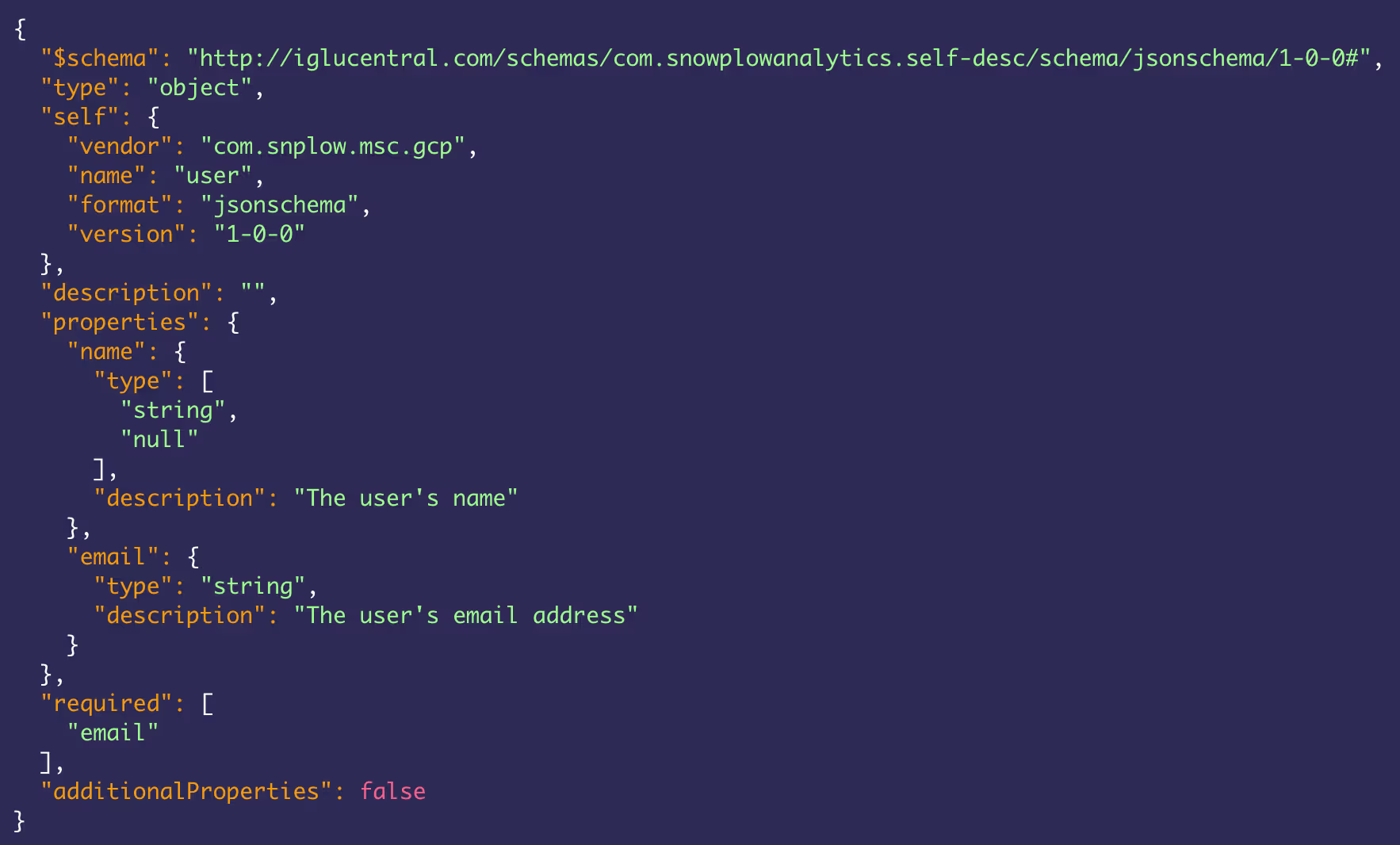

The root of that design lies exactly with the decentralization of data collection. In such setups, there is typically a central data team that creates guardrails to ensure data quality in the warehouse.

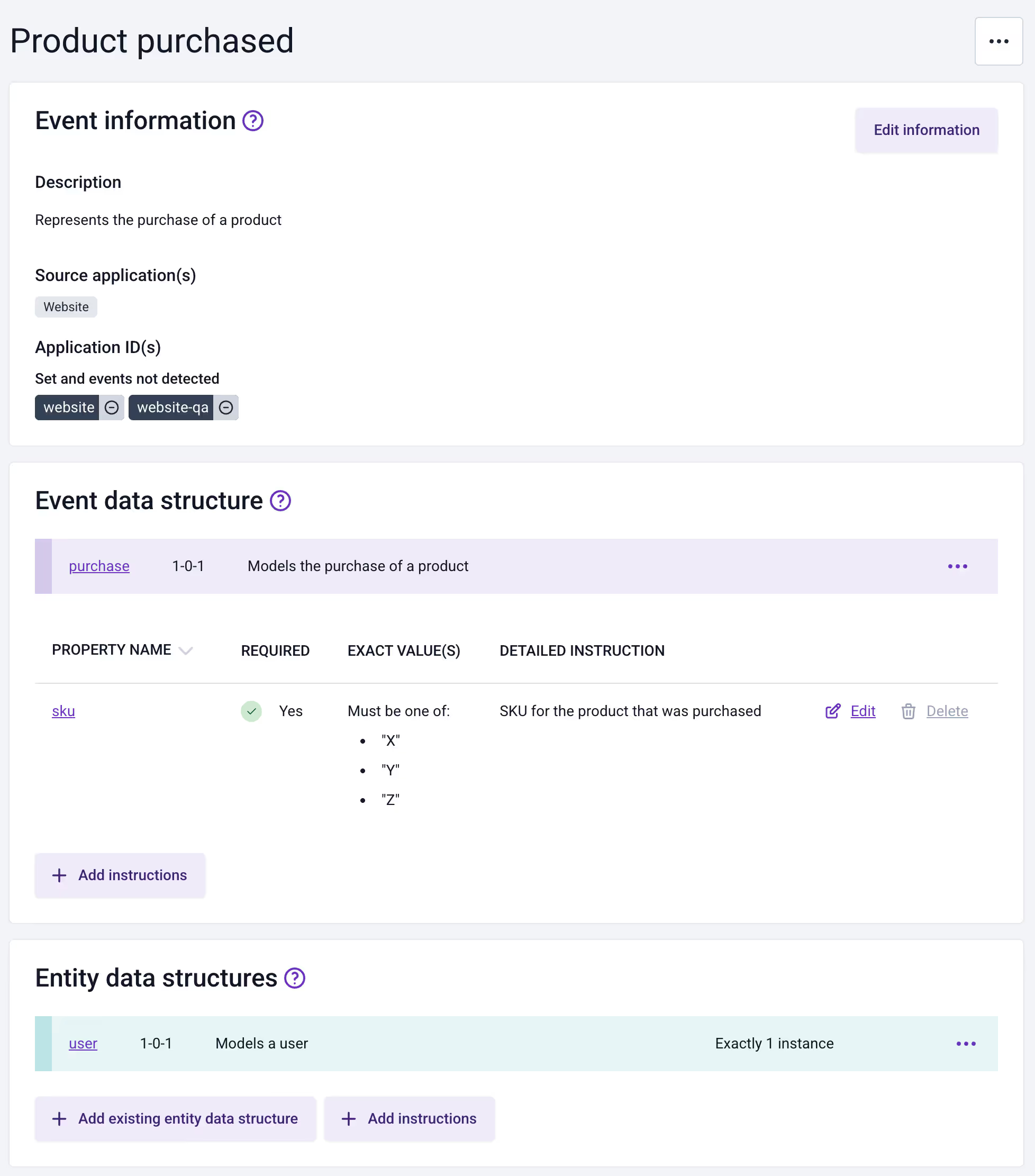

Those guardrails are permissive enough to allow flexibility and make it possible for peripheral teams to define in finer granularity what they want to achieve. For example, this hypothetical central data team may design a "purchase" data structure, where the referenced SKU is a plain string, and a "user" data structure that roughly defines what a user entity looks like:

An event specification within a data product can declare rules such as "the SKU for the purchase event can be one of X, Y and Z"; and that a user entity must be present and it has to be exactly one:

These implementation rules guide the tracking implementation engineers and ensure that the data consumers (the people designing tracking) receive the data that they expect in the warehouse, even if they are not designing the core data structures that define each event's (and entity's) shape.

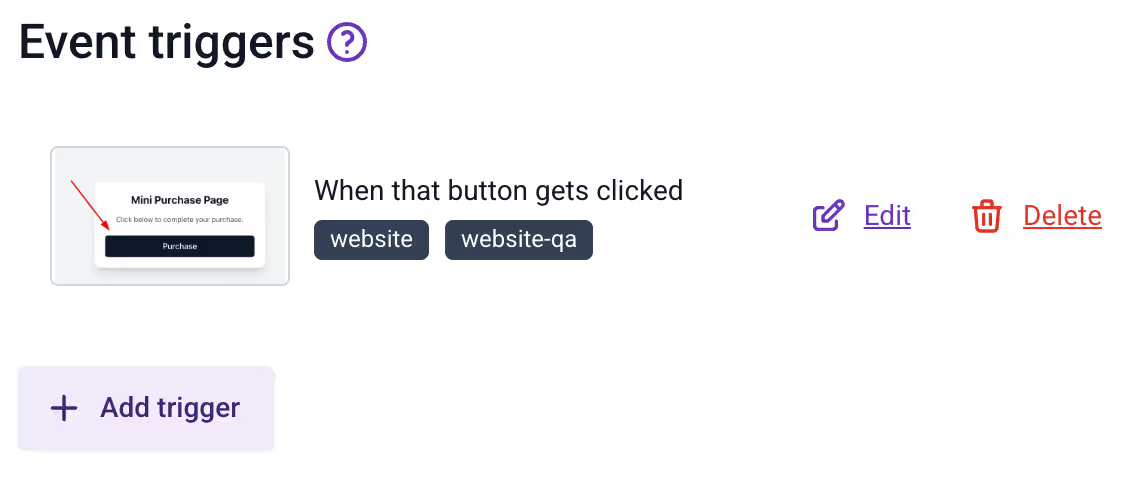

Finally, event specifications can be enriched with guidance around what triggers an event, including screenshots:

Collaboration flows

As mentioned previously, the power of Snowplow Data Products lies not only in their declarative power, but also in their features around collaboration.

To that end, there are a number of safeguards and notifications to ensure all stakeholders are aware of important updates. By way of an example, when a data structure changes and an updated version is made available, all owners of data products referencing that data structure receive an update via email, but are also informed in the Snowplow Console's UI:

Similarly, subscribers to a data product receive email notifications when that data product gets updated.

Code generation with Snowtype

Implementation instructions within event specifications guide tracking implementation engineers towards successfully instrumenting tracking, according to data consumers' expectations. However, mistakes such as typos can result in low-quality data and break data applications such as analytics dashboards.

The last piece of the Snowplow tracking puzzle is Snowtype, which protects against those mistakes. It is a command-line tool that generates strongly-typed code and function signatures wrapping Snowplow trackers.

Using the code that Snowtype generates, tracking implementation engineers can have immediate feedback inside their editor of choice when they make a typo or the events they instrument do not conform to implementation instructions. For instance, if they try to add an entity other than user to the purchase event, their editor/IDE will indicate there is an error, and offer a message to explain that a different type of entity was expected.

Snowtype integrates fully with the console's APIs and offers a seamless experience around consuming data products and generating the respective code.

For engineers that do not have console access, Snowtype also generates markdown files which essentially format the entirety of metadata captured by a data product.