How Snowplow Web Video Tracking is implemented

The JavaScript tracker is the most popular Snowplow tracker, allowing analytics to be added to websites/web apps and Node.js applications. It covers a wide range of use-cases and we've now added a new one to that list in the form of video tracking.

This is a technical look at how we implemented HTML5 and YouTube video tracking using the Snowplow JavaScript Tracker v3 plugins. If you want to use them on your website, the best place to start is to dive straight into the Snowplow Documentation for HTML5 Media Tracking and YouTube Tracking.

What is being tracked?

As a user interacts with media players you have enabled tracking on, you will receive information about both the event that occurred and its context. This information is defined in schemas created by Snowplow, which take the form of self-describing JSON. You can find a great explanation of why we chose self-describing JSON for this purpose here.

To start off, there are a couple of general-purpose schemas shared by other Snowplow trackers with video capability (such as the new Roku tracker!) which should give you an idea of the contextual information that comes with events.

An unstructured event with identifying information

// An example of the data in media_player_event unstruct event
{
  "type": "play",
  "label": "Identifying Label"
}

A media player entity attached to an event

// An example of the data in the media_player context
{
  "currentTime": 12.32,
  "duration": 20,
  "ended": false,
  "loop": false,
  "muted": true,
  "paused": false,
  "playbackRate": 1,
  "volume": 100,
  "percentProgress": 20,
  "isLive": false
}

Both trackers also have tracker-specific schema(s), explained below.

HTML5 Media Tracking

How It Works

The design behind the HTML5 tracker is fairly straightforward: the majority of the heavy lifting is done by HTMLMediaElement itself. HTMLMediaElement emits standard DOM events as playback changes, so it's simple to add event listeners that catch the events we want to emit.
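As an illustration of that listener pattern, here is a minimal sketch. It relies only on the standard EventTarget interface, which HTMLMediaElement inherits from. The helper name `attachMediaListeners` and its callback shape are hypothetical and are not taken from the plugin's source.

```typescript
// Hypothetical helper: subscribe one callback to several media event names.
// HTMLMediaElement extends EventTarget, so in a browser you would pass a
// <video> or <audio> element; any EventTarget works for testing.
type MediaEventHandler = (eventName: string) => void;

function attachMediaListeners(
  target: EventTarget,
  eventNames: string[],
  onEvent: MediaEventHandler
): () => void {
  // Keep a reference to each listener so it can be removed later.
  const listeners = eventNames.map((name) => {
    const listener = () => onEvent(name);
    target.addEventListener(name, listener);
    return { name, listener };
  });

  // Return a cleanup function that detaches every listener added above.
  return () => {
    for (const { name, listener } of listeners) {
      target.removeEventListener(name, listener);
    }
  };
}
```

In a browser, usage would look like `attachMediaListeners(document.getElementById('my-video')!, ['play', 'pause'], console.log)`, where `my-video` is a placeholder id.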

The enableMediaTracking function

This is the only function exposed by the API, but it should be everything you need. It is defined as such:

enableMediaTracking({
  id: string,
  options?: {
    label?: string,
    captureEvents?: string[],
    boundaries?: number[],
    volumeChangeTrackingInterval?: number
  }
})

Let's go over the object passed into the function, and what each property does.

Technical overview

The options are parsed into another object, named conf throughout the plugin, and that object is what the rest of the plugin uses (you'll see its fields referenced below):

export interface TrackingOptions {
  id: string;
  captureEvents: EventGroup;
  label?: string;
  progress?: {
    boundaries: number[];
    boundaryTimeoutIds: ReturnType<typeof setTimeout>[];
  };
  volume?: {
    eventTimeoutId?: ReturnType<typeof setTimeout>;
    trackingInterval: number;
  };
}
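The step from user options to conf might look roughly like the sketch below. It assumes `EventGroup` is simply a `string[]`. The function name `buildTrackingOptions` is hypothetical. The defaults are the ones this post describes: DefaultEvents, boundaries of [10, 25, 50, 75], and a 250ms volume interval. The real plugin marks progress and volume as optional and may only fill them in some cases. This sketch always fills them.

```typescript
// Hypothetical user-facing argument, mirroring the enableMediaTracking signature.
interface MediaTrackingArgs {
  id: string;
  options?: {
    label?: string;
    captureEvents?: string[];
    boundaries?: number[];
    volumeChangeTrackingInterval?: number;
  };
}

// Simplified stand-in for TrackingOptions (EventGroup assumed to be string[]).
interface TrackingOptions {
  id: string;
  captureEvents: string[];
  label?: string;
  progress?: { boundaries: number[]; boundaryTimeoutIds: ReturnType<typeof setTimeout>[] };
  volume?: { eventTimeoutId?: ReturnType<typeof setTimeout>; trackingInterval: number };
}

// Defaults as described in this post.
const DEFAULT_BOUNDARIES = [10, 25, 50, 75];
const DEFAULT_VOLUME_INTERVAL_MS = 250;

function buildTrackingOptions({ id, options = {} }: MediaTrackingArgs): TrackingOptions {
  return {
    id,
    label: options.label,
    captureEvents: options.captureEvents ?? ['DefaultEvents'],
    progress: {
      // Copy the array so the plugin never mutates the caller's boundaries.
      boundaries: [...(options.boundaries ?? DEFAULT_BOUNDARIES)],
      boundaryTimeoutIds: [],
    },
    volume: {
      trackingInterval: options.volumeChangeTrackingInterval ?? DEFAULT_VOLUME_INTERVAL_MS,
    },
  };
}
```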

The id property

This is the only required property, and for good reason: it's what is used to find the media element you want to track. Finding the element with the passed-in id can take up to two steps. The first is the findMediaElem function:

export function findMediaElem(id: string): SearchResult {
  const el: HTMLVideoElement | HTMLAudioElement | HTMLElement | null = document.getElementById(id);

  if (!el) return { err: SEARCH_ERROR.NOT_FOUND };

  if (isAudioElement(el)) return { el: el };

  if (isVideoElement(el)) {
    // Plyr loads in an initial blank video with currentSrc as https://cdn.plyr.io/static/blank.mp4
    // so we need to check until currentSrc updates
    if (el.currentSrc === 'https://cdn.plyr.io/static/blank.mp4' && el.readyState === 0) {
      return { err: SEARCH_ERROR.PLYR_CURRENTSRC };
    }
    return { el: el };
  }

  return findMediaElementChild(el);
}

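We won't go through this line by line, as it's a fairly straightforward function. The main thing of note is the explicit handling of Plyr, a popular HTML5 video framework. Plyr initially loads a blank placeholder video, so the function reports an error while that placeholder is still loaded (currentSrc is the blank video and readyState is 0).

If the element found is neither an <audio> nor a <video> tag, the second step, findMediaElementChild, looks through its child tree for either tag. There's a good reason for this. Take a look at the snippet below, which is the kind of thing you might see in a React app.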

import { useEffect } from 'react';
import Plyr from 'plyr-react';
import { enableMediaTracking } from '@snowplow/browser-plugin-media-tracking';

export default function PlyrVideo() {
  // Empty dependency array: enable tracking once, after the first render,
  // rather than attaching new listeners on every re-render
  useEffect(() => {
    enableMediaTracking({
      id: 'plyr',
    });
  }, []);

  return (
    <div className="video-container">
      <Plyr id="plyr"></Plyr>
    </div>
  );
}

And this is the HTML produced for that component on the page:

<div class="video-container">
  <div tabindex="0" class="plyr plyr--full-ui plyr--video plyr--html5 plyr--paused plyr--stopped plyr--fullscreen-enabled">
    ...
    <div class="plyr__video-wrapper">
      <video playsinline="" src="https://cdn.plyr.io/static/blank.mp4">
        <source src="https://cdn.plyr.io/static/blank.mp4" type="video/mp4" size="720">
        <source src="https://cdn.plyr.io/static/blank.mp4" type="video/mp4" size="1080">
      </video>
      <div class="plyr__poster"></div>
      ...
    </div>
  </div>
</div>

Sadly for us, depending on their design, React components can render out in a way that doesn't include your id attribute. That's what has happened here: id="plyr" appears nowhere in the output, so the plugin has nothing to find.

To get around this, you can simply give the id to an HTML parent of the React component. In this case, the parent div:

...
<div id="plyr" className="video-container">
  <Plyr></Plyr>
</div>
...

As long as there is a single <audio> or <video> tag somewhere inside <div id="plyr" className="video-container">, the element will be found just fine!
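The child search could be sketched as follows. To keep it runnable outside a browser, it uses a minimal element shape with only `tagName` and `children`. The function name, the error strings, and the choice to reject more than one match are all assumptions based on the "single" element wording above, not the plugin's actual findMediaElementChild.

```typescript
// Minimal stand-in for a DOM element; Element.tagName and Element.children
// provide the same data in a browser.
interface ElementLike {
  tagName: string;
  children: ArrayLike<ElementLike>;
}

type ChildSearch = { el: ElementLike } | { err: 'NOT_FOUND' | 'MULTIPLE_ELEMENTS' };

// Hypothetical: walk every descendant of root and succeed only if exactly
// one <audio> or <video> element is found.
function findSingleMediaDescendant(root: ElementLike): ChildSearch {
  const matches: ElementLike[] = [];
  const stack: ElementLike[] = Array.from(root.children);
  while (stack.length > 0) {
    const current = stack.pop()!;
    const tag = current.tagName.toUpperCase();
    if (tag === 'AUDIO' || tag === 'VIDEO') matches.push(current);
    stack.push(...Array.from(current.children));
  }
  if (matches.length === 0) return { err: 'NOT_FOUND' };
  if (matches.length > 1) return { err: 'MULTIPLE_ELEMENTS' };
  return { el: matches[0] };
}
```

In a real page, `root.querySelectorAll('audio, video')` would do the same walk in one call. The explicit loop here just makes the search visible.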

The options.label property

Fairly straightforward: this lets you assign a known label to the unstructured event you will receive.

{
  id: "example-id",
  options: {
    label: "example-label",
  },
}

This gives us:

"unstruct_event": {
  "schema": "iglu:com.snowplowanalytics.snowplow/unstruct_event/jsonschema/1-0-0",
  "data": {
    "schema": "iglu:com.snowplowanalytics.snowplow/media_player_event/jsonschema/1-0-0",
    "data": { "type": "pause", "label": "example-label" }
  }
},
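The nesting in that payload can be reproduced with a small helper. This is a sketch only: `buildMediaPlayerEvent` is a hypothetical name, and the schema URIs are copied from the example above. In practice the tracker adds the outer unstruct_event wrapper itself when it sends a self-describing event. The helper builds both layers only to mirror the JSON shown.

```typescript
// Schema URIs as they appear in the example event above.
const UNSTRUCT_EVENT_SCHEMA = 'iglu:com.snowplowanalytics.snowplow/unstruct_event/jsonschema/1-0-0';
const MEDIA_PLAYER_EVENT_SCHEMA = 'iglu:com.snowplowanalytics.snowplow/media_player_event/jsonschema/1-0-0';

interface SelfDescribingJson<T> {
  schema: string;
  data: T;
}

interface MediaPlayerEventData {
  type: string;
  label?: string;
}

// Hypothetical helper: wrap an event type and optional label in the nested
// self-describing structure seen in the unstruct_event field.
function buildMediaPlayerEvent(
  type: string,
  label?: string
): SelfDescribingJson<SelfDescribingJson<MediaPlayerEventData>> {
  // Only include label when one was configured.
  const data: MediaPlayerEventData = label === undefined ? { type } : { type, label };
  return {
    schema: UNSTRUCT_EVENT_SCHEMA,
    data: { schema: MEDIA_PLAYER_EVENT_SCHEMA, data },
  };
}
```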

The options.captureEvents property

This option lets you pick which of the supported events you want to capture. For example, if you were only interested in seeing when a video has finished, you could use the following snippet:

enableMediaTracking({
  id: "example-id",
  options: {
    captureEvents: ['ended'],
  },
});

Event Groups

As we wanted users to be able to get up and running with this plugin with minimal fuss, providing sensible defaults for potentially more in-depth features was essential. The player can produce a lot of events, and you're unlikely to want each and every one.

Event groups provide pre-made arrays of events if you just want to get started with tracking. There are two event groups: DefaultEvents and AllEvents.

DefaultEvents

This is the default value of options.captureEvents, and contains all the events that are likely to be appropriate for general video tracking needs:

enableMediaTracking({
  id: "example-id",
  options: {
    captureEvents: ['DefaultEvents'],
  },
});

DefaultEvents contains the following:

[ "ready", "pause", "play", "seeked", "ratechange", "volumechange", "ended", "fullscreenchange", "percentprogress" ]

AllEvents

If you want to capture every event possible (and fill up your collector!), you can use AllEvents in the same way as DefaultEvents:

enableMediaTracking({
  id: "example-id",
  options: {
    captureEvents: ['AllEvents'],
  },
});

Extending Event Groups

A final useful part of event groups is the ability to extend them with other events. For example, you may want all the events in DefaultEvents, but also want to track the emptied event. Removing DefaultEvents and then adding every event manually would be a bit of a chore, so instead you can extend an event group like this:

enableMediaTracking({
  id: 'example-video',
  options: {
    captureEvents: ['DefaultEvents', 'emptied'],
  },
});
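One way to expand a mixed list of group names and event names is sketched below. `expandCaptureEvents` is a hypothetical name, and the plugin's own expansion logic may differ. Only DefaultEvents is included, with its members as listed above. AllEvents is left out because this post doesn't list its full contents.

```typescript
// DefaultEvents as listed in this post; AllEvents would be added the same way.
const EVENT_GROUPS = new Map<string, string[]>([
  ['DefaultEvents', ['ready', 'pause', 'play', 'seeked', 'ratechange', 'volumechange', 'ended', 'fullscreenchange', 'percentprogress']],
]);

// Hypothetical: replace each group name with its member events, keep
// individual event names as-is, and drop duplicates.
function expandCaptureEvents(captureEvents: string[]): string[] {
  // A Set preserves insertion order, so the first occurrence of each event wins.
  const result = new Set<string>();
  for (const entry of captureEvents) {
    const group = EVENT_GROUPS.get(entry);
    if (group !== undefined) {
      group.forEach((eventName) => result.add(eventName));
    } else {
      result.add(entry);
    }
  }
  return Array.from(result);
}
```

With this approach, `['DefaultEvents', 'emptied']` yields the nine default events followed by `emptied`. Listing an event that is already in the group has no further effect.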

The options.boundaries property

This is used in conjunction with the percentprogress event, so as a quick aside...

The percentprogress event

This event fires when playback reaches a specified percentage of the way through the media. The idea was born after we considered using page pings (where an event is emitted every n seconds), but found that they added a lot of noise to the event log for a video tracking application. As the point of such an event is to see whether a user is still consuming the media, setting points in the video that fire events as the user progresses through them seemed to make sense.

Using this method to check whether the user is still engaged with the media has a further benefit: you can easily see whether a user has viewed any key parts of your video.

Anyway, back to options.boundaries...

By default, the boundaries provided are:

[10, 25, 50, 75]

The initial idea was to simply poll the player every n ms to see whether the integer (or, as this is JavaScript, non-fractional) portion of the current percentage progress matched any of the percentages passed into boundaries. There were a few problems with this method, and it was scrapped pretty quickly:

- Shorter videos required more frequent polling to ensure every percent was checked

- Polling, in general, is undesirable, as frequent polling can cause performance issues

So the idea had a rethink, and we landed on a JavaScript staple: setTimeout. This is another rather simple solution. We calculate the future time of each percentprogress event and set a timeout to fire the event at that time.

function setPercentageBoundTimeouts(el: HTMLAudioElement | HTMLVideoElement, conf: TrackingOptions) {
  conf.progress!.boundaries.forEach((boundary) => {
    const absoluteBoundaryTimeMs = el.duration * (boundary / 100) * 1000;
    const timeUntilBoundaryEvent = absoluteBoundaryTimeMs - el.currentTime * 1000;
    if (0 < timeUntilBoundaryEvent) {
      conf.progress!.boundaryTimeoutIds.push(
        setTimeout(
          () => waitAnyRemainingTimeAfterTimeout(el, absoluteBoundaryTimeMs, boundary, conf),
          timeUntilBoundaryEvent
        )
      );
    }
  });
}
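The arithmetic in setPercentageBoundTimeouts can be pulled out into a pure function that is easy to check by hand. This is a sketch: `boundaryDelays` is a hypothetical name. The `playbackRate` parameter is an assumption not present in the original code. It scales the wait so that, at 2x speed, a boundary arrives in half the wall-clock time.

```typescript
interface BoundaryDelay {
  boundary: number; // percentage, e.g. 25
  delayMs: number;  // wall-clock milliseconds until playback reaches it
}

// Same arithmetic as setPercentageBoundTimeouts, without the timers.
function boundaryDelays(
  durationSec: number,
  currentTimeSec: number,
  boundaries: number[],
  playbackRate = 1 // assumption: not part of the original calculation
): BoundaryDelay[] {
  const delays: BoundaryDelay[] = [];
  for (const boundary of boundaries) {
    const absoluteBoundaryTimeMs = durationSec * (boundary / 100) * 1000;
    const mediaTimeUntilBoundaryMs = absoluteBoundaryTimeMs - currentTimeSec * 1000;
    // Boundaries already passed (or exactly at the playhead) are skipped. A NaN
    // duration (metadata not loaded yet) also fails this check, so nothing is scheduled.
    if (mediaTimeUntilBoundaryMs > 0) {
      delays.push({ boundary, delayMs: mediaTimeUntilBoundaryMs / playbackRate });
    }
  }
  return delays;
}
```

Take a 20-second video at 5 seconds with the default boundaries. The 10% and 25% boundaries are skipped. The 50% and 75% boundaries are scheduled 5000ms and 10000ms ahead.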

However, the timeouts didn't fire quite when expected, so enter waitAnyRemainingTimeAfterTimeout:

// The timeout in setPercentageBoundTimeouts fires ~100-300ms early
// waitAnyRemainingTimeAfterTimeout ensures the event is fired accurately
function waitAnyRemainingTimeAfterTimeout(
  el: HTMLAudioElement | HTMLVideoElement,
  absoluteBoundaryTimeMs: number,
  boundary: number,
  conf: TrackingOptions
) {
  if (el.currentTime * 1000 < absoluteBoundaryTimeMs) {
    setTimeout(() => {
      waitAnyRemainingTimeAfterTimeout(el, absoluteBoundaryTimeMs, boundary, conf);
    }, 10);
  } else {
    mediaPlayerEvent(SnowplowEvent.PERCENTPROGRESS, el, conf, { boundary: boundary });
  }
}

As the comment suggests, the timeout was firing an inconsistent amount of time earlier than expected. Whilst this likely wouldn't be a problem for most use-cases, with shorter videos it could result in a significant discrepancy. Once the timeout fires, this function takes over and checks every 10ms until it's time to fire the event. This goes against what was said only a few lines ago about avoiding frequent polling. However, the function covers at most the ~300ms shortfall, so it checks at most ~30 times (300ms / 10ms), and accurate firing times are worth that tradeoff in this instance.
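The re-check loop can be written in a generic, testable form. This is a sketch under stated assumptions. `fireWhenReached` is a hypothetical name. The clock (`getCurrentTimeMs`) and the scheduler are injected so the loop can run without a real media element. In a browser they would read `el.currentTime * 1000` and call `setTimeout`.

```typescript
type Schedule = (fn: () => void, delayMs: number) => void;

// Generic form of waitAnyRemainingTimeAfterTimeout: keep re-checking every
// recheckMs until the playhead reaches targetMs, then fire once.
function fireWhenReached(
  getCurrentTimeMs: () => number,
  targetMs: number,
  onReached: () => void,
  schedule: Schedule = (fn, delayMs) => { setTimeout(fn, delayMs); },
  recheckMs = 10
): void {
  if (getCurrentTimeMs() < targetMs) {
    // Not there yet: look again after a short interval.
    schedule(() => fireWhenReached(getCurrentTimeMs, targetMs, onReached, schedule, recheckMs), recheckMs);
  } else {
    onReached();
  }
}
```

With a fake clock, a timeout that fires 200ms early leads to 20 re-checks before the event fires. A 300ms shortfall would give 30, consistent with the figure above.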

The options.volumeChangeTrackingInterval property

This option is used in one place:

// Dragging the volume scrubber will generate a lot of events, this limits the rate at which
// volume events can be sent
if (e === MediaEvent.VOLUMECHANGE) {
  clearTimeout(conf.volume!.eventTimeoutId as ReturnType<typeof setTimeout>);
  conf.volume!.eventTimeoutId = setTimeout(() => trackMediaEvent(event), conf.volume!.trackingInterval);
} else {
  trackMediaEvent(event);
}

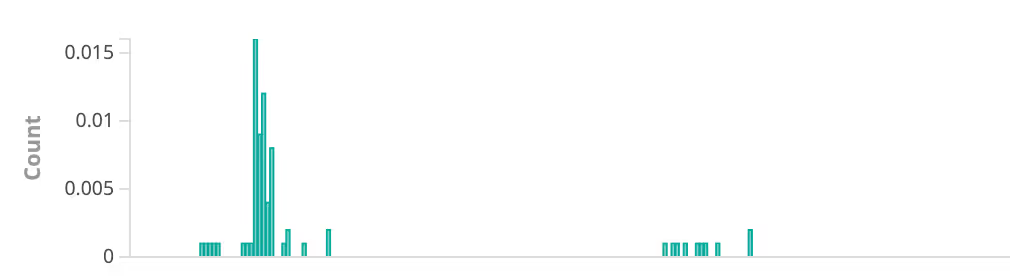

This option is related to only a single event - volumechange. This is because of the way the volume scrubber works on HTML5 players. By clicking and slowly dragging the slider, you can send out tens of events per second. The graph below shows the relative difference in event generation between setting volumeChangeTrackingInterval to 5ms (left) vs the default 250ms (right), whilst holding and slowly dragging the volume slider.
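Strictly speaking, the clearTimeout/setTimeout pattern above is a trailing-edge debounce. While the slider keeps moving faster than the interval, nothing is sent. Once it settles, only the final volume is tracked. Here is a standalone sketch of the same pattern. The `debounce` helper is hypothetical and not an export of the plugin.

```typescript
// Each call cancels the pending timeout and starts a new one, so only the
// last call in a burst runs, intervalMs after the burst ends.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  intervalMs: number
): (...args: A) => void {
  let timeoutId: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    if (timeoutId !== undefined) clearTimeout(timeoutId);
    timeoutId = setTimeout(() => fn(...args), intervalMs);
  };
}
```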

HTML5 Specific Schemas

This plugin also uses a couple of HTML5-specific schemas, mostly based on the data provided by HTMLMediaElement and HTMLVideoElement.

The media_element schema is used by both audio and video elements:

{
  "htmlId": "my-video",
  "mediaType": "VIDEO",
  "autoPlay": false,
  "buffered": [
    { "start": 0, "end": 20 }
  ],
  "controls": true,
  "currentSrc": "http://example.com/video.mp4",
  "defaultMuted": true,
  "defaultPlaybackRate": 1,
  "disableRemotePlayback": false,
  "error": null,
  "networkState": "IDLE",
  "preload": "metadata",
  "readyState": "ENOUGH_DATA",
  "seekable": [
    { "start": 0, "end": 20 }
  ],
  "seeking": false,
  "src": "http://example.com/video.mp4",
  "textTracks": [
    {
      "label": "English",
      "language": "en",
      "kind": "captions",
      "mode": "showing"
    }
  ],
  "fileExtension": "mp4",
  "fullscreen": false,
  "pictureInPicture": false
}

The video_element schema, by contrast, is only used when tracking video elements:

{
  "autoPictureInPicture": false,
  "disablePictureInPicture": false,
  "poster": "http://www.example.com/poster.jpg",
  "videoHeight": 300,
  "videoWidth": 400
}
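Several media_element values start out in a different shape on the element itself. On the element, buffered and seekable are TimeRanges objects, and networkState and readyState are numbers. The sketch below shows one possible conversion into the JSON above. The helper name is hypothetical, and only the "IDLE" and "ENOUGH_DATA" strings come from the example. The other state names are assumed, derived by dropping the NETWORK_ and HAVE_ prefixes from the standard HTMLMediaElement constants.

```typescript
// Same shape as the browser's TimeRanges interface.
interface TimeRangesLike {
  length: number;
  start(index: number): number;
  end(index: number): number;
}

// Hypothetical: turn el.buffered / el.seekable into [{ start, end }] arrays.
function timeRangesToArray(ranges: TimeRangesLike): { start: number; end: number }[] {
  const result: { start: number; end: number }[] = [];
  for (let i = 0; i < ranges.length; i++) {
    result.push({ start: ranges.start(i), end: ranges.end(i) });
  }
  return result;
}

// Indexed by the numeric value: NETWORK_EMPTY = 0 ... NETWORK_NO_SOURCE = 3,
// and HAVE_NOTHING = 0 ... HAVE_ENOUGH_DATA = 4 (names past IDLE/ENOUGH_DATA assumed).
const NETWORK_STATES = ['EMPTY', 'IDLE', 'LOADING', 'NO_SOURCE'];
const READY_STATES = ['NOTHING', 'METADATA', 'CURRENT_DATA', 'FUTURE_DATA', 'ENOUGH_DATA'];
```

In a browser, `NETWORK_STATES[el.networkState]` and `timeRangesToArray(el.buffered)` would produce values shaped like those in the example.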

YouTube Video Tracking

How it works

YouTube tracking works a bit differently from HTML5 media tracking. Even though the player is still technically a <video> element, embedding YouTube videos on your site requires the use of an IFrame. Because of the browser's same-origin policy, there isn't a way to programmatically access the content of that cross-origin IFrame. This might have been a bit of a problem for this project but, luckily, Google provides the YouTube IFrame Player API for exactly this purpose.

Since we are relying on Google's API, this tracker is more restrictive in the events and data it can capture. However, it should still be more than good enough for the majority of your tracking needs.

A slight drawback

Whilst Google providing the API is very handy, there's a rather unfortunate limitation. If you're using Google Analytics 4 (GA4), you might have seen "Video engagement" in the "Enhanced measurement" settings.

As that setting's description says, Google uses the IFrame API to perform its own video tracking for Google Analytics, but only one instance of the API can be attached to a player. With the setting enabled, GA4 and Snowplow race to attach to the YouTube IFrame, so you need to disable it for reliable tracking.

The enableYouTubeTracking function

As with the HTML5 plugin, this is the one and only function exposed. It is defined as such:

enableYouTubeTracking({
  id: string,
  options?: {
    label?: string,
    captureEvents?: string[],
    boundaries?: number[],
    updateRate?: number
  }
})

As you can see, the object passed into the function is almost identical to the one for enableMediaTracking, with updateRate in place of volumeChangeTrackingInterval. Despite this, how it works in the background differs in a few key places, which brought entirely new challenges when designing this plugin. Let's start by going through the object passed in!

Technical overview

The id property

As with Media tracking, this is just passed into document.getElementById to find your IFrame element.

The options.label property

Identical to Media tracking described above.

The options.captureEvents property

This option is used in the same way, but works a little differently from Media tracking. All our events come from the IFrame API, so there were a few problems to solve to get it working as intended.

YouTube player API callbacks

The player object in the YouTube IFrame API provides six events of its own:

onReady

This callback is used to set up the other event listeners on the player:

player: new YT.Player(conf.mediaId, { events: { onReady: (e: any) => playerReady(e, conf) } })

Calling methods on the player instance before this callback fires results in errors, which is why the other event listeners are only added once it has.

onStateChange

This is an important one for tracking, as this is where we get play, pause, ended, buffering and cued from.
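onStateChange passes the new state as a number in `event.data`. The numeric values below are those of `YT.PlayerState` in the YouTube IFrame Player API. The mapping to event names and the `eventForStateChange` helper are a hypothetical sketch of how these five events could be derived. They are not the plugin's code. The sketch assumes captureEvents has already been expanded into plain event names.

```typescript
// Numeric values of YT.PlayerState in the YouTube IFrame Player API.
const YT_PLAYER_STATE = {
  UNSTARTED: -1,
  ENDED: 0,
  PLAYING: 1,
  PAUSED: 2,
  BUFFERING: 3,
  CUED: 5,
} as const;

// Hypothetical mapping; UNSTARTED has no entry, so it produces no event.
const STATE_TO_EVENT: Record<number, string | undefined> = {
  [YT_PLAYER_STATE.ENDED]: 'ended',
  [YT_PLAYER_STATE.PLAYING]: 'play',
  [YT_PLAYER_STATE.PAUSED]: 'pause',
  [YT_PLAYER_STATE.BUFFERING]: 'buffering',
  [YT_PLAYER_STATE.CUED]: 'cued',
};

// Return the event to track for a state change, or undefined if the state is
// unmapped or the user didn't ask to capture that event.
function eventForStateChange(state: number, captureEvents: string[]): string | undefined {
  const eventName = STATE_TO_EVENT[state];
  return eventName !== undefined && captureEvents.indexOf(eventName) !== -1 ? eventName : undefined;
}
```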

onPlaybackQualityChange

This one is fairly self-explanatory: it fires when the playback quality changes (who could have guessed?) and provides the new quality level of the video.

onPlaybackRateChange

Similar to above, does what it says on the tin.

onError

Fires if an error occurs in the player. There are five error codes (technically four, as 101 and 150 mean the same thing), so we just have a handy lookup table for slightly more descriptive error states:

export const YTError: Record<number, string> = {
  2: 'INVALID_URL',
  5: 'HTML5_ERROR',
  100: 'VIDEO_NOT_FOUND',
  101: 'MISSING_EMBED_PERMISSION',
  150: 'MISSING_EMBED_PERMISSION',
};
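The table can be read defensively in case the player reports a code it doesn't contain. In this sketch, both the helper name `describeYouTubeError` and the `UNKNOWN_ERROR` fallback label are assumptions. The own-property check avoids accidentally matching inherited object properties.

```typescript
// YTError table as shown above.
const YTError: Record<number, string> = {
  2: 'INVALID_URL',
  5: 'HTML5_ERROR',
  100: 'VIDEO_NOT_FOUND',
  101: 'MISSING_EMBED_PERMISSION',
  150: 'MISSING_EMBED_PERMISSION',
};

// Hypothetical lookup for onError's event.data, with an assumed fallback label.
function describeYouTubeError(code: number): string {
  return Object.prototype.hasOwnProperty.call(YTError, code) ? YTError[code] : 'UNKNOWN_ERROR';
}
```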

onApiChange

This event isn't terribly useful for our purposes: essentially, it fires when the player loads or unloads a module.

Schemas

Aside from the general Snowplow media schema, there is also a YouTube-specific schema, based on the IFrame API properties.

{
  "autoPlay": false,
  "avaliablePlaybackRates": [0.25, 0.5, 0.75, 1, 1.25, 1.5, 1.75, 2],
  "buffering": false,
  "controls": true,
  "cued": false,
  "loaded": 17,
  "playbackQuality": "hd1080",
  "playerId": "youtube",
  "unstarted": false,
  "url": "https://www.youtube.com/watch?v=zSM4ZyVe8xs",
  "yaw": 0,
  "pitch": 0,
  "roll": 0,
  "fov": 100.00004285756798,
  "avaliableQualityLevels": ["hd2160", "hd1440", "hd1080", "hd720", "large", "medium", "small", "tiny", "auto"]
}

These new tracker plugins should handle your web video needs, but please feel free to open Discourse topics with ideas, and to add issues and bug reports to the repository.