Building data models with Snowplow and dbt

In our previous chapter, we looked at how to design data models to fit your business. This time, we’ll explore how to get started with building, testing and deploying data models with Snowplow and dbt.

Snowplow and dbt: A powerful combination to capture and model your data

Before we dive in, let’s explore why Snowplow and dbt make for a great combination when it comes to collecting and modeling behavioral data at scale. It comes down to two key reasons:

- Snowplow enables you to capture the best possible behavioral data to feed into your data models, your data warehouse and your applications further downstream;

- dbt is the most versatile, flexible tooling for data transformation, with several useful features right out of the box.

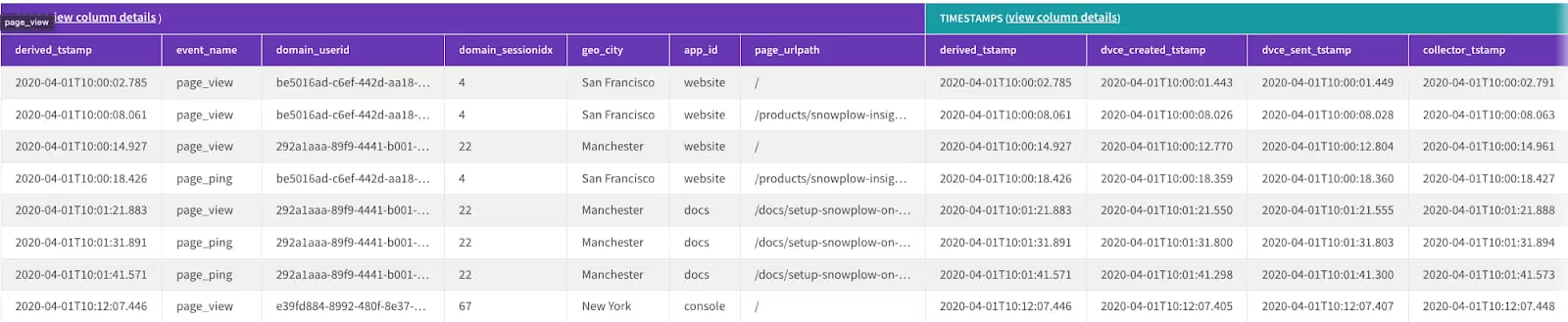

Without going into the intricacies of why Snowplow data is so powerful, behavioral data captured from Snowplow is rich, well-structured and perfectly suited to your data modeling use cases. You can also explore Snowplow data for yourself to see what it looks like. Other reasons you should consider Snowplow data for your data transformation might include:

- Raw, unopinionated data – Snowplow lets you capture raw, unopinionated data into your data warehouse, where you decide how best to apply your own business logic in a way that makes sense for your organization.

- Astonishingly rich data – Even Snowplow’s raw data is uniquely rich by default, with almost 100 data points attached to each event.

- Architected for data quality – Snowplow’s behavioral data platform was designed from the ground up to capture clean, well-structured data. When aggregating (modeling) behavioral data, data quality becomes even more important to avoid propagating bad data in large data models.

- Flexible data collection – Snowplow gives you total flexibility over how you capture your behavioral data, enabling you to collect data that suits your business and data modeling needs.

Working with Snowplow data within dbt data is a perfect setup for building data models that match your use cases and business objectives. Let’s look at a few examples of what makes dbt ideal for analytics engineers:

- Enhanced collaboration – dbt makes it easy to write transformation code using just SQL, meaning anyone on the team who knows SQL can easily contribute to end-to-end transformation pipelines. Built-in version control helps ensure alignment.

- Testing – dbt supports robust testing capabilities to ensure broken models never make it to production. This is possible through either “schema tests” or “data tests.” (more on that later)

- Documentation and Lineage – Auto-generated documentation and self updating dependency graphs reduce manual overhead while promoting transparency and consistency.

- Unique features like Jinja – In dbt you can combine SQL with Jinja, a templating language that allows you to do more than is usually possible with SQL, such as writing if statements or abstracting SQL snippets for reusable macros.

- Packages to get you started – dbt comes ready with modeling packages so you don’t need to start from scratch, such as Snowplow’s dbt package which you can iterate on for your data modeling use cases.

These are just a few highlights of what makes Snowplow and dbt a powerful combination when it comes to delivering rich insights from your data models. Let’s dive into how to get started.

What do I need to get started?

Aside from dbt and Snowplow, there are a few other things to consider before you start transforming.

- An environment to run dbt in production – dbt Cloud is a good option;

- A cloud data warehouse – either Redshift, BigQuery or Snowflake (here’s a handy guide to choosing between them) or a database such as Postgres;

- Business Intelligence tools (BI) such as Looker, Mode, Redash or Tableau where you can deliver the insights and build dashboards from your data models.

- A blueprint for how you will design your data models so they meet the needs of your internal customers (see chapter 2);

- A centralized approach to your data modeling function, ideally backed by documentation, a data dictionary and a style guide for how you’ll be writing SQL.

With these in place, including an analytics engineer or analyst, you’re in a great position to start building data models for your team.

Best practices for building your first data model

Data modeling, like other aspects of engineering, should be carefully governed from a centralized set of best practices, documented and shared throughout the data team. This will prevent a data modeling free-for-all, with code inconsistencies, duplication of work and and overall confusion. It’s worth considering that the planning and design phase of your data modeling project is as important, if not more vital than the process of writing SQL itself.

With that understood, there are some best practices you can take away when it comes to building your first data model.

- Build and adhere to a SQL style guide

When building data models, especially as the team grows, you’ll need a style guide to ensure the team writes SQL effectively and consistently. While some variation is expected, the way your SQL is written is crucial when it comes to iterating on your data model. If your code is impossible to read, or, even if it’s elegantly written but unclear to other analysts, fixing bugs in the SQL or evolving the data model for new requirements becomes a huge challenge.

In your style guide, you should set out

- How your SQL should be written to make it clear and iterative for other analysts;

- Rules to abide by when writing SQL (e.g. avoid nested queries that are hard to read);

- How to break complex models out into small, logical steps in the code to make it easy and fast to update.

- Make important decisions early in the data modeling lifecycle

Building robust data models is about preparing for the future. Planning and designing data models effectively means you’ll significantly reduce the ‘clean up’ required later downstream. It’s also a critical part to get right, because the success of your data models depends on internal communication with internal customers such as the product and marketing team.

You don’t want to be in a position where you’re making key decisions about the purpose and design of your data models when you’re about to start writing SQL. By then, it’s already too late. Spend sufficient time architecting your data models, and the implementation will be faster and easier.

- Build a data dictionary

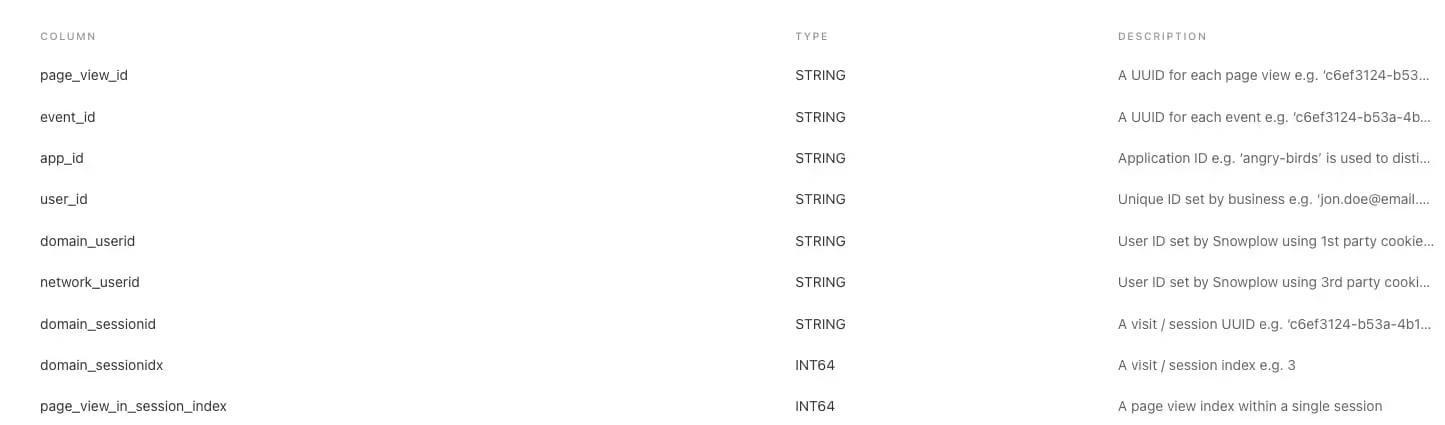

Within dbt, it’s possible to describe your properties, such as unique identifiers, timestamps and so on to make it clear what variables you’re referring to in your data model, and easily access definitions later via the built in documentation functionality.

You’ll find an example of how we’ve broken down each variable in our Snowplow dbt package. It takes additional effort to create these descriptions, but the payoff in the long run is well worth it. When each property is explicitly described, it’s easy for others in the data team and the wider business to understand the data they’re working with. This is especially useful as the business grows, when people leave or for new analysts getting up to speed with your data modeling process.

- Decide on a cost effective approach that fits your use case

In our last chapter, we mentioned how the design and planning phase of data modeling is critical to the data models running efficiently and cost effectively. For instance, the way you structure your tables will have a big impact on the costs of running queries in your warehouse. Large, flat tables can use large amounts of storage and lead to huge warehousing costs, for example.

Behavioral data volumes tend to be large by nature, so understanding your warehouse and databasing solution is key to keeping running costs efficient. By following best practices like always placing limits on partition columns, selecting only the required columns and avoiding unnecessary use of expensive functions in SQL such as window functions can significantly help reduce costs.. It’s worth factoring these factors into your design process to avoid landing unexpectedly high warehousing costs.

- Build a process for robust testing

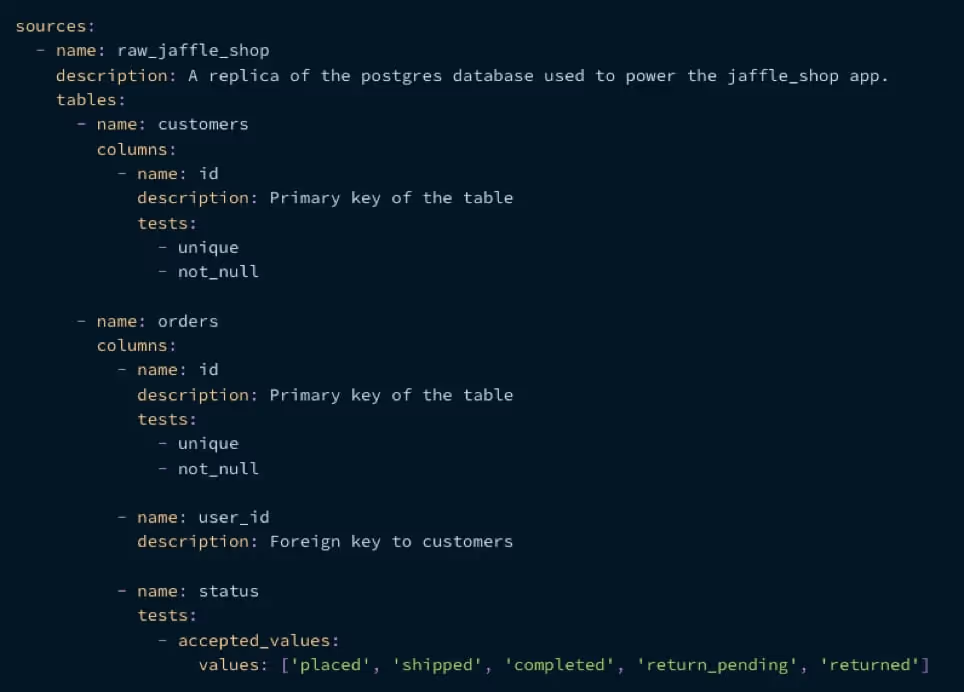

Testing is important to build assurance in your data models before you deploy to production. There are various tests you can run within dbt, let’s explore a few of these:

- Schema tests

- Schema tests are generic tests that can be reused throughout your project. They perform tests on a single column at a time, with typical example being uniqueness and not-null tests. These are really useful in development (checking your code actually produces a unique primary key for example) as well as in production (your source data does not have nulls occuring where it shouldn't) (

- Bespoke data tests

- Bespoke tests allow you to write SQL queries that will return failing records, which means you can test your model until your test returns zero results. These are flexible, one-off tests which can be great to uncover bugs in your model.

- Unit tests

- Going one level deeper, you can on a model-by-model basis create a dummy input dataset and an expected output dataset in the form of a csv. Using dbt’s seed functionality, you can upload both datasets, run the model using the input dataset and verify the actual output matches the expected... While this requires a little extra work, it is a super robust way to see that your SQL is producing your intended results before deploying your model.

Before you deploy

You’ve tested your data model and you’re comfortable with the results. There’s a few steps to check off before you submit that pull request.

- Ensure best practices have been adhered to - Checking whether best practices such as style guides, documentation & tests have been correctly implemented can be tedious. Thankfully there are some tools to help, for example the ‘dbt Meta Testing’ package can check if models have documentation and tests associated with them, while SQLFluff enforces style rules are met. Tools like these can be run as Github Actions pre-merge.

- Set up your data modeling practise as part of your Continuous Integration workflow – It’s worth taking a holistic view of your data modeling practice as part of your wider CI workflow. For example, you could set up a Github action to run your dbt project against a dev database to ensure that all tests pass before you perform a merge.

- Double check your job scheduling – Some models may only need to run every few weeks, some every few minutes. Double check you have set up your scheduled jobs performantly according to your use case.

- Communicate with your team and internal customers – Make sure the rest of the data team, as well as your end users, are in the loop on the data model’s progress and what they can expect. Communication is a crucial, but often overlooked part of the data lifecycle that can save time downstream when the data is live in dashboards and reports.

In our next chapter, we’ll look at how data modeling has evolved as a practice, where it stands today, and what the future holds for how data modeling technology and processes will develop.