5 Key Reasons to Integrate a Customer Data Infrastructure with Your Data Lakehouse

Organizations today rely heavily on customer behavioral data to gain a competitive edge. While this is a well-known fact, not every organization can effectively manage and utilize the massive volumes of customer data generated across a growing number of touchpoints.

From our experience working with some of the world’s leading companies, there are several reasons for this dilemma: fragmented data collection, privacy concerns, and the inability to access and analyze this data in real time. All of these lead to missed business opportunities and suboptimal decision-making.

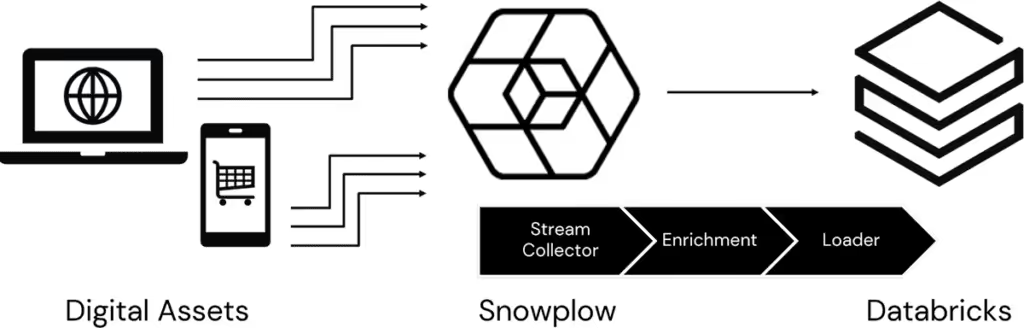

So what can you do to overcome these hurdles? Part of the solution lies in integrating a Customer Data Infrastructure (CDI) like Snowplow with a data lakehouse such as the Databricks Data Intelligence Platform.

This powerful combination is helping organizations to collect, process, model, and analyze real-time behavioral data from digital properties, while ensuring privacy and compliance standards are met.

In this blog post, we unpack five key reasons why integrating a CDI with your data lakehouse is essential for success in the digital era, drawing insights from a recent conversation between Alex Dean (CEO and Co-founder, Snowplow), Bryan Smith (Global Industry Solutions Director, Retail & CPG, Databricks), and Fern Halper (VP & Senior Director, Advanced Analytics, TDWI) on the webinar – Leveraging Customer Data for Enhanced Analytics and AI in the Lakehouse.

5 Reasons to Integrate a Customer Data Infrastructure with Your Data Lakehouse

- Centralized Management of Customer Behavioral Data

- Real-Time and Historical Analysis Capabilities

- Integration with Advanced Analytics and AI

- Enabling Operational Use Cases

- Improved Data Privacy and Compliance

Reason #1: Centralized Management of Customer Behavioral Data

One of the biggest challenges we hear companies mention is the siloed and fragmented nature of their customer behavioral data.

It’s often the case that different teams within the organization (product, marketing, customer support, etc.) have purchased their own tooling such as CDPs or CRMs and attempt to build a customer record in these packaged solutions. However, over time this setup becomes unwieldy as multiple sources of truth emerge, and customer understanding and subsequent workflows become out of sync and disjointed.

It may feel like a monumental and daunting task to centralize all this data. But it doesn’t need to be.

CDIs like Snowplow can be integrated with your data lakehouse, enabling you to centralize the management of customer behavioral data from various digital channels. Because the pipeline is owned and operated by your data and engineering teams, you can feel confident that the behavioral data is accurate, governed, and AI-ready for consumption by various teams.

As Databricks’ Bryan Smith mentioned in the webinar, “having customer behavioral data centralized in a place where different teams can collaborate and work with the data, while ensuring it is governed consistently, is absolutely essential, especially considering the sensitive nature of the data we’re dealing with.”

So, how do Snowplow and Databricks help to achieve this? Well, Snowplow captures customer behavioral data using ‘tags’, which are snippets of code embedded on your website or app. These tags track an array of user actions such as page views, purchases, and form submissions, along with associated metadata like IP addresses, timestamps, and user agents.
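To make this concrete, here is a minimal Python sketch of what a single tracked event might look like once it has been collected. The class name, field names, and the `make_page_view` helper are hypothetical and chosen purely for illustration; they are not Snowplow's actual event schema or tracker API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class BehavioralEvent:
    """Hypothetical, simplified event record (not Snowplow's real schema)."""
    event_type: str      # e.g. "page_view", "purchase", "form_submission"
    user_id: str         # identifier for the visitor
    timestamp: datetime  # when the action happened (UTC)
    ip_address: str      # associated metadata
    user_agent: str      # associated metadata
    properties: Dict[str, Any] = field(default_factory=dict)


def make_page_view(
    user_id: str,
    url: str,
    ip_address: str,
    user_agent: str,
    timestamp: Optional[datetime] = None,
) -> BehavioralEvent:
    """Build a page-view event; defaults the timestamp to the current UTC time."""
    return BehavioralEvent(
        event_type="page_view",
        user_id=user_id,
        timestamp=timestamp or datetime.now(timezone.utc),
        ip_address=ip_address,
        user_agent=user_agent,
        properties={"url": url},
    )


event = make_page_view("user-123", "https://example.com/pricing",
                       "203.0.113.7", "Mozilla/5.0")
print(event.event_type, event.properties["url"])
```

Keeping the action, the user, and the metadata together in one record is what lets every team downstream work from the same definition of an event.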

By centralizing this data in the Databricks Data Intelligence Platform, you can break down data silos and enable teams across your organization to collaborate on the same data effectively.

Reason #2: Real-Time and Historical Analysis Capabilities

The integration of a Customer Data Infrastructure like Snowplow with Databricks really is a game-changer. It gives you the power to analyze customer behavioral data in both real-time and historical contexts.

Snowplow’s real-time data processing capabilities allow you to capture and act on customer events as they happen, enabling timely and personalized experiences. Through Snowplow’s Lake Loader integration with Databricks, gold-level tables are readily available for downstream workloads in the lakehouse.

As Alex Dean explained on the webinar: “It’s by capturing Snowplow customer data and running it through different processes (real-time data ingestion, data transformation with dbt packages, batch and streaming data processing, data storage and querying etc.) that we can start to understand customer intent, and start to use it to drive analytical understanding, and then operational use cases too.” In other words, you can capture Snowplow data in real time and process it through the Databricks Data Intelligence Platform, gaining a deep understanding of your customers in the moment and then acting on it.

But that’s not all. The historical data you have stored in your lakehouse provides you with valuable insights into long-term trends, patterns, and opportunities. When you combine this with your real-time data, you start to develop a holistic view of customer behavior and get closer to building that coveted Customer 360 view. This really is the point where you can drive informed, data-driven decision-making and business growth.

Reason #3: Integration with Advanced Analytics and AI

Snowplow’s next-generation Customer Data Infrastructure, combined with the Databricks Data Intelligence Platform, is a match made in heaven for advanced analytics and AI.

Snowplow captures rich, granular behavioral data from your digital estate which you can stream into your Databricks lakehouse. This enriched data then serves as a foundation for sophisticated machine learning and predictive modeling to build next-gen customer experiences.

Databricks’ powerful processing capabilities and pre-built ML libraries make it easy to apply advanced analytics techniques to your behavioral data, ranging from predictive modeling for marketing attribution to e-commerce funnel analytics and propensity scoring.

Not every organization is at this level of maturity. As TDWI’s Fern Halper noted in the webinar, “About half are at the dashboard self-service stage of analytics, meaning someone is generating reports or dashboards. The other half of organizations are starting to carry out predictive analytics and machine learning or other techniques like natural language processing to analyze their data.”

For the dashboarding half, there is a real risk your organization could fall behind. But by adopting Snowplow and Databricks, you can start to leverage these techniques effectively, turning raw behavioral data into actionable insights that drive business results and customer satisfaction.

Reason #4: Enabling Operational Use Cases

Snowplow and Databricks allow you to go beyond just analytics – they enable you to power operational use cases that directly impact customer experiences.

By leveraging Snowplow’s real-time customer behavioral data in the lakehouse, you can trigger personalized actions and interventions at the right moment.

As Bryan Smith from Databricks mentioned in the webinar, “If you need to do real-time processing to trigger alerts or notifications or send signals back to your digital experience platform to adjust the engagement with your customer there, you can certainly do that.”

There are several operational use cases that you can adopt using Snowplow and Databricks:

- Real-time segmentation for tailoring content and offers

- Next-best offer recommendations

- Fraud detection

- Demand sensing

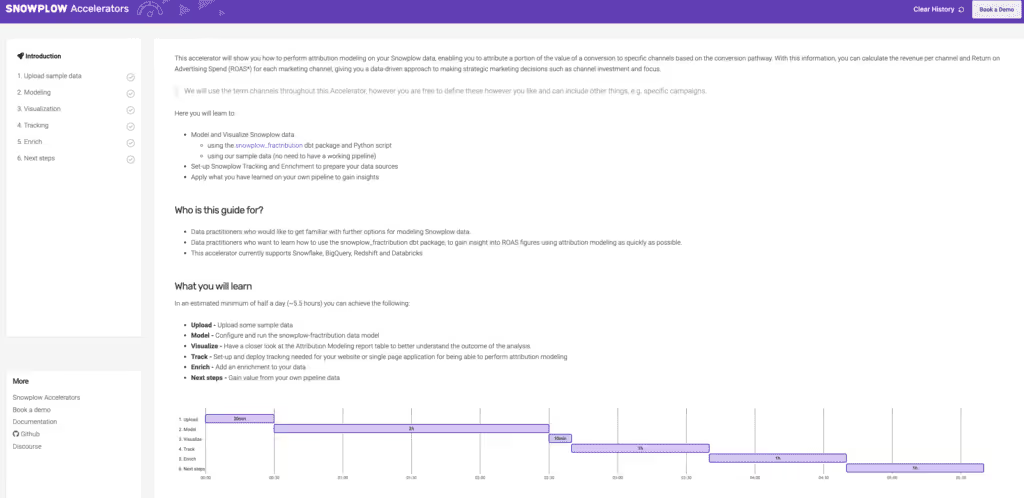

Moreover, Snowplow provides several dbt packages and solutions accelerators that simplify data modeling and transformation, enabling faster time-to-value for your operational use cases.
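As an illustration of the first use case in the list above, real-time segmentation, here is a minimal Python sketch that assigns a visitor to a segment based on their recent events. The `RecentActivitySegmenter` class, the segment names, the thresholds, and the 30-minute window are all assumptions made for the example; they do not come from Snowplow or Databricks, and the sketch assumes events arrive in timestamp order.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Deque, Dict, Tuple


class RecentActivitySegmenter:
    """Hypothetical rule-based segmenter over a sliding time window."""

    def __init__(self, window: timedelta = timedelta(minutes=30)) -> None:
        self.window = window
        # user_id -> queue of (timestamp, event_type), oldest first
        self.events: Dict[str, Deque[Tuple[datetime, str]]] = {}

    def observe(self, user_id: str, event_type: str, timestamp: datetime) -> str:
        """Record an event, drop events older than the window, return the segment."""
        queue = self.events.setdefault(user_id, deque())
        queue.append((timestamp, event_type))
        cutoff = timestamp - self.window
        while queue and queue[0][0] < cutoff:
            queue.popleft()
        return self.segment(user_id)

    def segment(self, user_id: str) -> str:
        """Apply simple illustrative rules to the events still in the window."""
        types = [event_type for _, event_type in self.events.get(user_id, ())]
        if "purchase" in types:
            return "recent_buyer"
        if "add_to_cart" in types:
            return "cart_abandon_risk"
        if types.count("page_view") >= 3:
            return "engaged_browser"
        return "new_visitor"


segmenter = RecentActivitySegmenter()
start = datetime(2024, 1, 1, 12, 0)
for minute in range(3):
    current = segmenter.observe("user-123", "page_view", start + timedelta(minutes=minute))
print(current)
```

In practice, the segment returned here is the kind of signal you might send back to a digital experience platform to tailor content or offers while the visitor is still engaged.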

Reason #5: Improved Data Privacy and Compliance

The security and compliance of customer data are more critical than ever. By integrating Snowplow’s Customer Data Infrastructure with your Databricks lakehouse, you will have better control and governance over sensitive customer information.

As Alex Dean emphasized on the webinar, “The one key thing we see is that if companies can take control of their customer data and centralize it inside their lakehouse, they can start to bring the full toolkit of privacy, consent, and compliance tools to that data. We think that’s a much more positive starting point for the next ten years of consumer privacy versus the old MarTech Wild West of installing lots of tags and firing data all over the place.”

With Snowplow and Databricks, you can apply robust privacy and compliance measures to safeguard customer data. A privacy-by-design approach, combined with Databricks features such as Unity Catalog, helps keep data collection and processing aligned with regulatory requirements such as GDPR and CCPA.

The ability to manage consent, anonymize data, and enforce data retention policies allows you to build trust with your customers and mitigate the risks associated with data breaches and non-compliance. As a result, you can confidently nurture long-term customer relationships and maintain a strong reputation in the digital ecosystem.
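To show what these controls can look like in code, here is a minimal Python sketch using only the standard library. The function names, the prefix lengths used for IP masking, and the 30-day retention period in the usage lines are illustrative assumptions rather than Snowplow or Databricks features. Note that keyed hashing is pseudonymization, not full anonymization.

```python
import hashlib
import hmac
import ipaddress
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash (stable for a given secret)."""
    return hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_ip(ip: str) -> str:
    """Zero out the host part of an IP address (/24 for IPv4, /48 for IPv6)."""
    address = ipaddress.ip_address(ip)
    prefix = 24 if address.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


def within_retention(event_time: datetime, now: datetime, retention_days: int) -> bool:
    """Return True if the event is still inside the retention period."""
    return now - event_time <= timedelta(days=retention_days)


secret = b"replace-with-a-managed-secret"  # assumption: stored in a secrets manager
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
event_time = datetime(2024, 5, 20, tzinfo=timezone.utc)

print(pseudonymize("user-123", secret)[:12])
print(mask_ip("203.0.113.77"))
print(within_retention(event_time, now, retention_days=30))
```

Applying steps like these once, centrally, means every team that consumes the data inherits the same protections.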

Maximize the Value of Your Customer Behavioral Data

So there you have it. By integrating Snowplow’s Customer Data Infrastructure with the Databricks Data Intelligence Platform, you can maximize the value of your customer behavioral data and unlock new and exciting use cases for your business.

Centralized data management, real-time and historical data analysis, advanced analytics and AI, advanced operational use cases, and enhanced data privacy and compliance are just some of the reasons for integrating Snowplow with Databricks.

This powerful combination is already helping some of the world’s leading data-driven companies to make better decisions, personalize customer experiences, and drive business growth while ensuring the highest standard of data governance and security.

You too can embrace the future of customer data management for your organization and realize the full value of your customer behavioral data with Snowplow and Databricks.

Want to Find Out More?

Watch back our joint webinar with Databricks – Leveraging Customer Data for Enhanced Analytics and AI in the Lakehouse – to learn more about this next-generation integration and how you can get started.