Snowplow for media part 4: what can we do with the data now we're growing?

We recommend you read the previous posts on this topic before diving into this article to ensure you have all the context you need:

Bear in mind there is one more post in this series you can read after this one: What can we do with the data, we’re well established.

What can we do with the data as our data team grows?

- Advertiser analytics

- Track critical events server-side

- Track app installs and social media clicks

- Funnels to improve UX and inform paywall decisions

- Further user stitching

- Informed content creation

What can we do with the data as our data team grows?

Now we’re looking at a data team that is growing and has several analysts and maybe some spare engineering resources as the company is starting to see real value in the analytics you have served to date.

We’re working under the assumption that you’ve already taken all the steps recommended for a data team that’s just starting out.

That means that at this point you have (where applicable):

- Aggregated and refined web and mobile data

- Joined data from web and mobile

- Created a marketing attribution model

- Understood what drives user retention

Now that you are a team with several analysts then you can take this a bit further and take the following steps:

- Advertiser analytics

- Track critical events server-side

- Use webhooks to track app installs and social media clicks

- Understand the conversion funnels

- Begin building a more intelligent user stitch

- Make informed content creation decisions

Again, let’s take a non-technical look at how each of these could be achieved with Snowplow. All the ideas mentioned here are things we have seen Snowplow users do.

1. Advertiser analytics

If you rely on ad revenue rather than subscriptions or donations (or even a mixture of all 3), some key questions can be answered with event level data.

- Which users should we look to find more of since they are attracting high bid values

- What content attracts many and/or high bids (not just article name, but product mentions in content, themes, personalities etc., all captured by custom entities)

- How much is each page view worth

- Which authors are the best at driving high CPMs

Successful bid data can be tracked as Snowplow events server-side or if the data already exists in tables in your warehouse, it can be easily joined to Snowplow user engagement data in your warehouse. If you use prebid.js, all the bid data the user browser receives can be tracked as Snowplow events allowing you to build a great understanding of ad space value in your product since you know which impression received which bids.

A better understanding of what drives DSP decisions can lead to large gains in ad revenue and is something that can be quite hard to understand at a granular level with one-size-fits-all analytics solutions.

All this great analysis can also then be served back to advertisers and affiliates to encourage confidence and therefore further investment.

2. Track critical events server-side

Rather than rewrite what has already been brilliantly covered by our Customer Success Lead, Rebecca Lane, please read her blog post on the subject here.

In short, ensure that you are always tracking your mission critical events, such as subscriptions and donations, server-side as this is the most robust way to track these. Doing this also helps maintain good data governance by limiting what information is pushed to the datalayer.

3. Track app installs and social media clicks

Use Snowplow’s third party integrations to track app installs and social media clicks (as examples). Any third party data can be sent through the Snowplow pipeline so that it lands in your warehouse in the same format as all the other events. This means you can use whatever service you are comfortable with to track social media clicks and send this event to the Snowplow pipeline. A great blog post that goes into more detail on this by Snowplow Co-Founder Yali Sassoon can be found here.

With these events in your data warehouse, you can gain an even better understanding of your user journeys. You can also add this data to your attribution model and refine it further. Remember, you can figure out which user installed the app or clicked on the Facebook link using the third party cookie or IDFA/IDFV as described in the earlier section on how to join mobile and web data.

4. Funnels to improve UX and inform paywall decisions

We have written an eBook on the subject of Product Analytics which you can download here. To look specifically at user journeys, most Snowplow customers can take advantage of our Indicative integration with a free Indicative account. Indicative is one of the best tools out there for understanding how users move through your product. Do get in touch for more information or to request a demo!

Example: the signup process

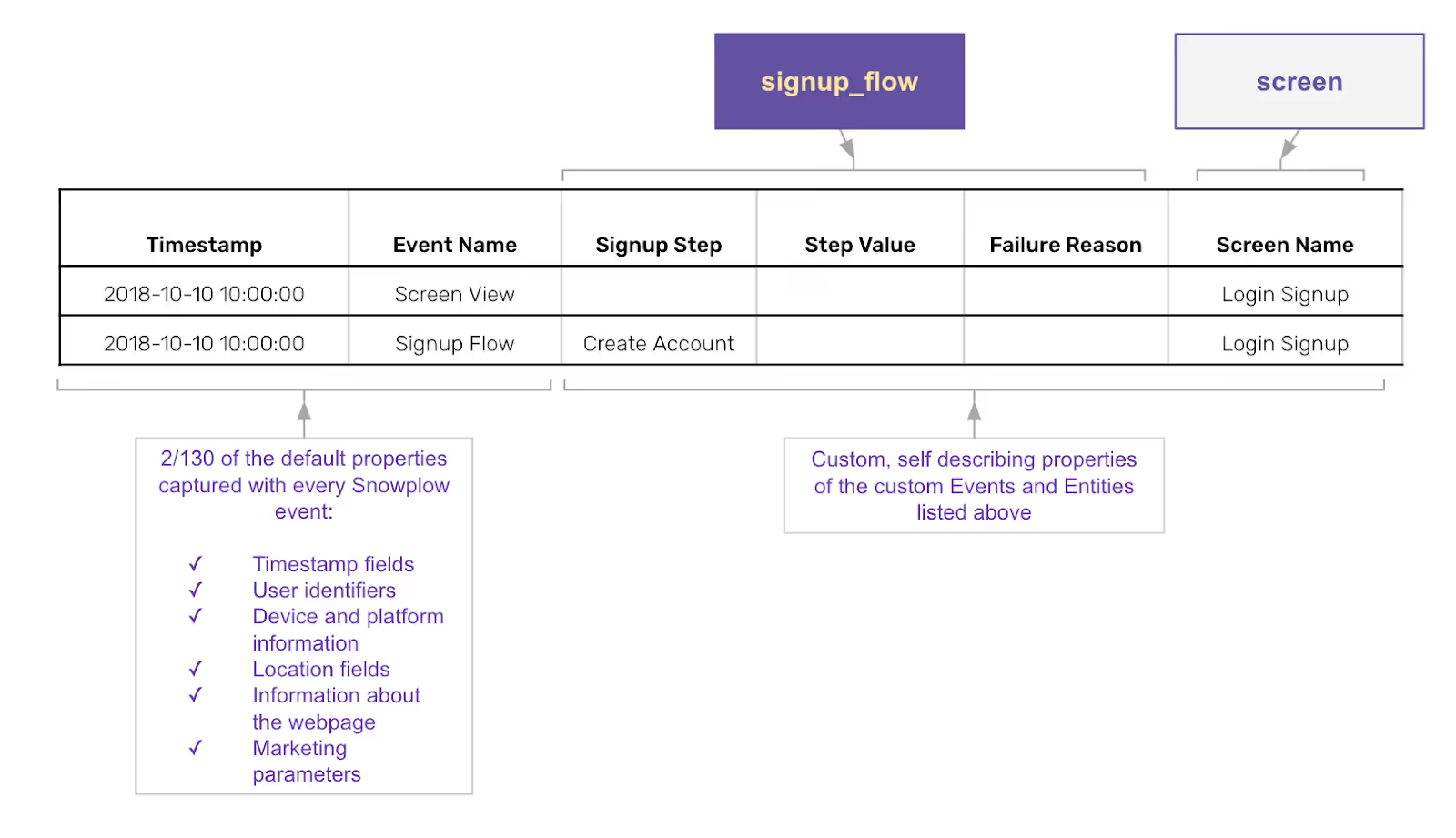

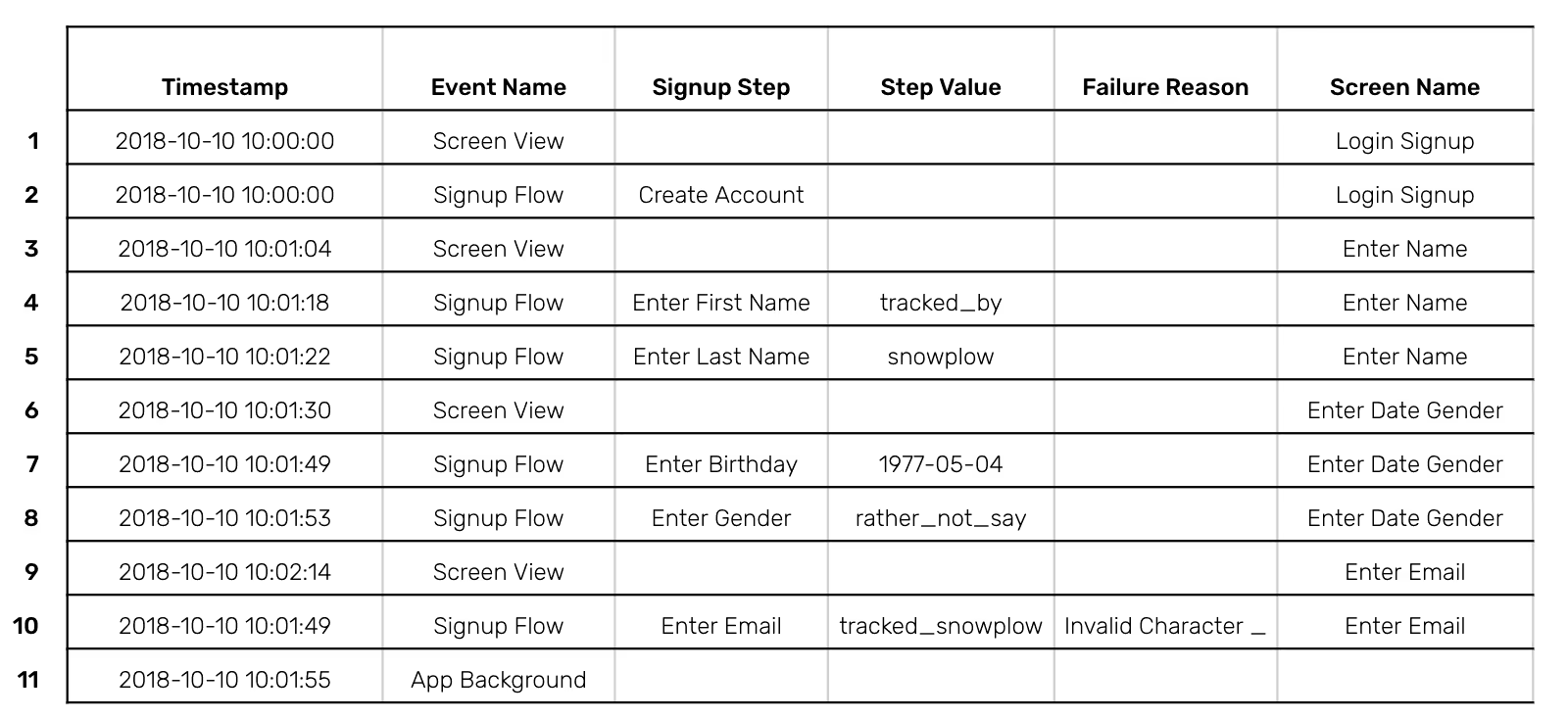

Let’s assume the product manager wants some insights into how users move through the signup process before subscribing. The Snowplow data they would work with may look something like this:

From this table, some quick insights we have:

- Which steps took the longest to complete (step 2)

- The user gave up after their email address attempt had an invalid underscore character (steps 10 and 11)

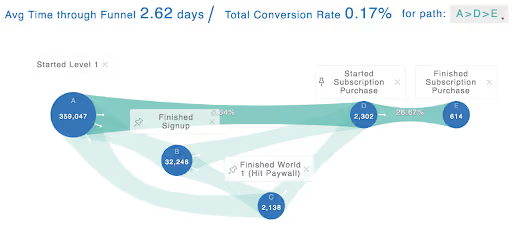

This is only one user so the data isn’t too reliable, so now that you have an understanding of the data, it is easy to aggregate up to millions of sessions and users and show this in Indicative:

The UX designer and product manager can work on this to maximise conversions where possible.

Importantly, funnels can be built based on paywall experiments. A/B test data for different paywall strategies can be pulled into Indicative to inform decisions on what paywall logic to employ. Are there unsubscribed users who exhibit traits of high LTV subscribers engaging in certain content? Can this be taken advantage of to push them to subscribe?

Using data to drive paywalling decisions means that you won’t be giving away content for less than it is worth.

5. Further user stitching

Snowplow was built as a tool to help companies understand their users and take actions from that understanding. A key step in that process is to evolve the user stitch. The more reflective the user stitch is of the truth, the more confidence we have when bucketing users. They can be bucketed based on which are the most valuable and what influences them. Armed with knowledge on how to influence the most valuable users, very effective actions can be taken off the data.

An example of what I mean by evolving the user stitch is to look at the case where multiple people browse your site with the same browser (a family, office or school) and not all of them identify themselves in every session.

Let’s steer clear of machine learning as a one stop solution for now since we are working under the assumption the data team consists of 4-5 data analysts at this stage. What can you do to build on the user stitch of the previous section?

Assuming each family member identifies themselves at some point, you have some sessions where you have a high confidence that you know who they are. You can start assigning probabilities to future sessions on the same device based on behaviour.

- One family member (Josephine) reads informational content and tends not to comment

- The other family member (Joseph) watches many videos and leaves comments often

You can build a simple ranking of frequent behaviour using the event level data. Then, when a session starts and the user doesn’t identify themselves you can guess who they are.

- They search for “snowplo” and open a “Snowplows” article about how those machines work – might be Josephine

- They scroll 75% of the way through the article – more likely Josephine

- In the sidebar the spot a video and play it – could actually be Joseph

- They then watch a related video and leave a string of comments – quite sure its Joseph

This is a very simplified example just meant to illustrate what you can do with access to rich, event level data. The model that you build will be specific to your business and will be designed after thorough exploration of this rich dataset.

6. Informed content creation

Having already answered some basic questions about what content is most popular in User engagement on the website we can start to think about what content is most sought after at what time. This can then inform what content is created.

Using your data to drive an understanding of which content is being engaged with by which users means a content roadmap can be built. This allows for highly efficient spend on its creation and means that only the content most likely to encourage engagement and therefore higher ad revenue, higher subscription rates and lower churn will be produced.

The producers of content can also be served dashboards on how their content performs. This is made easy as you can track a custom property in a content entity which contains a creator_id. Having access to all the event level data means you can simply filter all events for that creator_id and serve the same dashboard to every creator.