Let’s Begin

Hi! I’m Ryan, an analytics engineer at Snowplow. I joined about 6 months ago.

One of the things I often get asked is how Snowplow compares to CDPs like Rudderstack. And while I’d heard all the right answers—Snowplow offers richer tracking, better structure, and more transparency—I wanted to get a hands-on feel for the difference.

Especially now, as Snowplow moves deeper into shift-left data quality, governance, and powering agentic AI systems, I wanted to test how these foundational differences play out in real life.

So I decided to build a test project. Naturally, I created a dating app.

As a 20-something single guy living in London, I had plenty of inspiration—and more importantly, I had a great way to generate behavioral data across multiple user journeys, screens, and decision points.

The app—DatingXS—wasn’t just a fun experiment. It gave me a perfect sandbox to compare Snowplow vs. Rudderstack across data modeling, validation, querying, and even AI-readiness.

What does good data look like?

I’ve been working with data my whole career—both as a consumer and a creator. So I came into this experiment with a few "red flag" questions I always look for:

- Are there multiple ways to do the same thing that produce different results?

- Is it easy to generate bad data by accident?

- Is the data discoverable and explainable?

- Is my logic duplicated across pipelines?

- What happens when the one person who knows everything leaves?

These aren’t just annoyances—they’re blockers for scale, governance, and trust.

At Snowplow, we tackle these issues at the source. With shift-left validation, explicit schema versioning, and semantic modeling, we make it harder to get data wrong in the first place.

Why? Because AI systems, real-time operations, and even your basic dashboards all break without trust in the data.

That’s what this whole experiment was about: testing what it feels like to work with these tools when you're the engineer, the analyst, and the product manager all at once, because at many companies, that's the reality.

Key Differences: Rudderstack vs. Snowplow in 2025

Rudderstack is a Warehouse Native CDP. It’s focused on routing data and activating it in downstream tools.

Snowplow is a Customer Data Infrastructure (CDI). It’s built for teams who want to own their data end-to-end, with full observability, governance, and flexibility, so they can feed analytics, AI models, and operational systems with complete confidence. In practice, that means:

- Shift-left validation: Schema enforcement before data hits the warehouse

- Rich, structured, event-level data: Not sampled or flattened

- Composable by design: Easily feed clean data into your warehouse, lakehouse, or AI pipelines

- Governance built-in: Data contracts, schema versioning, and auditability

You’re not locked into one tool’s vision of the customer journey—you define your own.
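Versioning is explicit in how Snowplow names schemas: every event and entity references an Iglu URI of the form `iglu:<vendor>/<name>/<format>/<version>`, where the version follows SchemaVer (`MODEL-REVISION-ADDITION`). The TypeScript sketch below parses such a URI and classifies a version bump. The `com.datingxs` vendor and `like_sent` name are hypothetical, and `parseIgluUri` and `changeKind` are illustrative helpers, not part of any Snowplow library.

```typescript
// Parse an Iglu URI and classify a SchemaVer bump (illustrative helpers only).

interface SchemaRef {
  vendor: string;
  name: string;
  format: string;
  model: number;
  revision: number;
  addition: number;
}

type ChangeKind = "model" | "revision" | "addition" | "none";

const IGLU_URI =
  /^iglu:([A-Za-z0-9._-]+)\/([A-Za-z0-9_-]+)\/([A-Za-z0-9_-]+)\/(\d+)-(\d+)-(\d+)$/;

function parseIgluUri(uri: string): SchemaRef {
  const match = IGLU_URI.exec(uri);
  if (match === null) {
    throw new Error(`Not a valid Iglu URI: ${uri}`);
  }
  const [, vendor, name, format, model, revision, addition] = match;
  return {
    vendor,
    name,
    format,
    model: Number(model),
    revision: Number(revision),
    addition: Number(addition),
  };
}

// SchemaVer semantics:
//   MODEL    - breaking change; prevents interaction with any historical data
//   REVISION - may prevent interaction with some historical data
//   ADDITION - compatible with all historical data
function changeKind(from: SchemaRef, to: SchemaRef): ChangeKind {
  if (from.vendor !== to.vendor || from.name !== to.name || from.format !== to.format) {
    throw new Error("Can only compare versions of the same schema");
  }
  if (from.model !== to.model) return "model";
  if (from.revision !== to.revision) return "revision";
  if (from.addition !== to.addition) return "addition";
  return "none";
}

const v100 = parseIgluUri("iglu:com.datingxs/like_sent/jsonschema/1-0-0");
const v101 = parseIgluUri("iglu:com.datingxs/like_sent/jsonschema/1-0-1");
const v200 = parseIgluUri("iglu:com.datingxs/like_sent/jsonschema/2-0-0");
console.log(changeKind(v100, v101)); // addition
console.log(changeKind(v100, v200)); // model
```

Because the version lives in the schema reference itself, a consumer can tell from the URI alone whether a change is safe to read alongside older data.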

My Journey – creating and tracking a dating app

Comparing two tools like Snowplow and Rudderstack is no easy task, so I started simple, using the Rudderstack tools and a basic Snowplow pipeline.

I began by building a mockup of a dating app, which I called DatingXS, using Next.js to generate behavioral events that I sent to BigQuery. My original plan was to send this data to Databricks, but unfortunately Rudderstack’s self-hosted destination hub (Control Plane Lite) is no longer supported and doesn’t offer Databricks as a destination.

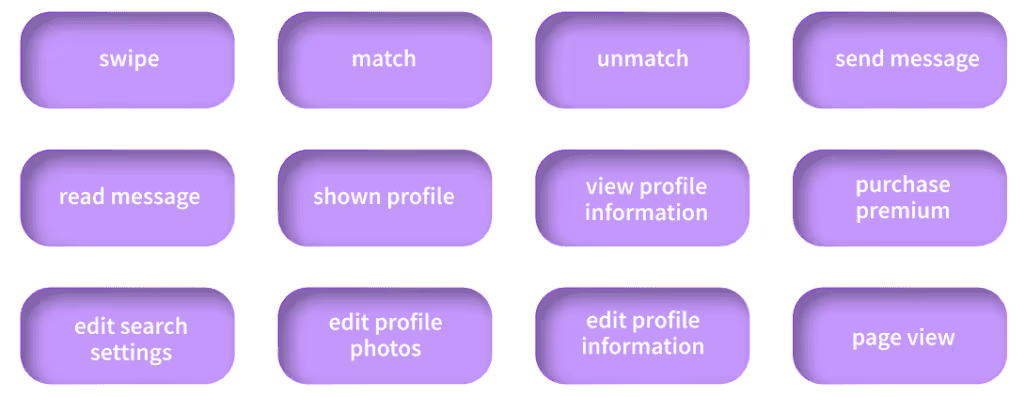

I had 12 events in total: 11 custom events and a page view.

Both tools handled basic tracking on a simple app, and the tracking code was similar between them. One big difference was Snowplow’s use of schemas and entities, which I’ll cover below. After tracking some events from my app (sadly, I did not find love), I was ready to compare the outputs and the experience.

Rudderstack creates more tables than an IKEA showroom

Snowplow has a single atomic events table, with all the attributes of my tracked events and the events themselves stored in the same place.

Rudderstack instead generates a table (and a view) for every event type. Even with my XS implementation, this made my analytics queries across multiple events extremely long and full of joins. They weren’t necessarily more complex, but they were more tedious and harder to read. Worse, having each event in its own table AND in the unified (but thin) tracks table meant there were multiple ways to answer the same question, and they could give different results.

That to me is a red flag.
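To make the layout difference concrete, here is a small in-memory TypeScript sketch. The event names `profile_viewed` and `like_sent` and their fields are hypothetical, not DatingXS’s actual tracking plan. It answers one cross-event question (how many users viewed a profile and then sent a like) against a single atomic table and against per-event tables. The per-event layout needs an extra stitching step first, which in SQL becomes a UNION or JOIN for every event type involved.

```typescript
// Two warehouse layouts for the same hypothetical events.

interface AtomicEvent {
  userId: string;
  eventName: string;
  timestamp: number; // epoch millis
}

// One table per event type: the event name lives in the table name, not the row.
type PerEventTables = Record<string, { userId: string; timestamp: number }[]>;

// Single atomic table: the question is a filter over one collection.
function usersWhoViewedThenLiked(events: AtomicEvent[]): number {
  // Earliest profile view per user.
  const firstView = new Map<string, number>();
  for (const e of events) {
    if (e.eventName !== "profile_viewed") continue;
    const prev = firstView.get(e.userId);
    if (prev === undefined || e.timestamp < prev) firstView.set(e.userId, e.timestamp);
  }
  // Users who sent a like after their first profile view.
  const converted = new Set<string>();
  for (const e of events) {
    const viewedAt = firstView.get(e.userId);
    if (e.eventName === "like_sent" && viewedAt !== undefined && e.timestamp > viewedAt) {
      converted.add(e.userId);
    }
  }
  return converted.size;
}

// Per-event tables must be stitched back together (a UNION ALL per event
// type in SQL) before the same logic can run.
function unionTables(tables: PerEventTables, eventNames: string[]): AtomicEvent[] {
  return eventNames.flatMap((eventName) =>
    (tables[eventName] ?? []).map((row) => ({ ...row, eventName })),
  );
}
```

Both layouts give the same answer once stitched together; the difference is that every additional event type in a question adds another table to union or join, which is where the query length comes from.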

Rudderstack ordered columns seemingly at random

With Rudderstack, the columns were also ordered in what appeared to be a completely random way in each table.

The same field could be column 3 in one table and column 45 in another.

All these issues meant that I found data discovery and query creation a real challenge, as visually exploring the data in a preview was not at all helpful.

Another red flag.

Snowplow’s consistent structure and enforced schemas meant I could reason about my data confidently—an essential piece when building governed pipelines or training AI models that rely on stability.

What is an entity and why are they useful?

Snowplow pioneered the concept of entities – the nouns of my data – which can be reused across different events.

In my app I had 2 entities, user and profile, each with their own schema to define them.

This meant I could attach my user information to all my events (using a global context), and attach the profile to just those that it was relevant to.

Rudderstack has no equivalent concept. I had to manually add all the profile attributes to each relevant event, mixing them in with the event’s own attributes.

With Snowplow, entities were not only reusable and consistent across events; because Snowplow loads each entity into its own nested column, it was also easy to see which attributes belonged to which entity and which belonged to the event itself.

This structure makes Snowplow data more explainable, maintainable, and AI-consumable.
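The sketch below shows the shape of the data rather than any tracker API. The schema URIs and fields are hypothetical, and in a real app the Snowplow browser tracker’s self-describing events and global contexts handle this attachment for you. It contrasts attaching the user and profile entities as separate, schema-tagged objects with copying their attributes straight into the event properties, where same-named fields (here `id`) silently overwrite each other.

```typescript
// Data-shape sketch with hypothetical schemas; not a tracker API.

interface SelfDescribingJson {
  schema: string; // Iglu URI identifying the schema the data conforms to
  data: Record<string, unknown>;
}

interface EventWithEntities {
  event: SelfDescribingJson;
  context: SelfDescribingJson[]; // attached entities, each tagged with its own schema
}

const USER_SCHEMA = "iglu:com.datingxs/user/jsonschema/1-0-0";
const PROFILE_SCHEMA = "iglu:com.datingxs/profile/jsonschema/1-0-0";

// Entity style: the user entity goes on every event (like a global context);
// other entities, such as a profile, are attached only where relevant.
function withEntities(
  user: Record<string, unknown>,
  event: SelfDescribingJson,
  extra: SelfDescribingJson[] = [],
): EventWithEntities {
  return { event, context: [{ schema: USER_SCHEMA, data: user }, ...extra] };
}

// Flattened style: entity attributes are copied into the event's own properties.
// Later sources win, so same-named fields overwrite each other without warning.
function flattenProperties(
  event: SelfDescribingJson,
  entities: SelfDescribingJson[],
): Record<string, unknown> {
  const merged: Record<string, unknown> = {};
  for (const entity of entities) Object.assign(merged, entity.data);
  Object.assign(merged, event.data);
  return merged;
}

const user = { id: "u1", plan: "free" };
const profile: SelfDescribingJson = { schema: PROFILE_SCHEMA, data: { id: "p9", age: 29 } };
const likeSent: SelfDescribingJson = {
  schema: "iglu:com.datingxs/like_sent/jsonschema/1-0-0",
  data: { target_profile_id: "p42" },
};

const structured = withEntities(user, likeSent, [profile]);
const flat = flattenProperties(likeSent, [{ schema: USER_SCHEMA, data: user }, profile]);
console.log(structured.context.map((c) => c.schema)); // user and profile schemas, kept apart
console.log(flat.id); // p9 (the user's id was overwritten by the profile's id)
```

In the entity style, each entity keeps its own schema and its own place in the payload, which is what makes it possible to load each one into a separate, clearly named column.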

Data richness

In terms of out-of-the-box fields, Rudderstack provides about 45 unique fields across its tracks, users, and per-event tables (with many of those fields duplicated across tables). Snowplow provides 150 or more, depending on which one-line contexts you choose to enable.

Snowplow also adds information through enrichments, such as IP lookups (including geolocation) and marketing campaign attribution, and through tracker features such as page pings, which measure scroll depth and engaged time. These make a huge difference for behavioral modeling, LTV scoring, agentic AI, and personalization.

More importantly: this context is added upstream, before hitting your warehouse.

That’s shift-left in action.

Rudderstack’s definitions and validation were ineffective

Setting up Snowplow required me to define schemas for each event and entity. That meant thinking through data structures and naming conventions from day one.

Rudderstack? None of that. I could send anything.

It felt fast at first… until I made a mistake. A typo created a new table. A data type mismatch broke queries. In one case, I changed a field from integer to decimal, and Rudderstack just rounded the value—with no alert, no log, and no ability to recover the lost data.

For a small app, that’s annoying. For a business using that data for financial or ML modeling? That’s dangerous.

This is why Snowplow’s validation system matters. It catches issues at ingestion, before data hits your warehouse. You can also test schemas locally with Snowplow Micro, so problems surface during development instead of in production.
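Here is a deliberately tiny sketch of validate-before-load. It is not Snowplow’s validator, which checks events against JSON Schemas resolved from an Iglu registry; the `MiniSchema` format, the `like_sent` schema, and the `match_score` field are all hypothetical. The point is the behavior: a misspelled schema or an integer field that receives a decimal produces an explicit error instead of a new table or a silently rounded value.

```typescript
// Validate-before-load sketch with a hypothetical, minimal schema format.

type FieldType = "integer" | "number" | "string";

interface MiniSchema {
  required: string[];
  // Fields not listed here are rejected, similar to additionalProperties: false.
  properties: Record<string, FieldType>;
}

interface ValidationResult {
  ok: boolean;
  errors: string[];
}

const typeChecks: Record<FieldType, (value: unknown) => boolean> = {
  integer: (v) => typeof v === "number" && Number.isInteger(v),
  number: (v) => typeof v === "number" && Number.isFinite(v),
  string: (v) => typeof v === "string",
};

function validate(
  registry: Map<string, MiniSchema>,
  schemaUri: string,
  data: Record<string, unknown>,
): ValidationResult {
  const schema = registry.get(schemaUri);
  if (schema === undefined) {
    // A typo in the schema name is an error, not a new table.
    return { ok: false, errors: [`unknown schema: ${schemaUri}`] };
  }
  const errors: string[] = [];
  for (const key of schema.required) {
    if (!(key in data)) errors.push(`missing required field "${key}"`);
  }
  for (const [key, value] of Object.entries(data)) {
    const expected = schema.properties[key];
    if (expected === undefined) {
      errors.push(`unexpected field "${key}"`);
    } else if (!typeChecks[expected](value)) {
      // A decimal in an integer field is reported, never rounded.
      errors.push(`field "${key}" should be ${expected}, got ${JSON.stringify(value)}`);
    }
  }
  return { ok: errors.length === 0, errors };
}

const LIKE_SENT = "iglu:com.datingxs/like_sent/jsonschema/1-0-0";
const registry = new Map<string, MiniSchema>([
  [
    LIKE_SENT,
    { required: ["target_profile_id"], properties: { target_profile_id: "string", match_score: "integer" } },
  ],
]);

// One error: match_score should be integer, got 4.5
console.log(validate(registry, LIKE_SENT, { target_profile_id: "p42", match_score: 4.5 }).errors);
```

In a real Snowplow pipeline, events that fail validation are kept as failed events along with the original payload, so they can be inspected and, in many setups, recovered rather than silently altered or lost.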

Extra tooling to test my data

Snowplow Micro let me test all of my tracking locally. It validated every event against my defined schemas and gave me real-time feedback. That’s a huge win.

With Rudderstack, I had to wait for the batch job to run—and then go dig into the warehouse to see what landed.

That delay slows development and introduces risk. With Snowplow, you shift that quality left.
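Micro can also be checked from an automated test. The sketch below assumes Micro is running locally on its default port 9090 and that its `/micro/all` endpoint returns a JSON summary with `total`, `good`, and `bad` counts; check the Micro documentation for your version before relying on either. `fetchMicroSummary` and `assertAllEventsValid` are hypothetical helper names, and the fetch function is passed in so it can be stubbed.

```typescript
// Check a local Snowplow Micro instance from a test.
// Assumptions: default port 9090 and a /micro/all summary endpoint.

interface MicroSummary {
  total: number;
  good: number;
  bad: number;
}

// Minimal fetch-like signature: Node 18+'s global fetch fits it, and so does a test stub.
type FetchLike = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

async function fetchMicroSummary(
  fetchFn: FetchLike,
  baseUrl = "http://localhost:9090", // assumed default Micro address
): Promise<MicroSummary> {
  const res = await fetchFn(`${baseUrl}/micro/all`);
  if (!res.ok) throw new Error("Snowplow Micro returned an error response");
  return (await res.json()) as MicroSummary;
}

// Throws if any event failed validation, or if the number of valid events
// differs from what the test expected.
function assertAllEventsValid(summary: MicroSummary, expectedGood?: number): void {
  if (summary.bad > 0) {
    throw new Error(`${summary.bad} event(s) failed schema validation`);
  }
  if (expectedGood !== undefined && summary.good !== expectedGood) {
    throw new Error(`expected ${expectedGood} valid events, got ${summary.good}`);
  }
}
```

In Node 18 or later, an end-to-end test could call `assertAllEventsValid(await fetchMicroSummary(fetch))` after the app has fired its events, so a schema violation fails the test run instead of showing up later in the warehouse.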

Final Summary

The red flags I hit with Rudderstack were manageable for a one-person team. But as DatingXS grows into DatingXL, with more teams, more data, and more decisions, the cracks become canyons.

Snowplow’s approach to governance, shift-left validation, and AI readiness isn’t just a luxury—it’s how you future-proof your data.

Whether you're training LLMs, powering personalization, or just trying to maintain trustworthy dashboards, Snowplow gives you:

- Consistent structure

- Schema validation at the edge

- Full context for downstream AI/ML use

- A governed pipeline that scales with your team

Unlike DatingXS, this one’s built to last.

And unlike my dating app… this one’s a match.